What Is AWS Compute Optimizer?

AWS Compute Optimizer is a service from Amazon Web Services (AWS) that is available at no additional charge; only its optional enhanced infrastructure metrics feature is paid. It uses machine learning to analyze the utilization of your AWS compute resources and recommends how to configure them. It helps reduce cost by identifying over-provisioned resources that can be right-sized, and it helps improve performance by identifying under-provisioned resources that lack the capacity your workloads need.

You can access AWS Compute Optimizer from the AWS Management Console, the AWS CLI, or the AWS SDKs.
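Compute Optimizer only generates recommendations for accounts that have opted in. The following is a minimal Python sketch of checking and activating enrollment. It assumes `client` is a boto3 Compute Optimizer client. `ensure_enrolled` is a hypothetical helper name, and the client is passed in so the function can be exercised without AWS credentials.

```python
def ensure_enrolled(client) -> str:
    """Opt this account in to Compute Optimizer unless it already is.

    `client` is assumed to be boto3.client("compute-optimizer"); any object with
    the same two methods works, which makes the function easy to test.
    """
    status = client.get_enrollment_status()["status"]
    if status in ("Active", "Pending"):
        # Already opted in, or the opt-in request is still being processed.
        return status
    # Opt in only the calling account, not the member accounts of an organization.
    response = client.update_enrollment_status(
        status="Active", includeMemberAccounts=False
    )
    return response["status"]
```

With real credentials, the call would be `ensure_enrolled(boto3.client("compute-optimizer"))`.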

This is part of a series of articles about AWS cost optimization.

In this article:

- How AWS Compute Optimizer Works

- Metrics Analyzed by AWS Compute Optimizer

- Using AWS Compute Optimizer to Get Recommendations for EC2 Instances

How AWS Compute Optimizer Works

AWS Compute Optimizer analyzes historical utilization data, collected as Amazon CloudWatch metrics, to predict how a resource will perform. It takes into account factors such as CPU utilization, memory utilization, and network I/O. Based on this analysis, it classifies each resource as under-provisioned, over-provisioned, or optimized.

Once the analysis is done, Compute Optimizer provides detailed recommendations. These can include resizing an instance up or down, or moving a workload to a different instance type or family, such as a newer generation or an AWS Graviton-based type.
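To make the three-way classification concrete, here is a toy illustration. It is not Compute Optimizer's actual machine-learning model. It flags a resource as under-provisioned when any specification's peak utilization exceeds a hypothetical high-water mark, and as over-provisioned when nothing is saturated but at least one specification's peak stays below a hypothetical low-water mark. The thresholds and the name `classify_instance` are assumptions.

```python
def classify_instance(samples: dict[str, list[float]],
                      high: float = 90.0, low: float = 40.0) -> str:
    """Toy classifier, NOT Compute Optimizer's model.

    `samples` maps a specification name (e.g. "cpu", "memory") to utilization
    percentages observed over the lookback window. `high` and `low` are
    hypothetical thresholds chosen only for illustration.
    """
    # Peak utilization per specification; empty series are ignored.
    peaks = {spec: max(values) for spec, values in samples.items() if values}
    if any(peak > high for peak in peaks.values()):
        # At least one specification cannot keep up with the workload.
        return "Underprovisioned"
    if any(peak < low for peak in peaks.values()):
        # Nothing is saturated, and at least one specification could shrink.
        return "Overprovisioned"
    return "Optimized"
```

Using peaks rather than averages mirrors the intuition that a resource must handle its busiest moments. The real service weighs many more signals than this.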

AWS Compute Optimizer can provide specific recommendations for the following types of AWS resources:

- Elastic Compute Cloud (EC2) instances: Evaluates metrics like CPU utilization and instance usage patterns to recommend appropriate instance types or sizes, potentially leading to cost savings and improved performance.

- Auto Scaling groups: Recommends instance types for the group based on the observed utilization of its instances, helping the group use capacity efficiently as demand changes.

- Elastic Block Store (EBS) volumes: Assesses volume utilization, such as IOPS and throughput, and suggests changes in volume type, size, or provisioned performance for better performance and cost-effectiveness.

- Lambda functions: Analyzes function execution patterns, such as invocation frequency and execution duration, and recommends an appropriate memory size for each function.

- Amazon Elastic Container Service (ECS) on Fargate: Evaluates container resource utilization and suggests CPU and memory configurations that align performance needs with cost.

- Commercial software licenses: Assesses how licensed software, such as Microsoft SQL Server running on EC2, is used and recommends lower-cost license options to avoid overspending.
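Each of these resource types has its own Compute Optimizer API operation. Below is a minimal sketch that follows `nextToken` pagination to collect every recommendation for one resource type. It assumes `client` is a boto3 Compute Optimizer client, and `fetch_all` is a hypothetical helper. The operation names and response keys in the mapping reflect our reading of the Compute Optimizer API reference and are worth verifying against it.

```python
# boto3 method name and response key holding the recommendation list,
# per resource type (as we understand the Compute Optimizer API).
RECOMMENDATION_APIS = {
    "ec2": ("get_ec2_instance_recommendations", "instanceRecommendations"),
    "auto_scaling": ("get_auto_scaling_group_recommendations",
                     "autoScalingGroupRecommendations"),
    "ebs": ("get_ebs_volume_recommendations", "volumeRecommendations"),
    "lambda": ("get_lambda_function_recommendations",
               "lambdaFunctionRecommendations"),
    "ecs": ("get_ecs_service_recommendations", "ecsServiceRecommendations"),
    "license": ("get_license_recommendations", "licenseRecommendations"),
}


def fetch_all(client, resource: str, **params) -> list[dict]:
    """Collect every recommendation for one resource type, following nextToken."""
    method_name, key = RECOMMENDATION_APIS[resource]
    method = getattr(client, method_name)
    results: list[dict] = []
    token = None
    while True:
        kwargs = dict(params)
        if token:
            # boto3 rejects nextToken=None, so pass it only when we have one.
            kwargs["nextToken"] = token
        page = method(**kwargs)
        results.extend(page.get(key, []))
        token = page.get("nextToken")
        if not token:
            return results
```

Extra keyword arguments such as `maxResults` are forwarded unchanged to every page request.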

Related content: Read our guide to AWS Cost Explorer

Metrics Analyzed by AWS Compute Optimizer

AWS Compute Optimizer analyzes a wide range of Amazon CloudWatch metrics to produce its recommendations. By default, it looks back over the previous 14 days of metric data.

EC2 Instance Metrics

EC2 instance metrics show how your instances are performing. They include CPU utilization, memory utilization, network throughput, and disk I/O. Memory metrics are available only if the CloudWatch agent is installed on the instance. AWS Compute Optimizer uses these metrics to determine whether each instance is under-provisioned, over-provisioned, or optimized.

For example, consistently high CPU utilization might indicate that an instance is under-provisioned, while consistently low memory utilization might indicate that it is over-provisioned. AWS Compute Optimizer takes these metrics into account when recommending how to optimize your instances.
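When you retrieve EC2 recommendations through the API, the observed metrics arrive as a `utilizationMetrics` list of name, statistic, and value entries on each recommendation. A minimal sketch follows. It assumes `recommendation` is one element of the `instanceRecommendations` list returned by `get_ec2_instance_recommendations`, and `max_utilization` is a hypothetical helper. If the CloudWatch agent isn't publishing memory metrics, the result may have no `Memory` entry.

```python
def max_utilization(recommendation: dict) -> dict[str, float]:
    """Map metric name -> value for the 'Maximum' statistic of one EC2 recommendation.

    Metric names such as "Cpu" and "Memory" are kept exactly as the API returns them.
    """
    return {
        metric["name"]: metric["value"]
        for metric in recommendation.get("utilizationMetrics", [])
        if metric.get("statistic") == "Maximum"
    }
```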

EBS Volume Metrics

EBS volume metrics describe how your EBS volumes are used. They include read and write operations per second (IOPS) and read and write throughput. AWS Compute Optimizer uses these metrics to determine whether your EBS volumes are optimized.

For example, if a volume's IOPS or throughput regularly approaches its provisioned limits, the volume might be under-provisioned. Conversely, if its observed IOPS and throughput stay well below what is provisioned, it might be over-provisioned. Based on these metrics, AWS Compute Optimizer recommends how to optimize your EBS volumes.
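In API terms, each EBS recommendation pairs the volume's `currentConfiguration` with ranked `volumeRecommendationOptions`. Below is a minimal sketch that reports which settings the top-ranked option would change. It assumes the response shape of `get_ebs_volume_recommendations`; the field names come from our reading of the API reference, and `ebs_change` is a hypothetical helper.

```python
from typing import Optional


def ebs_change(recommendation: dict) -> Optional[dict]:
    """Return {field: (current, proposed)} for settings the top option changes.

    `recommendation` is one element of `volumeRecommendations`. Returns None
    when the volume is already optimized or no options are offered.
    """
    options = recommendation.get("volumeRecommendationOptions", [])
    if recommendation.get("finding") == "Optimized" or not options:
        return None
    best = min(options, key=lambda o: o["rank"])  # rank 1 is the top option
    current = recommendation["currentConfiguration"]
    proposed = best["configuration"]
    fields = ("volumeType", "volumeSize",
              "volumeBaselineIOPS", "volumeBaselineThroughput")
    return {
        field: (current.get(field), proposed.get(field))
        for field in fields
        if current.get(field) != proposed.get(field)
    }
```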

Lambda Function Metrics

AWS Compute Optimizer analyzes several metrics related to Lambda functions, including invocation count, error count, duration, and more. By doing so, it can identify potential performance issues and provide recommendations on how to optimize your Lambda functions.

For instance, if a function runs longer than it should because it lacks resources, AWS Compute Optimizer may recommend increasing its memory size, which also increases the CPU allocated to the function. If a function is configured with more memory than it needs, Compute Optimizer could suggest reducing its memory allocation.
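For Lambda, Compute Optimizer's recommendation is a memory size. Below is a minimal sketch that extracts the current and recommended sizes. It assumes each item comes from the `lambdaFunctionRecommendations` list returned by `get_lambda_function_recommendations`; the helper name `lambda_memory_change` is hypothetical.

```python
from typing import Optional


def lambda_memory_change(recommendation: dict) -> Optional[tuple[int, int]]:
    """Return (current_mb, recommended_mb) for a NotOptimized function, else None."""
    if recommendation.get("finding") != "NotOptimized":
        return None
    options = recommendation.get("memorySizeRecommendationOptions", [])
    if not options:
        return None
    best = min(options, key=lambda o: o["rank"])  # rank 1 is the top option
    return recommendation["currentMemorySize"], best["memorySize"]
```

The `findingReasonCodes` on the same recommendation (for example, `MemoryOverprovisioned`) say which direction the change goes.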

Metrics for Amazon ECS Services on Fargate

If you’re using Amazon Elastic Container Service (ECS) on Fargate, AWS Compute Optimizer can analyze metrics like CPU utilization, memory utilization, and network bytes. By analyzing these metrics, the service can suggest ways to optimize your container configurations.

For example, if your containers consistently use less CPU or memory than allocated, AWS Compute Optimizer could recommend reducing their resource allocations. This can lower your AWS costs, typically without hurting your application's performance.
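A recommendation for an ECS service on Fargate can be read as a percentage change in CPU and memory. Below is a minimal sketch that assumes the response shape of `get_ecs_service_recommendations`. CPU is expressed in Fargate CPU units (1024 units = 1 vCPU) and memory in MiB, as in ECS task definitions. `ecs_rightsizing` is a hypothetical helper.

```python
def ecs_rightsizing(recommendation: dict) -> list[dict]:
    """Describe the CPU and memory change proposed by each option for one service.

    `recommendation` is one element of `ecsServiceRecommendations`.
    Negative percentages mean the option allocates less than today.
    """
    current = recommendation["currentServiceConfiguration"]
    changes = []
    for option in recommendation.get("serviceRecommendationOptions", []):
        changes.append({
            "cpu": (current["cpu"], option["cpu"]),
            "memory": (current["memory"], option["memory"]),
            "cpu_change_pct": round(
                100 * (option["cpu"] - current["cpu"]) / current["cpu"], 1),
            "memory_change_pct": round(
                100 * (option["memory"] - current["memory"]) / current["memory"], 1),
        })
    return changes
```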

Metrics for Commercial Software Licenses

AWS Compute Optimizer can also analyze how your commercial software licenses are used, such as which features of a licensed product like Microsoft SQL Server your workload actually relies on, along with license cost.

By analyzing this data, AWS Compute Optimizer can help ensure you're not overpaying for licenses you're not fully using, for example by recommending a lower-cost edition when the workload doesn't use the features of the edition it currently runs.
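Below is a minimal sketch that lists license recommendations proposing a different edition. It assumes the response shape of `get_license_recommendations`, in particular the field names `currentLicenseConfiguration`, `licenseEdition`, and `licenseRecommendationOptions`, which reflect our reading of the API reference and should be checked against it. `license_downgrades` is a hypothetical helper.

```python
def license_downgrades(recommendations: list[dict]) -> list[tuple[str, str, str]]:
    """Return (resourceArn, current edition, top recommended edition) tuples.

    Only NotOptimized recommendations that offer at least one option are included.
    """
    results = []
    for rec in recommendations:
        options = rec.get("licenseRecommendationOptions", [])
        if rec.get("finding") != "NotOptimized" or not options:
            continue
        best = min(options, key=lambda o: o["rank"])  # rank 1 is the top option
        current = rec["currentLicenseConfiguration"]["licenseEdition"]
        results.append((rec["resourceArn"], current, best["licenseEdition"]))
    return results
```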

Using AWS Compute Optimizer to Get Recommendations for EC2 Instances

‘Finding’ Classifications

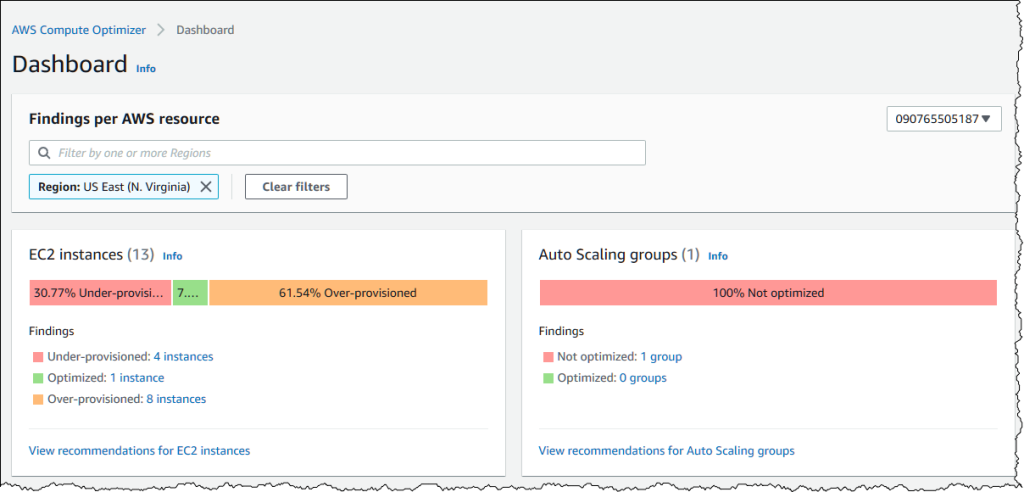

AWS Compute Optimizer classifies EC2 instances into three categories based on their performance during the analyzed period:

- Under-provisioned: Instances fall into this category when at least one specification (CPU, memory, network) fails to meet the workload’s performance requirements. This under-provisioning can lead to poor application performance.

- Over-provisioned: Instances are labeled over-provisioned when specifications exceed what is necessary for the workload. Such instances can be resized to more cost-effective options without compromising performance.

- Optimized: Instances are classified as optimized when all specifications meet the workload's requirements and none is over-provisioned. For optimized instances, Compute Optimizer may still recommend a newer generation instance type.
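The API can filter EC2 recommendations by these findings on the server side, and the results can then be tallied locally. Below is a minimal sketch; `finding_filter` and `summarize_findings` are hypothetical helpers. The filter shape (`name` of `"Finding"` with a list of `values`) reflects our reading of the `get_ec2_instance_recommendations` request syntax.

```python
from collections import Counter

# The three EC2 findings described above, spelled as the API spells them.
EC2_FINDINGS = ("Underprovisioned", "Overprovisioned", "Optimized")


def finding_filter(*findings: str) -> list[dict]:
    """Build a `filters` argument that limits results to the given findings."""
    unknown = [f for f in findings if f not in EC2_FINDINGS]
    if unknown:
        raise ValueError(f"unknown finding(s): {unknown}")
    return [{"name": "Finding", "values": list(findings)}]


def summarize_findings(recommendations: list[dict]) -> Counter:
    """Count instance recommendations per finding."""
    return Counter(rec["finding"] for rec in recommendations)
```

With a boto3 client, this might be used as `client.get_ec2_instance_recommendations(filters=finding_filter("Overprovisioned"))`.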

‘Finding’ Reasons

Finding reasons explain why an instance is under-provisioned or over-provisioned:

- CPU: Over-provisioning is identified when the CPU configuration can be reduced without affecting workload performance, and under-provisioning is flagged when the CPU doesn't meet performance needs.

- Memory: As with CPU, memory configurations are evaluated for over- and under-provisioning.

- GPU: GPU and GPU memory configurations are evaluated for over- and under-provisioning.

- EBS Throughput and IOPS: The optimizer checks whether EBS throughput and IOPS (input/output operations per second) configurations are more than necessary (over-provisioned) or insufficient (under-provisioned).

- Network Bandwidth: Network configurations are evaluated to determine whether they can be downsized (over-provisioned) or need to be increased (under-provisioned).
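In the API, finding reasons arrive as codes that combine a specification with a direction, such as `CPUOverprovisioned` or `EBSIOPSUnderprovisioned`. Below is a minimal sketch that groups such codes by direction. It assumes they all end in `Overprovisioned` or `Underprovisioned`, which holds for the codes we are aware of. `parse_reasons` is a hypothetical helper.

```python
import re

# "<Spec>Overprovisioned" or "<Spec>Underprovisioned", e.g. "MemoryUnderprovisioned".
_REASON = re.compile(r"^(?P<spec>.+?)(?P<direction>Over|Under)provisioned$")


def parse_reasons(codes: list[str]) -> dict[str, list[str]]:
    """Group finding reason codes by direction: {"over": [...], "under": [...]}.

    Codes that don't match the pattern are ignored.
    """
    grouped: dict[str, list[str]] = {"over": [], "under": []}
    for code in codes:
        match = _REASON.match(code)
        if match:
            grouped[match["direction"].lower()].append(match["spec"])
    return grouped
```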

Migration Effort

Migration effort refers to the level of effort required to move from the current instance type to the recommended one. This effort varies depending on the workload and the architecture of the recommended instance.

For example, migration effort is medium when an AWS Graviton instance type is recommended but no workload type is inferred. It is low when Amazon EMR is the inferred workload type and an AWS Graviton instance type is recommended, and very low when the current and recommended instance types share the same CPU architecture.
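The three migration-effort rules above can be written as a small decision function. This is a minimal sketch that encodes only those three cases and returns `None` for anything else. The function name is hypothetical, and the string `"AmazonEmr"` is assumed to be how the API names the Amazon EMR inferred workload type.

```python
from typing import Optional


def migration_effort(same_cpu_architecture: bool,
                     graviton_recommended: bool,
                     inferred_workloads: list[str]) -> Optional[str]:
    """Return the migration effort for the three cases described above, else None."""
    if same_cpu_architecture:
        return "VeryLow"
    if graviton_recommended and "AmazonEmr" in inferred_workloads:
        return "Low"
    if graviton_recommended and not inferred_workloads:
        return "Medium"
    # Any other combination is not covered by the rules in the text.
    return None
```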

Estimated Monthly Savings and Savings Opportunity

AWS Compute Optimizer estimates monthly savings from migrating workloads to recommended instance types. These savings are calculated under different pricing models:

- Savings Plans and Reserved Instances: When savings estimation mode is enabled, savings are estimated after applying the discounts from these purchase options.

- On-Demand: The default mode, which estimates the savings from switching to the recommended instances at On-Demand pricing.

- Savings Opportunity (%): This metric indicates the percentage difference in cost between the current and recommended instance types, factoring in discounts if the savings estimation mode is activated.
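To illustrate the arithmetic behind these two numbers, here is a minimal sketch computed from hourly prices. It is not how Compute Optimizer itself prices recommendations. The 730-hour month and the function name `estimate_savings` are assumptions. Passing On-Demand rates mimics the default mode, and passing discounted effective rates mimics savings estimation mode.

```python
HOURS_PER_MONTH = 730  # assumption: an average month of roughly 730 hours


def estimate_savings(current_hourly: float,
                     recommended_hourly: float) -> tuple[float, float]:
    """Return (estimated monthly savings, savings opportunity in percent)."""
    hourly_difference = current_hourly - recommended_hourly
    monthly_savings = hourly_difference * HOURS_PER_MONTH
    # Percentage difference relative to what the current instance costs.
    savings_pct = 100 * hourly_difference / current_hourly
    return round(monthly_savings, 2), round(savings_pct, 1)
```

For example, with hypothetical rates of $0.192 per hour for the current instance and $0.096 per hour for the recommended one, the function returns about $70.08 per month and a 50.0% savings opportunity.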

Performance Risk

Performance risk measures the likelihood of a recommended instance type failing to meet the workload’s resource needs.

Compute Optimizer calculates this risk for each specification (CPU, memory, EBS throughput, and so on) of the recommended instance type, and the overall risk is the highest of these individual scores. Recommendations range from very low performance risk, meaning the recommended type is likely to meet the workload's needs, to very high, meaning you should evaluate it carefully before migrating.
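The "highest individual score" rule is a simple maximum. Below is a minimal sketch that also reports which specification drives the overall risk. The per-specification scores are hypothetical inputs on a 0 to 4 scale, matching the range the API uses for `performanceRisk`, and `overall_performance_risk` is a hypothetical helper.

```python
def overall_performance_risk(spec_risks: dict[str, float]) -> tuple[str, float]:
    """Return the specification with the highest risk score, and that score.

    `spec_risks` maps a specification (e.g. "cpu", "memory") to a risk score,
    where 0 is the lowest risk. Raises ValueError if the mapping is empty.
    """
    if not spec_risks:
        raise ValueError("need at least one specification score")
    spec = max(spec_risks, key=spec_risks.get)
    return spec, spec_risks[spec]
```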

Viewing EC2 Instance Recommendations and Instance Details

To view EC2 instance recommendations:

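- Open the Compute Optimizer console and choose EC2 instances in the navigation pane.

- The recommendations page lists your current instances, their finding classifications, and the recommended instance specifications.

- You can change preferences such as the CPU architecture to see the impact of other instance types, filter recommendations by criteria such as AWS Region and finding, and, from an organization's management account, view recommendations for instances in member accounts.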

For detailed information on a specific instance:

- Choose an instance on the recommendations page to open its details page.

- The page lists optimization recommendations, current instance specifications, performance risks, and utilization metric graphs.

- Options include viewing the impact of AWS Graviton-based instances and comparing current and recommended instance utilization.
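The same overview can be assembled from the API as one summary row per instance. Below is a minimal sketch. It assumes each item comes from the `instanceRecommendations` list returned by `get_ec2_instance_recommendations`, with the top option identified by `rank` 1. `report_rows` is a hypothetical helper.

```python
def report_rows(recommendations: list[dict]) -> list[tuple]:
    """One summary row per EC2 instance recommendation.

    Columns: name (or ARN), current type, finding, top-ranked recommended type,
    its performance risk, and its estimated monthly savings (None when absent).
    """
    rows = []
    for rec in recommendations:
        options = rec.get("recommendationOptions", [])
        best = min(options, key=lambda o: o["rank"]) if options else {}
        savings = best.get("savingsOpportunity") or {}
        monthly = (savings.get("estimatedMonthlySavings") or {}).get("value")
        rows.append((
            rec.get("instanceName") or rec["instanceArn"],
            rec["currentInstanceType"],
            rec["finding"],
            best.get("instanceType"),
            best.get("performanceRisk"),
            monthly,
        ))
    return rows
```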

Learn more in our detailed guide to AWS cost savings

Automating AWS Cost Savings with Spot

While AWS offers Savings Plans, Reserved Instances (RIs), and Spot Instances for reducing EC2 costs, each has inherent challenges:

- Spot Instances can be up to 90% less expensive than On-Demand Instances. However, because they run on spare capacity, AWS can reclaim them with just a two-minute warning, which makes them less than ideal for production and mission-critical workloads.

- AWS Savings Plans and RIs can deliver up to 72% cost savings, but they create financial lock-in for 1 or 3 years and, if not fully utilized, can end up wasting money instead of saving it.
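Why under-utilization can erase commitment savings comes down to simple arithmetic. Below is a minimal sketch under a simplified model: a flat discount applied to the full committed capacity, compared against paying On-Demand rates for only the capacity actually used. The function names are hypothetical. At the maximum 72% discount, the commitment breaks even at 28% utilization, and any lower utilization wastes money.

```python
def break_even_utilization(discount: float) -> float:
    """Share of the commitment you must use before it beats On-Demand pricing."""
    return 1.0 - discount


def net_commitment_savings(on_demand_value: float, discount: float,
                           utilization: float) -> float:
    """Savings of a commitment versus paying On-Demand for only what was used.

    on_demand_value: On-Demand price of the full committed capacity.
    discount: commitment discount as a fraction (e.g. 0.72 for 72%).
    utilization: fraction of the committed capacity actually used (0 to 1).
    A negative result means the commitment cost more than On-Demand would have.
    """
    commitment_cost = on_demand_value * (1.0 - discount)
    on_demand_cost_of_usage = on_demand_value * utilization
    return on_demand_cost_of_usage - commitment_cost
```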

Spot addresses these challenges, allowing you to reliably use spot instances for production and mission-critical workloads as well as enjoy the long-term pricing of RIs without the risks of long-term commitment.

Key features of Spot’s cloud financial management suite include:

- Predictive rebalancing: identifies Spot Instance interruptions up to an hour in advance, allowing for graceful draining and workload placement on new instances, whether Spot, Reserved, or On-Demand.

- Advanced auto scaling: simplifies defining scaling policies and identifying peak times, and scales automatically so the right capacity is in place in advance.

- Optimized cost and performance: keeps your cluster running at the best possible performance while using the optimal mix of On-Demand, Spot, and Reserved Instances.

- Enterprise-grade SLAs: constantly monitors and predicts Spot Instance behavior, capacity trends, pricing, and interruption rates, and acts in advance to add capacity whenever there is a risk of interruption.

- Serverless containers: allows you to run your Kubernetes and container workloads on fully utilized and highly available compute infrastructure while leveraging Spot Instances, Savings Plans, and RIs for extreme cost savings.

- Intelligent and flexible utilization of AWS Savings Plans and RIs: ensures that unused reserved capacity is used before new Spot Instances are launched, driving maximum cost-efficiency. Additionally, RIs and Savings Plans are fully managed, from planning and procurement to offloading unused capacity when it is no longer needed, so your long-term cloud commitments always generate maximum savings.

- Visibility and recommendations: lets you visualize all your cloud spend and drill down by a broad range of criteria, from tags, accounts, and services to namespaces, annotations, and labels for containerized workloads, and provides cost reduction recommendations that can be implemented in a few clicks.

Learn more about Spot’s cloud financial management solutions