The DevOps philosophy, which integrates development and operations teams, can be applied to cloud infrastructure running on Amazon Web Services (AWS). It emphasizes agility, continuous integration (CI), continuous delivery (CD), and automation. The AWS cloud platform provides scalable, flexible resources that work well with automation tools, helping teams deploy software faster and with less manual effort.

Adopting DevOps on AWS lets organizations accelerate their software development and deployment pipeline with AWS services and tools such as AWS CodeCommit, AWS CodePipeline, and AWS CodeBuild. By integrating these tools, organizations can manage their applications and services with greater precision and control.

This is part of a series of articles about CI/CD

In this article:

- Benefits of AWS for DevOps Teams

- Top 5 AWS Native DevOps Tools

- Reference Architecture: DevOps Monitoring Dashboard Solution on AWS

- AWS DevOps Best Practices

Benefits of AWS for DevOps Teams

AWS has several characteristics that are useful for implementing DevOps.

Fully Managed Services

AWS offers a range of fully managed services that reduce the overhead associated with setting up and managing infrastructure, allowing teams to focus on developing software rather than managing server configurations. Services like AWS Elastic Beanstalk and AWS Fargate provide environments where users can deploy applications without handling the underlying servers. These services also come with built-in monitoring and management capabilities.

Programmability

AWS is highly programmable, providing APIs and CLI tools for almost every service it offers. This allows DevOps teams to automate processes using scripts or code, reducing manual efforts and potential human error. Teams can automate their cloud infrastructure provisioning, application deployment, and operations tasks by writing code that interfaces directly with AWS resources. Configuration management and orchestration tools like AWS CloudFormation and OpsWorks help automate the deployment of server and software configurations.

Large Partner Ecosystem

AWS supports a large partner ecosystem with third-party solutions that are pre-integrated to work with AWS services. These cover many aspects of the software development lifecycle, such as security, monitoring, and performance optimization. AWS has partnerships with popular tools and vendors such as Jenkins, GitLab, and Atlassian, which makes it easier to integrate them with AWS and extend their functionality.

Related content: Read our guide to CI/CD vs DevOps

Top 5 AWS Native DevOps Tools

Here are some of the native AWS tools that support DevOps practices.

1. AWS CodePipeline

AWS CodePipeline automates the steps required to release software changes continuously. You model your release process as a workflow of stages, such as build, test, and deploy, and CodePipeline runs that workflow whenever a change is made. The service acts as an orchestration layer that connects version control, compilation, testing, and deployment.

CodePipeline integrates with AWS CodeBuild for the build stage, AWS Lambda for running custom scripts, and AWS Elastic Beanstalk for deployments, enabling a seamless DevOps flow from source control to production.
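To make the stage model concrete, here is a minimal Python sketch that builds a three-stage pipeline declaration (CodeCommit source, CodeBuild build, Elastic Beanstalk deploy) as a plain dictionary. It assumes the JSON shape accepted by `aws codepipeline create-pipeline --cli-input-json`; the helper functions are hypothetical, not part of any AWS SDK, and every name, ARN, and bucket is a placeholder.

```python
import json


def _action(name, category, provider, configuration, inputs=(), outputs=()):
    """Build one pipeline action; all providers here are AWS-owned, version "1"."""
    return {
        "name": name,
        "actionTypeId": {
            "category": category,
            "owner": "AWS",
            "provider": provider,
            "version": "1",
        },
        "configuration": configuration,
        "inputArtifacts": [{"name": a} for a in inputs],
        "outputArtifacts": [{"name": a} for a in outputs],
        "runOrder": 1,
    }


def build_pipeline_declaration(name, role_arn, artifact_bucket, repo, branch,
                               build_project, eb_app, eb_env):
    """Hypothetical helper: returns a dict shaped like create-pipeline input."""
    return {
        "pipeline": {
            "name": name,
            "roleArn": role_arn,
            # Artifacts passed between stages are stored in this S3 bucket
            "artifactStore": {"type": "S3", "location": artifact_bucket},
            "stages": [
                {"name": "Source", "actions": [_action(
                    "Source", "Source", "CodeCommit",
                    {"RepositoryName": repo, "BranchName": branch},
                    outputs=["SourceOutput"])]},
                {"name": "Build", "actions": [_action(
                    "Build", "Build", "CodeBuild",
                    {"ProjectName": build_project},
                    inputs=["SourceOutput"], outputs=["BuildOutput"])]},
                {"name": "Deploy", "actions": [_action(
                    "Deploy", "Deploy", "ElasticBeanstalk",
                    {"ApplicationName": eb_app, "EnvironmentName": eb_env},
                    inputs=["BuildOutput"])]},
            ],
        }
    }


if __name__ == "__main__":
    declaration = build_pipeline_declaration(
        "demo-pipeline", "arn:aws:iam::123456789012:role/demo-pipeline-role",
        "demo-artifact-bucket", "demo-repo", "main",
        "demo-build", "demo-app", "demo-env")
    print(json.dumps(declaration, indent=2))
```

Saving the printed JSON to a file and passing it to the CLI is one way to create the pipeline; many teams express the same structure in CloudFormation or CDK instead, so it lives under version control with the rest of their infrastructure.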

2. AWS CodeBuild

AWS CodeBuild is a fully managed build service that compiles source code, runs tests, and produces software packages that are ready to deploy. It centralizes the build process in the cloud, eliminating the need to provision and maintain separate build servers. The service scales automatically and runs multiple builds concurrently, so builds don't sit waiting in a queue.

CodeBuild also integrates with AWS CodePipeline; together they provide a continuous integration and delivery (CI/CD) solution that automates the software release process. CodeBuild offers prepackaged build environments for common languages and also supports custom build environments, so teams can start new projects with minimal setup.

3. AWS CodeDeploy

AWS CodeDeploy is a service that automates application deployments to compute services such as Amazon EC2, AWS Lambda, and Amazon ECS, as well as to on-premises servers. Automating deployments lets DevOps teams deliver new features and updates rapidly and reliably, with minimal downtime.

CodeDeploy supports several deployment strategies, including in-place and blue/green deployments. In-place deployments update the instances in a deployment group with the latest application revision. Blue/green deployments shift traffic from the current environment to an identical environment running the new revision, which minimizes downtime and makes rollback easy, because the previous environment is still available. Integration with services like Amazon CloudWatch helps teams monitor the deployment process and confirm successful rollouts.
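The blue/green idea can be illustrated with a toy model in Python. This is not how CodeDeploy is implemented or invoked; it only assumes a router that sends all traffic to one of two environments, and it shows why rollback is cheap: the old environment keeps running, so reverting is just another traffic switch. The class names and the `health_check` callback are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Environment:
    name: str
    version: Optional[str] = None
    healthy: bool = False


class BlueGreenRouter:
    """Toy model: all traffic goes to exactly one of two environments."""

    def __init__(self, live_version: str):
        self.envs = {
            "blue": Environment("blue", live_version, healthy=True),
            "green": Environment("green"),
        }
        self.live = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def serving_version(self) -> Optional[str]:
        return self.envs[self.live].version

    def deploy(self, version: str, health_check: Callable[[str], bool]) -> bool:
        """Install on the idle environment; switch traffic only if it is healthy."""
        target = self.envs[self.idle]
        target.version = version
        target.healthy = health_check(version)
        if not target.healthy:
            return False  # traffic never moved, so users keep the old version
        self.live = target.name  # the traffic switch
        return True

    def rollback(self) -> bool:
        """The previous environment is still running, so rollback is one more switch."""
        if not self.envs[self.idle].healthy:
            return False  # never switch traffic onto an unhealthy environment
        self.live = self.idle
        return True
```

In CodeDeploy itself, the "switch" is performed by rerouting a load balancer (for EC2) or shifting traffic between versions (for Lambda and ECS), but the rollback argument is the same.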

4. AWS CodeStar

AWS CodeStar provides a unified user interface for managing software development activities. It simplifies the setup of a continuous delivery toolchain, helping teams start projects quickly by provisioning the necessary resources and linking them together. Note that AWS discontinued support for creating and viewing CodeStar projects on July 31, 2024.

CodeStar supports multiple programming languages and frameworks, offering pre-configured project templates that incorporate services like CodeCommit, CodeBuild, CodeDeploy, and CodePipeline. It also includes built-in collaboration features such as team wiki, issue tracking, and project management dashboards.

5. AWS Cloud Development Kit

The AWS Cloud Development Kit (CDK) is an open-source software development framework that enables DevOps teams to define cloud infrastructure using familiar programming languages like TypeScript, Python, Java, and C#. This approach, known as Infrastructure as Code (IaC), enables the provisioning of AWS resources programmatically.

CDK builds on AWS CloudFormation: a CDK app is synthesized into CloudFormation templates, and CDK provides high-level components called constructs that simplify cloud resource configuration. By using CDK, developers can work with AWS services while writing less boilerplate code.
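As a rough illustration of what synthesis means, the Python sketch below mimics a high-level construct that expands into a raw CloudFormation resource with versioning, encryption, and a lifecycle rule filled in. `Construct`, `VersionedBucket`, and `synth` are hypothetical stand-ins, not the CDK API; with the real `aws_cdk` Python library you would write something like `s3.Bucket(self, "ArtifactBucket", versioned=True)` and run `cdk synth`.

```python
import json


class Construct:
    """Hypothetical stand-in for a CDK construct."""

    def resources(self) -> dict:
        raise NotImplementedError


class VersionedBucket(Construct):
    """One line of intent that expands into a fully configured S3 bucket."""

    def __init__(self, logical_id: str, noncurrent_days: int = 30):
        self.logical_id = logical_id
        self.noncurrent_days = noncurrent_days

    def resources(self) -> dict:
        return {
            self.logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"},
                    "BucketEncryption": {
                        "ServerSideEncryptionConfiguration": [
                            {"ServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
                        ]
                    },
                    "LifecycleConfiguration": {
                        "Rules": [{
                            "Id": "ExpireOldVersions",
                            "Status": "Enabled",
                            "NoncurrentVersionExpiration": {
                                "NoncurrentDays": self.noncurrent_days
                            },
                        }]
                    },
                },
            }
        }


def synth(constructs) -> dict:
    """Merge every construct's resources into one CloudFormation template."""
    resources = {}
    for construct in constructs:
        for logical_id, body in construct.resources().items():
            if logical_id in resources:
                raise ValueError(f"duplicate logical ID: {logical_id}")
            resources[logical_id] = body
    return {"AWSTemplateFormatVersion": "2010-09-09", "Resources": resources}


if __name__ == "__main__":
    print(json.dumps(synth([VersionedBucket("ArtifactBucket")]), indent=2))
```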

Reference Architecture: DevOps Monitoring Dashboard Solution on AWS

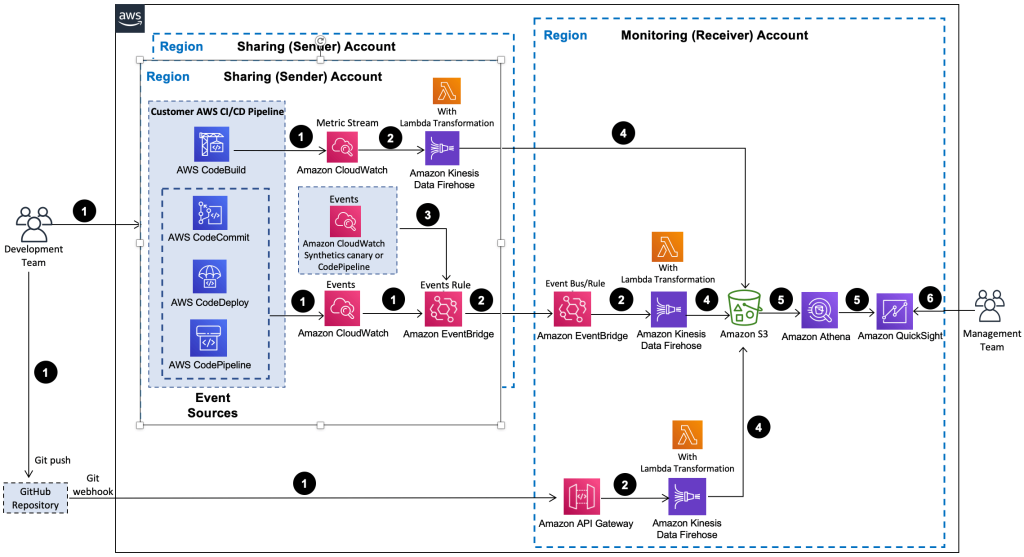

The DevOps Monitoring Dashboard on AWS is a reference architecture from AWS that illustrates how DevOps teams can automate the monitoring and visualization of CI/CD metrics. It lets organizations track and measure the activities of their development teams, helping DevOps leaders make data-driven decisions to improve the software delivery process.

Key Features and Workflow

1. Data Ingestion and Event Detection

The solution starts with the ingestion of events from various sources within the CI/CD pipeline. When a developer initiates an activity such as pushing a code change to AWS CodeCommit or deploying an application using AWS CodeDeploy, these activities generate events. The system also supports multi-account and multi-region configurations, allowing for events to be captured from multiple AWS accounts and regions.

Amazon EventBridge rules detect these events based on predefined event patterns and send the event data to an Amazon Kinesis Data Firehose delivery stream. For GitHub repositories, git push events are captured through an Amazon API Gateway endpoint.
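An EventBridge rule matches events against a JSON event pattern. The sketch below shows a plausible pattern for branch pushes in CodeCommit, based on the documented `CodeCommit Repository State Change` event, together with a deliberately simplified matcher that handles only exact-value lists and nested fields. The solution's own patterns may differ, and real EventBridge matching supports many more operators (prefix, numeric, exists, and others).

```python
# Plausible pattern: a branch created or updated in any CodeCommit repository
CODECOMMIT_PUSH_PATTERN = {
    "source": ["aws.codecommit"],
    "detail-type": ["CodeCommit Repository State Change"],
    "detail": {
        "event": ["referenceCreated", "referenceUpdated"],
        "referenceType": ["branch"],
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified EventBridge matching: exact values and nested fields only."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        value = event[key]
        if isinstance(expected, dict):
            # Nested pattern: recurse into the matching sub-object
            if not isinstance(value, dict) or not matches(expected, value):
                return False
        elif value not in expected:
            # A list in the pattern means "any of these values"
            return False
    return True
```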

2. Data Transformation and Storage

An AWS Lambda function transforms the event data and extracts the relevant metrics, which are then stored in a central Amazon S3 bucket. This bucket acts as the main repository for all collected metrics.
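When Kinesis Data Firehose invokes a Lambda function for data transformation, the function receives base64-encoded records and must return each `recordId` with a `result` of `Ok`, `Dropped`, or `ProcessingFailed`. Below is a minimal Python sketch of such a function. Which fields count as relevant metrics is an assumption here: `KEPT_FIELDS` and the `repository` field are hypothetical, not the solution's actual schema.

```python
import base64
import json

# Hypothetical choice of top-level event fields to keep
KEPT_FIELDS = ("account", "region", "time", "source", "detail-type")


def lambda_handler(event, context):
    """Firehose transformation: decode, trim to selected fields, re-encode."""
    output = []
    for record in event["records"]:
        try:
            payload = json.loads(base64.b64decode(record["data"]))
            metric = {k: payload.get(k) for k in KEPT_FIELDS}
            metric["repository"] = payload.get("detail", {}).get("repositoryName")
            # Newline-delimited JSON keeps records separable once concatenated in S3
            line = json.dumps(metric) + "\n"
            data = base64.b64encode(line.encode("utf-8")).decode("utf-8")
            output.append({"recordId": record["recordId"], "result": "Ok", "data": data})
        except (ValueError, KeyError, AttributeError):
            # Return the original bytes flagged as failed instead of dropping them silently
            output.append({"recordId": record["recordId"],
                           "result": "ProcessingFailed",
                           "data": record["data"]})
    return {"records": output}
```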

3. Data Analysis and Visualization

The stored data is registered as tables in an Amazon Athena database, and Athena queries it to produce results for visualization. Amazon QuickSight uses these query results to build detailed dashboards that present key DevOps metrics such as mean time to recovery (MTTR), change failure rate, deployment frequency, build activity, and pipeline activity. These metrics help teams assess the performance and efficiency of their development process.
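The arithmetic behind three of these metrics is simple. In the solution the calculations happen in the query layer; the Python sketch below only illustrates them under simplified, assumed definitions: deployment frequency as deployments per day, change failure rate as the share of failed deployments, and MTTR as the average time from a failed deployment to service restoration. The `Deployment` record type is hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Deployment:
    finished_at: datetime
    succeeded: bool
    restored_at: Optional[datetime] = None  # when service recovered after a failure


def deployment_frequency(deployments: List[Deployment], days: int) -> float:
    """Deployments per day over the observation window."""
    return len(deployments) / days


def change_failure_rate(deployments: List[Deployment]) -> float:
    """Share of deployments that failed."""
    if not deployments:
        return 0.0
    return sum(1 for d in deployments if not d.succeeded) / len(deployments)


def mean_time_to_recovery(deployments: List[Deployment]) -> Optional[timedelta]:
    """Average time from a failed deployment to restoration; None if no recoveries."""
    durations = [d.restored_at - d.finished_at
                 for d in deployments
                 if not d.succeeded and d.restored_at is not None]
    if not durations:
        return None
    return sum(durations, timedelta()) / len(durations)
```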

Components Deployed

Deploying this solution with default parameters involves several key AWS services configured according to best practices for security, availability, and cost optimization:

- Amazon EventBridge for event detection.

- Amazon Kinesis Data Firehose for data ingestion and delivery.

- AWS Lambda for data transformation.

- Amazon S3 for data storage.

- Amazon Athena for data querying.

- Amazon QuickSight for data visualization.

Additionally, the solution supports integration with other visualization tools like Tableau for users who prefer different interfaces.

AWS DevOps Best Practices

Here are some of the ways that organizations can ensure the most effective use of AWS infrastructure and services for their DevOps implementation.

Use Infrastructure as Code (IaC)

Implementing Infrastructure as Code (IaC) allows developers to manage and provision their infrastructure through code, eliminating the need for manual configurations. Using tools like AWS CloudFormation and AWS CDK, DevOps teams can define cloud resources in a template or programming language.

This practice ensures consistency across different environments and enables version control, helping track and manage changes. IaC reduces configuration drift, minimizes errors, and simplifies the deployment process, making it easier to replicate environments and recover from failures.
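To illustrate what configuration drift means, here is a small Python sketch that compares the desired configuration declared in code with the configuration observed on a live resource and reports every difference. It is a toy; CloudFormation provides drift detection natively (for example, `aws cloudformation detect-stack-drift`), and the property names in the usage example are made up.

```python
def detect_drift(desired: dict, actual: dict, path: str = "") -> list:
    """Return (path, desired_value, actual_value) for every mismatch."""
    drift = []
    for key in sorted(set(desired) | set(actual)):
        here = f"{path}.{key}" if path else key
        want, have = desired.get(key), actual.get(key)
        if isinstance(want, dict) and isinstance(have, dict):
            drift.extend(detect_drift(want, have, here))  # compare nested settings
        elif want != have:
            # Missing keys show up as None on the side that lacks them
            drift.append((here, want, have))
    return drift


if __name__ == "__main__":
    desired = {"InstanceType": "t3.micro", "Tags": {"env": "prod"}}
    actual = {"InstanceType": "t3.large", "Tags": {"env": "prod", "owner": "alice"}}
    for item in detect_drift(desired, actual):
        print(item)
```

Because the desired state lives in version control, fixing drift is usually a matter of redeploying the template rather than hand-editing the resource.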

Deploy Microservices with AWS

A microservices architecture breaks down applications into smaller, independent services that can be developed, deployed, and scaled individually. AWS provides several services that support microservices deployment, such as Amazon ECS, AWS Fargate, and AWS Lambda.

These services offer managed environments that handle orchestration, scaling, and resource management, reducing operational complexity. By leveraging these AWS services, organizations can improve the agility and scalability of their applications, enabling faster iteration and deployment of new features.

Implement Comprehensive Monitoring and Logging

Regular monitoring and logging are essential for maintaining the health, performance, and security of applications. AWS offers a suite of tools for this purpose, including:

- Amazon CloudWatch provides real-time monitoring and alerting for AWS resources and applications, enabling users to detect and respond to issues quickly.

- AWS X-Ray helps teams analyze and debug distributed applications, providing insights into the performance of microservices.

- AWS CloudTrail records AWS API calls, assisting in security analysis, resource change tracking, and compliance auditing.
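One way to get application metrics into CloudWatch without extra API calls is the CloudWatch embedded metric format (EMF), a structured JSON log line that CloudWatch Logs turns into a metric. The Python sketch below builds such a line; the namespace, the `Service` dimension, and the metric name are hypothetical. In AWS Lambda, printing the line to standard output is enough to send it to CloudWatch Logs.

```python
import json
import time


def emf_log_line(namespace: str, service: str, metric_name: str,
                 value: float, unit: str = "Milliseconds") -> str:
    """Build one CloudWatch embedded-metric-format (EMF) log line."""
    return json.dumps({
        "_aws": {
            "Timestamp": int(time.time() * 1000),  # epoch milliseconds
            "CloudWatchMetrics": [{
                "Namespace": namespace,
                # Each inner list is one dimension set; values come from top-level keys
                "Dimensions": [["Service"]],
                "Metrics": [{"Name": metric_name, "Unit": unit}],
            }],
        },
        "Service": service,   # dimension value
        metric_name: value,   # metric value
    })


if __name__ == "__main__":
    # In Lambda, stdout goes to CloudWatch Logs, which extracts the metric
    print(emf_log_line("DemoApp", "checkout", "Latency", 123.0))
```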

Apply the AWS Well-Architected Framework

The AWS Well-Architected Framework is a set of best practices to help organizations build secure, high-performing, resilient, and efficient cloud infrastructure. It is organized into six pillars: operational excellence, security, reliability, performance efficiency, cost optimization, and sustainability.

Regularly reviewing the architecture against these pillars helps identify potential risks and areas for improvement. By adhering to these best practices, organizations can improve the quality of their deployments, optimize costs, and ensure applications meet business and technical requirements.

Implement Feedback Mechanisms with Amazon SNS

Feedback mechanisms are important for enabling timely responses to operational events and maintaining system resilience. Amazon Simple Notification Service (SNS) allows organizations to set up notifications for various events within the AWS environment. Using SNS for communication within a DevOps team can improve collaboration and incident response times.

By integrating SNS with other AWS services like CloudWatch, Lambda, and SQS, DevOps teams can automate responses to specific conditions, such as scaling events, security incidents, or application errors. This integration helps create feedback loops that enable the system to adapt to changing conditions and ensure continuous operation.
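A common feedback loop is CloudWatch alarm → SNS topic → Lambda function. When SNS invokes Lambda, each record's `Sns.Message` field carries the alarm notification as a JSON string that includes `AlarmName` and `NewStateValue`. The sketch below decides on a response per alarm; the alarm names and the response mapping are hypothetical, and it only returns the decision rather than calling scaling or deployment APIs.

```python
import json

# Hypothetical mapping from alarm names to automated responses
RESPONSES = {
    "checkout-high-latency": "scale_out",
    "checkout-error-rate": "rollback",
}


def lambda_handler(event, context):
    """Turn CloudWatch alarm notifications delivered by SNS into decisions."""
    decisions = []
    for record in event.get("Records", []):
        # SNS delivers the alarm notification as a JSON string
        alarm = json.loads(record["Sns"]["Message"])
        if alarm.get("NewStateValue") != "ALARM":
            continue  # ignore transitions to OK or INSUFFICIENT_DATA
        name = alarm.get("AlarmName")
        # Unknown alarms fall back to a human
        decisions.append({"alarm": name, "action": RESPONSES.get(name, "page_on_call")})
    return decisions
```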

Build Your DevOps Pipeline with Spot

Spot’s optimization portfolio provides resource optimization solutions that can help make your DevOps pipeline more impactful. Here are some examples of automated actions our users enjoy on their K8s, EKS, ECS, AKS and GKE infrastructure:

- Autoscaling: This single word encompasses multiple procedures, including knowing when to scale up or down, determining what types of instances to spin up, and keeping those instances available for as long as the workload requires. EC2 Auto Scaling groups (ASGs) are an example of rigid, rule-based autoscaling. You might also want to get acquainted with additional K8s autoscaling methods like the Horizontal Pod Autoscaler (HPA) or event-driven autoscaling.

- Automated rightsizing: Recommendations based on actual memory and CPU usage can be automatically applied to certain clusters or workloads

- Default shutdown scheduling: Requested resources can be shut down after regular office hours, unless the developer opts a specific cluster out.

- Automated bin packing: Instead of running nine servers that are each 10% utilized, consolidate those small workloads onto one server. Bin packing can be applied per user or across users, according to your security policies.

- Dynamic storage volume: Your internal developer platform (IDP) should regularly remove idle storage. It’s also recommended to align attached volume size and IOPS with node size to avoid overprovisioning on smaller nodes.

- AI-based predictive rebalancing replaces spot machines before they’re evicted involuntarily due to unavailability.

- Data, network, and application persistence for stateful workloads, either by reattachment or frequent snapshots.

- Dynamic resource blending that is aware of existing commitments (Reserved Instances and Savings Plans), which must be used before purchasing any spot or on-demand machines.

- “Roly-poly” fallback moves your workload to on-demand or existing commitments if there is no spot availability. When spots are once again available, you want to hop back onto them.
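Two of the techniques above have well-known core calculations, sketched here in Python. The first is the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler, `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, which is skipped when the ratio is within a tolerance (0.1 by default); the min/max clamp stands in for the HPA's replica bounds. The second is first-fit decreasing, a standard bin-packing heuristic, applied here to CPU requests in millicores. Neither is presented as Spot's actual implementation.

```python
import math
from typing import List


def hpa_desired_replicas(current_replicas: int, current_metric: float,
                         target_metric: float, tolerance: float = 0.1,
                         min_replicas: int = 1, max_replicas: int = 10) -> int:
    """Kubernetes HPA rule: ceil(current * currentMetric / targetMetric)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target, so skip scaling
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


def first_fit_decreasing(requests: List[int], capacity: int) -> List[List[int]]:
    """Pack workload requests (e.g. CPU millicores) onto equally sized nodes."""
    nodes: List[List[int]] = []
    free: List[int] = []
    for req in sorted(requests, reverse=True):  # place the largest workloads first
        if req > capacity:
            raise ValueError(f"request {req} exceeds node capacity {capacity}")
        for i, room in enumerate(free):
            if req <= room:  # first node with enough room wins
                nodes[i].append(req)
                free[i] -= req
                break
        else:
            nodes.append([req])  # nothing fits, so open a new node
            free.append(capacity - req)
    return nodes
```

With integer millicores, nine workloads requesting 100m each fit on a single 1000m node, which is the nine-servers-at-10% case from the bin-packing bullet.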

To discover what key optimization capabilities your platform can enable in container infrastructures, read our blog post or visit the product page.