What is a Kubernetes CI/CD Pipeline?

Developers use Kubernetes to manage containers across public, hybrid, and private cloud environments. Organizations can also use this open-source platform to handle microservice architectures. You can deploy Kubernetes and containers on many cloud providers.

Speed is essential to contemporary software development. Rapid iterative paradigms have replaced the all-or-nothing approach of waterfall software development. New techniques supporting rapid development and release include continuous delivery, continuous deployment, and continuous integration.

As organizations deploy and manage applications using Kubernetes, their CI/CD pipelines will change. CI/CD needs to adapt to Kubernetes and capitalize on its unique capabilities to make agile development faster and more effective. Kubernetes can:

- Reduce release cycle time by automating steps like testing and deployment and simplifying orchestration.

- Avoid outages by managing autoscaling and automating the healing of failed components.

- Improve utilization for dev, testing, and production environments by running more applications on the same computing resources.

This is part of an extensive series of guides about Kubernetes and a series of articles about CI/CD.

In this article:

- Which Kubernetes Capabilities Can Benefit Your CI/CD Pipeline?

- Monolithic Pipelines vs. Kubernetes Pipelines

- The CI/CD Pipeline Process: How Kubernetes Helps

- Kubernetes Pipeline Example

- Kubernetes CI/CD Best Practices

- Kubernetes Pipeline CD with Spot.io

Which Kubernetes Capabilities Can Benefit Your CI/CD Pipeline?

A successful CI/CD pipeline needs to deliver application updates automatically and quickly. Kubernetes solves many of the typical problems developers encounter during this process. For example:

- Containerizing the code: you package your application in containers together with the libraries and resources it needs, and Kubernetes runs those containers. This prevents compatibility issues and reduces dependence on the underlying infrastructure. Containerized applications are portable between environments and simpler to scale and replicate.

- Orchestrating deployment: running applications in containers does not solve every issue in the CI/CD pipeline on its own. Kubernetes streamlines deployment by taking over many manual tasks, including monitoring application health, scaling, and automating deployments.

- Automating application life cycles: Kubernetes automates application management, which reduces the time and effort developers spend building and deploying applications through the CI/CD pipeline. This lets teams use resources more efficiently and streamline ongoing operations.

Monolithic Pipelines vs. Kubernetes Pipelines

Kubernetes is not just a new way of deploying applications. It will drive new software development patterns and can transform the CI/CD pipeline.

A traditional CI/CD pipeline has the following characteristics:

- The application is monolithic—all components are packaged together in one integrated system.

- A CI server pulls code from all components used in the project and compiles them to create a build.

- The build assumes a specific infrastructure, for example, a specific web server and database.

- The build results in an executable file that you deploy on computing resources, using virtual machines, cloud machine images, or containers.

- In many cases, there is a separate build for development, testing, and production environments.

It is possible to implement a similar process in a Kubernetes environment. For example, you can have a separate development cluster, testing cluster, and production cluster. You then deploy the application separately to each cluster as it moves through the development process. However, this is still a monolithic pipeline.

A pure Kubernetes CI/CD pipeline will behave differently:

- The application is decomposed into microservices, each performing a distinct function. Each microservice is loosely coupled and integrated with the others via APIs.

- Each microservice has a separate release cycle and CI/CD pipeline.

- The application uses only one Kubernetes cluster for development, testing, and production.

- A new version of each microservice is deployed to the cluster alongside the older version.

- Routing is handled by a service mesh or load balancer, which directs users to the most appropriate version of the service.

- At first, while the new version has not yet been tested, only developers can access it. Later, a small subset of users gets the new version (as in a canary deployment). Finally, the routing mechanism directs the entire user base to the new version.

- Roll forward is just a matter of routing from the old version to the new version. Rollback is the opposite—routing back to the older version, still running on the cluster.
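To make these routing stages concrete, here is a minimal Python sketch of a per-service routing rule. It is not the API of any real service mesh or load balancer: `Route`, `pick_version`, `advance`, and `rollback` are hypothetical names. The sketch also assumes that each user is mapped to a stable bucket from 0 to 99, for example from a hash of the user ID.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Route:
    """Hypothetical routing rule for one microservice (not a real mesh API)."""
    stable: str             # old version, still running on the cluster
    candidate: str          # new version, deployed alongside the old one
    candidate_percent: int  # share of regular users sent to the candidate

    def weights(self) -> dict[str, int]:
        return {self.stable: 100 - self.candidate_percent,
                self.candidate: self.candidate_percent}


def pick_version(route: Route, is_developer: bool, user_bucket: int) -> str:
    """Developers always see the candidate; users are split by a stable 0-99 bucket."""
    if is_developer:
        return route.candidate
    return route.candidate if user_bucket < route.candidate_percent else route.stable


def advance(route: Route, step: int) -> Route:
    """Roll forward: shift more traffic to the candidate, capped at 100%."""
    return replace(route, candidate_percent=min(100, route.candidate_percent + step))


def rollback(route: Route) -> Route:
    """Roll back: send every user to the old version again; nothing is redeployed."""
    return replace(route, candidate_percent=0)
```

Both versions keep running throughout, so a rollback changes only the routing weights. Nothing is rebuilt or redeployed.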

This pure Kubernetes pipeline has significant advantages. It allows teams to move faster and release new versions of microservices with less effort. Teams deploy each microservice version only once, and that same deployment moves through dev, test, and production without being redeployed.

Most importantly, a pure Kubernetes pipeline may not need separate development, testing, and production environments. Each microservice undergoes a continuous process from initial development to working production version.

The CI/CD Pipeline Process: How Kubernetes Helps

Here is an overview of a typical CI/CD pipeline process, and how each step of the process can benefit when the application is deployed using Kubernetes.

1. Build

The CI/CD pipeline starts building the application as soon as a developer commits code to the main branch.

How Kubernetes helps: The application is packaged as a container image, which can be easily deployed to the Kubernetes cluster.
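As an illustration, the build step might tag each image with the commit it was built from. The sketch below assumes a hypothetical registry (`registry.example.com`) and image name (`web`), and it only assembles the Docker CLI commands. The CI server would then run each one, for example with `subprocess.run(cmd, check=True)`.

```python
import subprocess


def image_ref(registry: str, name: str, commit_sha: str) -> str:
    """Tag the image with the commit it was built from (hypothetical naming scheme)."""
    return f"{registry}/{name}:{commit_sha}"


def current_commit() -> str:
    """Return the full SHA of the checked-out commit (requires a Git checkout)."""
    result = subprocess.run(["git", "rev-parse", "HEAD"],
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()


def build_commands(registry: str, name: str, commit_sha: str,
                   context: str = ".") -> list[list[str]]:
    """Docker CLI invocations that build the image and push it to the registry."""
    ref = image_ref(registry, name, commit_sha)
    return [
        ["docker", "build", "-t", ref, context],
        ["docker", "push", ref],
    ]
```

On the CI server, `build_commands("registry.example.com", "web", current_commit())` yields a build command followed by a push command for the same commit-tagged image.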

2. Test

During the testing phase, integration tests and unit tests check if a new addition is causing any issues in the application. Static analysis tools discover security problems and bugs in code, and container scanners examine container images for weaknesses.

How Kubernetes helps: Kubernetes is beneficial for the testing stage, because it can readily spin up containers and then immediately shut them down when testing completes. This enables fast testing in a realistic environment.
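One way to get disposable test environments is to give each test run its own namespace and delete the namespace afterwards. This sketch assumes `kubectl` is installed and already configured for the test cluster. The `ci-test` prefix and the injectable `run` parameter are illustrative choices, not an established convention.

```python
import contextlib
import subprocess
import uuid


def kubectl(*args: str) -> None:
    """Run a kubectl command and raise if it fails."""
    subprocess.run(["kubectl", *args], check=True)


@contextlib.contextmanager
def ephemeral_namespace(prefix: str = "ci-test", run=kubectl):
    """Create a uniquely named namespace for one test run and always delete it."""
    name = f"{prefix}-{uuid.uuid4().hex[:8]}"  # lowercase, valid DNS label
    run("create", "namespace", name)
    try:
        yield name
    finally:
        # Deleting the namespace removes every object the tests created in it.
        run("delete", "namespace", name, "--wait=false")
```

The `finally` clause tears the namespace down even when the tests fail. Passing a fake `run` function lets you exercise the logic without a cluster.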

3. Deploy

Traditionally, CI/CD pipelines used a series of orchestrated steps to automatically deploy the application in each environment (development, testing, and production).

How Kubernetes helps: The CI/CD pipeline can build each new version of the application as one or more container images and push them to a registry. If desired, the pipeline can then automatically trigger a rolling update, which gradually replaces running pods with pods that use the updated image. Many teams do this using Helm charts and the helm upgrade command.
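Here is a minimal sketch of the Helm step that assembles the `helm upgrade` invocation the pipeline would run. It assumes Helm 3 and a chart that reads the image tag from `.Values.image.tag`, which is the layout `helm create` scaffolds. The release name, chart path, and namespace are placeholders.

```python
def helm_upgrade_command(release: str, chart: str, image_tag: str,
                         namespace: str, timeout: str = "5m") -> list[str]:
    """Build a `helm upgrade` call that rolls a new image tag out to the cluster."""
    return [
        "helm", "upgrade", release, chart,
        "--install",                        # create the release if it does not exist yet
        "--namespace", namespace,
        "--set", f"image.tag={image_tag}",  # assumes the chart reads .Values.image.tag
        "--wait",                           # block until the updated resources are ready
        "--timeout", timeout,
    ]
```

The pipeline would pass the result to `subprocess.run(cmd, check=True)`, so a failed or timed-out upgrade fails the job.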

4. Verify

This stage monitors the deployment to make sure it completes and doesn’t trigger errors. It is critical because a problem that goes unnoticed can result in production faults and disruption of service.

How Kubernetes helps: Kubernetes has automated mechanisms that ensure containers are running and self-heal by restarting failed containers or replacing failed pods. Kubernetes can also ensure there are enough instances of a container to serve current production loads.
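To verify a rollout from the pipeline, you can read the Deployment’s status and decide whether the rollout has finished. The function below is a simplified sketch modeled on the checks `kubectl rollout status` performs. It assumes the Deployment was fetched as JSON (for example with `kubectl get deployment web -o json`, where `web` is a placeholder) and parsed into a dict.

```python
def rollout_state(deployment: dict) -> tuple[str, bool]:
    """Return (message, done) for a Deployment read as JSON from the API server."""
    meta = deployment.get("metadata", {})
    spec = deployment.get("spec", {})
    status = deployment.get("status", {})

    # The controller has not yet processed the latest spec change.
    if meta.get("generation", 0) > status.get("observedGeneration", 0):
        return "waiting for the spec update to be observed", False

    # The rollout made no progress within spec.progressDeadlineSeconds.
    for cond in status.get("conditions", []):
        if cond.get("type") == "Progressing" and cond.get("reason") == "ProgressDeadlineExceeded":
            raise RuntimeError("rollout exceeded its progress deadline")

    desired = spec.get("replicas", 1)
    updated = status.get("updatedReplicas", 0)
    total = status.get("replicas", 0)
    available = status.get("availableReplicas", 0)

    if updated < desired:
        return f"{updated} of {desired} new replicas updated", False
    if total > updated:
        return f"{total - updated} old replicas pending termination", False
    if available < updated:
        return f"{available} of {updated} updated replicas available", False
    return "successfully rolled out", True
```

A pipeline would poll this until `done` is true, or fail the deployment if the progress deadline is exceeded.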

Kubernetes Pipeline Example

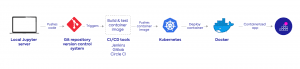

Here is an example of a Kubernetes-based CI/CD pipeline.


The pipeline includes the following components:

- Version control system: a code repository that multiple developers push updates and code changes to. The CI/CD tool is typically triggered when developers push code changes to a Git-based version control system. A common example is GitHub.

- CI/CD tools: a test and integration system that builds the Docker image and runs a set of tests. It also deploys the built image to the Kubernetes cluster. Common examples include Travis CI, CircleCI, and Jenkins.

- Kubernetes cluster: after the CI/CD tool verifies that a build is ready, it begins to gradually deploy it to the Kubernetes cluster, running tests as soon as new instances are deployed.

- Containers: units running within pods in the Kubernetes cluster, which contain the new release of the application. Common container technologies include Docker and runc.

Related content: Read our guide to CI/CD with Jenkins.

Kubernetes CI/CD Best Practices

Containers Should Be Immutable

Docker lets you overwrite an existing tag when pushing an image. The best-known example is the “latest” tag, which is risky because you can end up not knowing what code is running in your container.

A recommended approach is to make all tags immutable by tying each one to a unique, immutable value in your codebase: the commit ID. This connects a container directly to the code it was built from, so you always know exactly what is running inside that specific container.
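A pipeline can enforce this rule before anything is deployed. The sketch below rejects image references that have no tag (which Docker treats as “latest”) or whose tag does not look like a commit SHA, and it accepts references pinned by digest. The hex-length check is a heuristic assumption, not a Docker rule.

```python
import re

# Heuristic: 7-40 lowercase hex characters looks like a short or full Git commit SHA.
COMMIT_TAG = re.compile(r"[0-9a-f]{7,40}")


def check_image_ref(ref: str) -> None:
    """Raise ValueError unless the image is pinned to a commit-SHA tag or a digest."""
    if "@sha256:" in ref:
        return  # pinned by content digest, which cannot be rewritten
    _, sep, tag = ref.rpartition(":")
    # No colon at all, or the colon belongs to a registry port (e.g. host:5000/app).
    if not sep or "/" in tag:
        raise ValueError(f"{ref}: no tag, so Docker falls back to 'latest'")
    if not COMMIT_TAG.fullmatch(tag):
        raise ValueError(f"{ref}: tag {tag!r} is not a commit SHA")
```

Running this check over every image in a manifest before deployment blocks mutable tags such as “latest” or hand-written version labels.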

Implement Git-based Workflows (GitOps)

Triggering CI/CD pipelines through Git-based operations has many advantages for usability and collaboration. Organizations keep all pipeline changes and source code in a single repository, which lets developers review changes and catch mistakes before deployment.

Furthermore, integration of chat tools (such as Slack) and support for build snapshots help track changes and recover from failure.
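GitOps tools such as Argo CD and Flux continuously compare what is declared in Git with what is running in the cluster, and they reconcile the difference. The sketch below shows only the comparison step, under simplifying assumptions: manifests are plain dicts keyed by a hypothetical `Kind/name` string, and any difference counts as drift. Real tools normalize fields before comparing.

```python
def plan(desired: dict[str, dict], live: dict[str, dict]) -> dict[str, list[str]]:
    """Changes needed to make the cluster (live) match Git (desired).

    Both maps are keyed by "Kind/name"; values are simplified manifests.
    """
    return {
        "create": sorted(k for k in desired if k not in live),
        "update": sorted(k for k in desired if k in live and desired[k] != live[k]),
        "delete": sorted(k for k in live if k not in desired),
    }
```

Because Git is the source of truth, a reviewed and merged commit changes `desired`, and the reconciler applies the resulting plan. Reverting the commit rolls the cluster back the same way.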

Leverage Blue-Green or Canary Deployment Patterns

CI/CD pipelines deploy code to production after it passes the previous testing stages. Even so, a faulty or insecure version of the application can still reach production.

Given this, you should implement a blue-green deployment pattern. Blue-green deployment means you deploy a second set of application instances, in parallel to your production instances. You switch users over to the new version, but keep the old version intact, so you can easily roll back to it in case of failure.
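In Kubernetes, one common way to implement the switch is a Service whose label selector points at either the blue or the green pods. This sketch builds the patch body for that Service. The `color` label and the `web` app name are assumptions, and the result could be applied with `kubectl patch service web -p '<json>'`.

```python
import json

COLORS = ("blue", "green")


def switch_patch(app: str, color: str) -> dict:
    """Service patch that points all traffic for `app` at pods of one color."""
    if color not in COLORS:
        raise ValueError(f"unknown color {color!r}")
    return {"spec": {"selector": {"app": app, "color": color}}}


def patch_json(app: str, color: str) -> str:
    """The same patch serialized for `kubectl patch -p`."""
    return json.dumps(switch_patch(app, color))


def other(color: str) -> str:
    """The idle color: the one to deploy to next, or to roll back to."""
    return "green" if color == "blue" else "blue"
```

Rolling back is the same patch with the other color, because the old pods are still running.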

A canary deployment releases an upgraded version alongside your existing deployment and exposes it to only a small share of traffic. The canary must include all required application code and dependencies so that it behaves as the full release will. This lets you test upgrades and new features and analyze how they perform in production.

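In Kubernetes, a basic canary deployment can be managed with a Service. The Service uses labels and selectors to route traffic to every pod that carries the specified label, so if the stable and canary deployments share that label, traffic is split between them roughly in proportion to their replica counts. This makes it easy to add or remove the canary deployment.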
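Because the split follows replica counts, the canary percentage you can achieve depends on how many pods you run. This sketch estimates that share. It assumes traffic is spread roughly evenly across matching pods, which is an approximation rather than a guarantee.

```python
import math


def canary_share(stable_replicas: int, canary_replicas: int) -> float:
    """Approximate fraction of Service traffic that reaches canary pods."""
    total = stable_replicas + canary_replicas
    return canary_replicas / total if total else 0.0


def canary_replicas_for(target_percent: float, total_replicas: int) -> int:
    """Canary replicas needed to receive at least target_percent of traffic (minimum 1)."""
    return max(1, math.ceil(total_replicas * target_percent / 100))
```

For example, with 10 pods in total the smallest possible canary is 1 pod, or about 10% of traffic. Finer splits need more replicas or weighted routing from an ingress controller or service mesh.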

Release The Container That Was Tested

The immutable container you deploy to a staging, dev, or QA environment should be the same container you deploy to production. This prevents changes from creeping in between a successful test and the actual production release. You can achieve this by triggering the production deployment with a Git tag and deploying the container tagged with that tag’s commit ID.
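Deploying by digest rather than by tag pins production to the exact image bytes that were tested. The sketch assumes a hypothetical record, written by the pipeline, that maps each commit SHA to the digest of the image that passed testing. The release commit could come from resolving the Git tag, for example with `git rev-list -n 1 v1.4.0` (tag name hypothetical).

```python
def production_image(repo: str, release_commit: str, tested: dict[str, str]) -> str:
    """Return the exact image that passed testing for this commit, pinned by digest.

    `tested` maps commit SHA -> image digest ("sha256:...") and is a hypothetical
    record the pipeline writes when an image passes staging/QA tests.
    """
    digest = tested.get(release_commit)
    if digest is None:
        raise LookupError(f"no tested image recorded for commit {release_commit}")
    return f"{repo}@{digest}"
```

If the tagged commit never produced a passing image, the lookup fails and nothing is promoted.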

Keep Secrets Secure

A secret is a digital credential, such as a password, token, or key, that must be protected within the Kubernetes cluster. Most applications use secrets to authenticate with CI/CD services and with other applications in the Kubernetes cluster. Secrets stored in a source control system such as GitHub can be exposed through the repository or the CI/CD pipeline. So, keep secrets out of source code and container images, and encrypt them.
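In practice, a Kubernetes Secret is usually injected into the pod at runtime, either as files in a mounted volume or as environment variables, so nothing sensitive lives in source code or in the image. This sketch reads a secret that way. The `/etc/secrets` mount path and the name-to-variable mapping are assumptions that would need to match whatever your pod spec configures.

```python
import os
from pathlib import Path


def read_secret(name: str, mount_dir: str = "/etc/secrets") -> str:
    """Read a secret injected at runtime, from a mounted file or an env variable."""
    # A Secret mounted as a volume appears as one file per key.
    path = Path(mount_dir) / name
    if path.is_file():
        return path.read_text().strip()
    # Fallback: a Secret exposed through an environment variable.
    env_name = name.upper().replace("-", "_")
    value = os.environ.get(env_name)
    if value is None:
        raise KeyError(f"secret {name!r} not found in {mount_dir} or ${env_name}")
    return value
```

The application never contains the credential itself; rotating a secret only means updating it in the cluster.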

Test and Scan Container Images

Scanning and testing every new container image is critical for identifying vulnerabilities introduced by new builds or components. Testing container images can also verify that containers have the expected content and that the image is valid and runs correctly.
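A scan step usually fails the build when findings cross a severity threshold. Many scanners can do this themselves (Trivy, for instance, has `--severity` and `--exit-code` options), but the gating logic is simple enough to sketch. The finding format below, a list of dicts with `id` and `severity`, is hypothetical, so you would map your scanner’s real output into it.

```python
SEVERITIES = ["LOW", "MEDIUM", "HIGH", "CRITICAL"]


def blocking_findings(findings: list[dict], threshold: str = "HIGH") -> list[str]:
    """IDs of findings at or above `threshold`; unknown severities are ignored here."""
    limit = SEVERITIES.index(threshold)
    blocked = []
    for finding in findings:
        severity = str(finding.get("severity", "")).upper()
        if severity in SEVERITIES and SEVERITIES.index(severity) >= limit:
            blocked.append(finding["id"])
    return blocked


def gate_exit_code(findings: list[dict], threshold: str = "HIGH") -> int:
    """A non-zero exit code fails the CI job when blocking findings exist."""
    return 1 if blocking_findings(findings, threshold) else 0
```

Whether to ignore or block findings with an unknown severity is a policy decision. This sketch ignores them.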

Kubernetes Pipeline CD with Spot.io

Ocean CD is a Kubernetes-native solution. It focuses on the most difficult part of modern application delivery by automating verification and mission-critical deployment processes. With Ocean CD, developers can push code while DevOps teams maintain SLOs and governance.

Learn more in our detailed guide to DevOps automation.

Key features of Ocean CD include:

- Complete verification and deployment automation: Ocean CD validates and controls deployments and rolls back when required, so developers and DevOps teams can be confident that code deploys smoothly into production.

- Out-of-the-box delivery approaches: Ocean CD’s out-of-the-box progressive delivery strategies reduce release management overhead and make deployments simple to execute. Developers gain visibility into every delivery stage, and thus the entire CD process.

- Container-based infrastructure: with Ocean, users run on container-driven infrastructure that scales automatically to meet application requirements during deployment, optimizing cloud infrastructure costs and operations.

See Additional Guides on Key Kubernetes Topics

Together with our content partners, we have authored in-depth guides on several other topics that can also be useful as you explore the world of Kubernetes.

Kubernetes Troubleshooting

Authored by Komodor

- Kubernetes CrashLoopBackOff Error: What It Is and How to Fix It

- Kubernetes Health Checks: Everything You Need to Know

- How to Fix CreateContainerError & CreateContainerConfigError

Container Security

Authored by Tigera

- What Is Container Security? 8 Best Practices You Must Know

- Docker Container Monitoring: Options, Challenges & Best Practices

- Docker Security: 5 Risks and 5 Best Practices

Kubernetes Security

Authored by Tigera