As a managed data plane service for containerized applications, Spot Ocean provides a serverless experience for running containers in the cloud. Ocean integrates with the control plane of your choice and handles key areas of infrastructure management, from provisioning compute and autoscaling to pricing optimization and right-sizing.

A core component of Ocean’s architecture is the Ocean controller, which is how Ocean and your Kubernetes cluster integrate and interact. The Ocean controller exports pod metadata to the Spot backend and enables Ocean to take automated actions to manage, control and optimize your infrastructure.

How does the Ocean controller work?

One controller is usually deployed per cluster. It consists of six Kubernetes resources:

- ConfigMap

- Secret (optional)

- ServiceAccount

- ClusterRole

- ClusterRoleBinding

- Deployment

The controller binds the Kubernetes cluster to the relevant Ocean resource based on the configured Spot Account ID, Spot Token, and a unique Cluster Identifier specified per cluster. Ocean is responsible for managing the cluster infrastructure, and because the controller runs in a pod inside your Kubernetes cluster, it’s crucial that the controller is always available so that Ocean can accommodate newly added workloads. To ensure reliability, the controller is configured with a priority class (priorityClassName: system-node-critical), so the Kubernetes scheduler prioritizes the Ocean controller pod if it is evicted or becomes pending (due to a scale-down event, rolling update, unhealthy node, etc.).
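To make the resource list and the priority class concrete, here is a minimal Python sketch that assembles skeleton manifests for the six resources as plain dictionaries. It is not Spot’s installation manifest: the resource names, the namespace, the account ID, and the ConfigMap/Secret keys (`spot-account-id`, `cluster-identifier`, `spot-token`) are hypothetical placeholders. Only the resource kinds, the optional Secret, the `system-node-critical` priority class, and the GCR image path come from this article.

```python
import json
from typing import Optional

# Hypothetical names and namespace; Spot's installation scripts may differ.
NAME = "ocean-controller"
NAMESPACE = "kube-system"
IMAGE = "gcr.io/spotinst-artifacts/kubernetes-cluster-controller:latest"
RBAC = "rbac.authorization.k8s.io/v1"


def _meta(namespaced: bool = True) -> dict:
    """Metadata block; ClusterRole and ClusterRoleBinding are cluster-scoped."""
    return {"name": NAME, "namespace": NAMESPACE} if namespaced else {"name": NAME}


def controller_manifests(account_id: str, cluster_id: str,
                         token: Optional[str] = None) -> list:
    """Build skeleton manifests for the controller's Kubernetes resources."""
    manifests = [
        # ConfigMap binding this cluster to an Ocean resource (hypothetical keys).
        {"apiVersion": "v1", "kind": "ConfigMap", "metadata": _meta(),
         "data": {"spot-account-id": account_id, "cluster-identifier": cluster_id}},
    ]
    if token is not None:
        # The Secret is optional; stringData lets the API server base64-encode it.
        manifests.append({"apiVersion": "v1", "kind": "Secret", "metadata": _meta(),
                          "type": "Opaque", "stringData": {"spot-token": token}})
    manifests += [
        {"apiVersion": "v1", "kind": "ServiceAccount", "metadata": _meta()},
        {"apiVersion": RBAC, "kind": "ClusterRole", "metadata": _meta(False),
         "rules": []},  # permission sections omitted in this sketch
        {"apiVersion": RBAC, "kind": "ClusterRoleBinding", "metadata": _meta(False),
         "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                     "kind": "ClusterRole", "name": NAME},
         "subjects": [{"kind": "ServiceAccount", "name": NAME, "namespace": NAMESPACE}]},
        {"apiVersion": "apps/v1", "kind": "Deployment", "metadata": _meta(),
         "spec": {
             "replicas": 1,
             "selector": {"matchLabels": {"app": NAME}},
             "template": {
                 "metadata": {"labels": {"app": NAME}},
                 "spec": {
                     "serviceAccountName": NAME,
                     # Highest built-in priority: the scheduler places this pod
                     # first (and may preempt others) if it is evicted or pending.
                     "priorityClassName": "system-node-critical",
                     # Wiring the ConfigMap/Secret into the container is omitted here.
                     "containers": [{"name": NAME, "image": IMAGE}],
                 },
             },
         }},
    ]
    return manifests


if __name__ == "__main__":
    # kubectl apply -f accepts a JSON v1 List as well as YAML.
    print(json.dumps({"apiVersion": "v1", "kind": "List",
                      "items": controller_manifests("act-12345678", "my-cluster")},
                     indent=2))
```

Passing a `token` adds the optional Secret; leaving it out yields five resources, mirroring the "(optional)" note in the list above.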

In the rare event that the Ocean controller does become unavailable, the Spot platform maintains the cluster’s current capacity. Workloads running on spot instances are still replaced when there is a risk of interruption, with fallback to on-demand still enabled, and workloads can continue to utilize committed capacity. In this scenario, however, Ocean cannot react to pending pods (scale-up) or scale down for optimization. Safe draining of terminated nodes is also affected.

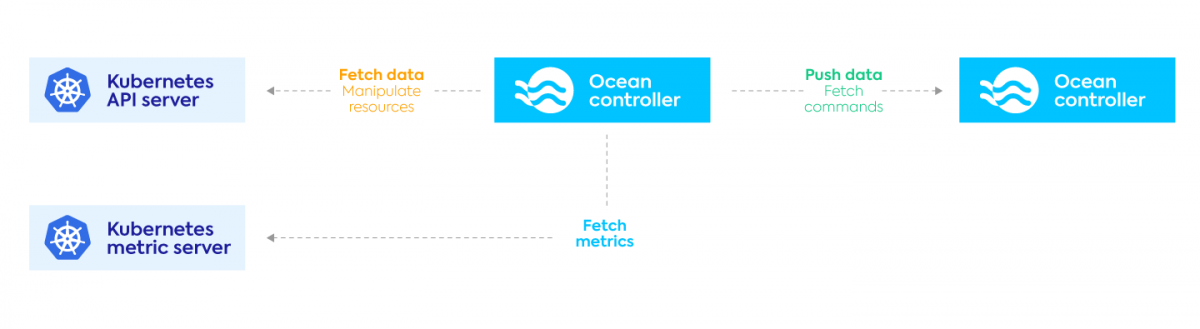

Ocean controller architecture

Deployed within the Kubernetes cluster, the Ocean controller communicates with the Kubernetes API and the Metrics Server to fetch cluster data and ship it to the Ocean SaaS, which is responsible for making scaling decisions. When Ocean decides to scale down, the controller gracefully drains the nodes being removed from the cluster.

The controller communicates using the HTTPS protocol and requires only egress communication towards the Ocean SaaS.
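Because the controller only initiates outbound HTTPS connections, no inbound port needs to be opened on the cluster. The Python sketch below illustrates that push model with the standard library: it packages pod metadata as a JSON POST request to the default endpoint named in this article. The request path and the payload fields are hypothetical; the article does not describe the controller’s actual API, and the controller itself is not written in Python.

```python
import json
import urllib.request

# Default endpoint named in this article; the path below is hypothetical.
BASE_URL = "https://api.spotinst.io"
REPORT_PATH = "/hypothetical/controller/report"


def build_report(pods: list, cluster_id: str) -> urllib.request.Request:
    """Package pod metadata as an outbound (egress-only) HTTPS POST request."""
    body = json.dumps({"clusterIdentifier": cluster_id, "pods": pods}).encode("utf-8")
    return urllib.request.Request(
        BASE_URL + REPORT_PATH,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_report(
    [{"name": "web-1", "namespace": "default", "cpuRequest": "250m"}], "my-cluster"
)
print(req.get_method(), req.full_url)
# urllib.request.urlopen(req) would send it; the sketch stops before any network call.
```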

Controller Permissions

The Controller policy consists of multiple sections described below:

- Read-Only: Permissions for fetching data. Required for functional operation of Ocean.

- Node/Pod Manipulation: Permissions to update nodes and evict pods. This section is required for draining purposes, updating nodes as unschedulable, and evicting pods.

- CleanUp Feature: Required for Ocean AKS-Engine integration.

- CSR Approval: Required for the CSR approval feature.

- Auto-update: This section gives the controller permissions to update its deployment and role. This is required only for the auto-update feature.

- Full CRUD for Resources: Currently, the resources include pods, deployments, and daemonsets. This is required for the Run Workloads feature.

- Ocean for Apache Spark: Required by the Ocean for Spark feature.

The policy can be adjusted according to the desired capabilities. You can safely remove sections if you’d like to opt out of certain features.
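One way to picture a sectioned policy is as named groups of RBAC rules that are concatenated into a single ClusterRole, so that opting out of a feature simply drops its group. The sketch below assumes that layout. The rules are illustrative Kubernetes RBAC entries (for example, eviction through the `pods/eviction` subresource), not Spot’s published controller policy, and the section keys are hypothetical.

```python
# Hypothetical split of a ClusterRole into feature sections. The rules are
# illustrative RBAC entries, not Spot's published controller policy.
POLICY_SECTIONS = {
    "read-only": [
        {"apiGroups": [""], "resources": ["pods", "nodes", "namespaces"],
         "verbs": ["get", "list", "watch"]},
        {"apiGroups": ["apps"], "resources": ["deployments", "daemonsets", "replicasets"],
         "verbs": ["get", "list", "watch"]},
    ],
    "node-pod-manipulation": [
        # Mark nodes unschedulable (cordon) while draining.
        {"apiGroups": [""], "resources": ["nodes"], "verbs": ["patch", "update"]},
        # Evict pods through the Eviction API subresource.
        {"apiGroups": [""], "resources": ["pods/eviction"], "verbs": ["create"]},
    ],
    "csr-approval": [
        {"apiGroups": ["certificates.k8s.io"],
         "resources": ["certificatesigningrequests",
                       "certificatesigningrequests/approval"],
         "verbs": ["get", "list", "watch", "update"]},
        # Approving also requires the "approve" verb on the relevant signers.
        {"apiGroups": ["certificates.k8s.io"], "resources": ["signers"],
         "verbs": ["approve"]},
    ],
}

# The article marks read-only access as required for Ocean to function.
REQUIRED = {"read-only"}


def build_cluster_role(name: str, opt_out: set) -> dict:
    """Assemble a ClusterRole, dropping the sections the user opts out of."""
    blocked = opt_out & REQUIRED
    if blocked:
        raise ValueError(f"cannot remove required sections: {sorted(blocked)}")
    rules = [rule
             for section, section_rules in POLICY_SECTIONS.items()
             if section not in opt_out
             for rule in section_rules]
    return {"apiVersion": "rbac.authorization.k8s.io/v1", "kind": "ClusterRole",
            "metadata": {"name": name}, "rules": rules}


role = build_cluster_role("ocean-controller", opt_out={"csr-approval"})
print(len(role["rules"]))  # 4: read-only and node/pod manipulation rules remain
```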

You can extend the Ocean Cost Analysis feature to CustomResourceDefinitions (CRDs) by adding another ClusterRole and ClusterRoleBinding. Because CRDs extend the Kubernetes API, the controller must be aware of the specific extension name in order to query and collect the relevant data.

Please note: if auto-update is disabled, these modifications can be made directly to the Ocean Controller ClusterRole object (with auto-update enabled, the update process overrides changes to that object).
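The extra ClusterRole and ClusterRoleBinding for a CRD can be sketched as follows. Keeping the rules in a separate ClusterRole, rather than editing the controller’s own one, keeps them out of the object that auto-update rewrites. The CRD group and plural (`example.com`, `widgets`), the role name prefix, and the ServiceAccount name and namespace are hypothetical; substitute the values from your CRD and your controller installation.

```python
def crd_read_access(crd_group: str, crd_plural: str,
                    sa_name: str, sa_namespace: str) -> list:
    """Build a ClusterRole + ClusterRoleBinding granting read access to one CRD."""
    name = f"ocean-cost-analysis-{crd_plural}"  # hypothetical naming scheme
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRole",
        "metadata": {"name": name},
        # Read-only verbs are enough to query and collect data about CRD objects.
        "rules": [{"apiGroups": [crd_group], "resources": [crd_plural],
                   "verbs": ["get", "list", "watch"]}],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},
        "roleRef": {"apiGroup": "rbac.authorization.k8s.io",
                    "kind": "ClusterRole", "name": name},
        # Bind to the controller's ServiceAccount (name/namespace are assumptions).
        "subjects": [{"kind": "ServiceAccount", "name": sa_name,
                      "namespace": sa_namespace}],
    }
    return [role, binding]


role, binding = crd_read_access("example.com", "widgets",
                                "ocean-controller", "kube-system")
print(role["metadata"]["name"])  # ocean-cost-analysis-widgets
```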

Controller Auto-Update, disable-auto-update

By default, the controller auto-update feature is enabled so that users always run the latest Ocean controller version. Controller updates bring stability and performance improvements, new metric collection, and support for new Kubernetes versions and features. With auto-update enabled, users don’t have to monitor for new controller versions or upgrade manually. Users can find the version history here.

To disable automatic updates, add the parameter disable-auto-update: "true" to the controller configMap object and restart the controller by deleting the current pod (its Deployment then starts a replacement pod that picks up the updated configuration).
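The quotes around "true" matter: ConfigMap `data` values must be strings, so a bare boolean is rejected. The small Python sketch below sets such an option on a ConfigMap manifest held as a dictionary and renders booleans as the lowercase strings Kubernetes expects (Python’s `str(True)` would give `"True"`). The ConfigMap name and namespace are hypothetical; only the `disable-auto-update` key comes from this article.

```python
def with_option(configmap: dict, key: str, value) -> dict:
    """Return a copy of a ConfigMap manifest with one data entry set.

    ConfigMap data values must be strings, so booleans are rendered as the
    lowercase strings "true"/"false" rather than Python's "True"/"False".
    """
    if isinstance(value, bool):
        value = "true" if value else "false"
    updated = dict(configmap)
    updated["data"] = {**configmap.get("data", {}), key: str(value)}
    return updated


cm = {"apiVersion": "v1", "kind": "ConfigMap",
      # Hypothetical name and namespace; use those of your controller install.
      "metadata": {"name": "ocean-controller", "namespace": "kube-system"},
      "data": {}}
cm = with_option(cm, "disable-auto-update", True)
print(cm["data"])  # {'disable-auto-update': 'true'}
```

After applying the updated ConfigMap, delete the running controller pod (for example, `kubectl delete pod <pod-name> -n <namespace>`) so that the Deployment starts a replacement.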

Update Process

When Spot releases a new controller version, the update is managed by Spot developers. During this process, both the controller Deployment and ClusterRole objects are updated.

Please note: if the controller isn’t available during the release cycle for any reason, including use of the shutdown/running hours feature, it won’t be updated until the next controller release.

Please note: the auto-update process overrides any ClusterRole changes applied by the user.

Proxy usage, proxy-url

For customers who route their egress traffic through a proxy, the Ocean controller supports an optional proxy-url parameter, which sends the traffic from the Ocean controller to the Ocean SaaS through the configured proxy server. This parameter is supported from controller version 1.0.45.
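As an analogy for what proxy-url does, the Python sketch below builds a standard-library HTTP client whose HTTPS traffic goes through a proxy: instead of connecting to the Ocean SaaS directly, the client asks the proxy to open a tunnel (HTTP CONNECT) to it. This illustrates the general mechanism only; the controller’s internals are not described in this article, and the proxy address is a hypothetical placeholder.

```python
import urllib.request

# Hypothetical proxy address; in the controller it would come from proxy-url.
PROXY_URL = "http://proxy.internal.example:3128"


def build_opener_via_proxy(proxy_url: str) -> urllib.request.OpenerDirector:
    """Build an HTTP client whose HTTPS requests are routed through proxy_url."""
    proxy = urllib.request.ProxyHandler({"https": proxy_url})
    # build_opener replaces the default ProxyHandler with this instance.
    return urllib.request.build_opener(proxy)


opener = build_opener_via_proxy(PROXY_URL)
# opener.open("https://api.spotinst.io/...") would tunnel through the proxy
# via HTTP CONNECT instead of connecting to the Ocean SaaS directly.
```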

Static endpoint, base-url

Customers with strict firewall rules for egress communication may need to allow specific static IP addresses in those rules. By default, the Ocean controller communicates with the Ocean SaaS using the following endpoint: api.spotinst.io.

The Ocean controller can be configured to communicate through a different endpoint that has a static IP address. If your configuration requires this, please reach out to your Spot representative or the Spot support team for more details.
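The override behaves like a simple fallback: use base-url when it is set, otherwise the default endpoint. The Python sketch below captures that rule and rejects non-HTTPS values, since the controller communicates over HTTPS. It assumes base-url holds a full `https://` URL, which this article does not confirm, and the static endpoint shown in the usage line is a placeholder; the real address comes from Spot.

```python
from urllib.parse import urlparse

# Default endpoint from this article, with the https:// scheme it uses.
DEFAULT_BASE_URL = "https://api.spotinst.io"


def resolve_base_url(config: dict) -> str:
    """Pick the Ocean SaaS endpoint: the base-url override if set, else the default."""
    url = (config.get("base-url") or DEFAULT_BASE_URL).rstrip("/")
    parsed = urlparse(url)
    # The controller talks to the SaaS over HTTPS only.
    if parsed.scheme != "https" or not parsed.hostname:
        raise ValueError(f"base-url must be an https:// URL, got {url!r}")
    return url


print(resolve_base_url({}))  # https://api.spotinst.io
# Placeholder only; the static endpoint is provided by Spot.
print(resolve_base_url({"base-url": "https://static-endpoint.example.com/"}))
```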

Certificate Signing Requests (CSR) Approval, enable-csr-approval

Kubernetes clusters can be configured with TLS bootstrapping, which keeps communication private and interference-free. With TLS bootstrapping, a node joining the cluster creates a certificate signing request that must be approved before the node is registered and can operate as part of the cluster.

Ocean can approve node registration certificate signing requests (CSRs). To enable this, add the following configuration to the controller configMap: enable-csr-approval: "true"

When enabled, the Ocean controller will only approve CSRs for nodes launched by Ocean, removing the risk of unknown nodes registering to the cluster.
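The approval rule can be sketched as a filter: approve a pending CSR only if it targets a kubelet signer and names a node that Ocean launched. In the Python sketch below, the `kubernetes.io/kube-apiserver-client-kubelet` and `kubernetes.io/kubelet-serving` signers and the `system:node:<name>` common-name convention are standard Kubernetes. The `PendingCSR` type, the pre-parsed `subject_cn` field, and the `ocean_nodes` set (standing in for however Ocean tracks the nodes it launched) are hypothetical simplifications; the article does not describe how Ocean performs the match.

```python
from dataclasses import dataclass

NODE_PREFIX = "system:node:"
# Built-in Kubernetes signers for kubelet client and serving certificates.
KUBELET_SIGNERS = {
    "kubernetes.io/kube-apiserver-client-kubelet",
    "kubernetes.io/kubelet-serving",
}


@dataclass
class PendingCSR:
    """Hypothetical, simplified view of a pending CSR."""
    name: str
    signer_name: str
    subject_cn: str  # common name, assumed already decoded from the PEM request


def should_approve(csr: PendingCSR, ocean_nodes: set) -> bool:
    """Approve only kubelet CSRs for nodes that Ocean itself launched."""
    if csr.signer_name not in KUBELET_SIGNERS:
        return False
    if not csr.subject_cn.startswith(NODE_PREFIX):
        return False
    node_name = csr.subject_cn[len(NODE_PREFIX):]
    return node_name in ocean_nodes


ocean_nodes = {"ip-10-0-1-23.ec2.internal"}
pending = [
    PendingCSR("csr-a", "kubernetes.io/kube-apiserver-client-kubelet",
               "system:node:ip-10-0-1-23.ec2.internal"),
    PendingCSR("csr-b", "kubernetes.io/kube-apiserver-client-kubelet",
               "system:node:unknown-node"),
]
print([c.name for c in pending if should_approve(c, ocean_nodes)])  # ['csr-a']
```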

Note: Ocean’s support for GKE Shielded Nodes follows a similar practice but does not require changes to the controller configMap.

Container Image location

The Ocean Controller image is located in two main repositories:

- Docker Hub – spotinst/kubernetes-cluster-controller:latest

- GCR – gcr.io/spotinst-artifacts/kubernetes-cluster-controller:latest

Due to Docker Hub rate limit restrictions, our installation scripts default to the GCR image location.

Controller Not Reporting?

Follow this helpful troubleshooting guide. If those steps do not resolve the issue, please reach out to our support team via the console for assistance.

Summary

The controller enables the powerful automated features and capabilities of Ocean so you can scale fully optimized Kubernetes clusters quickly and efficiently.

To learn more about Ocean, read the other articles in our Ocean explained series on automatic headroom and container-driven autoscaling.