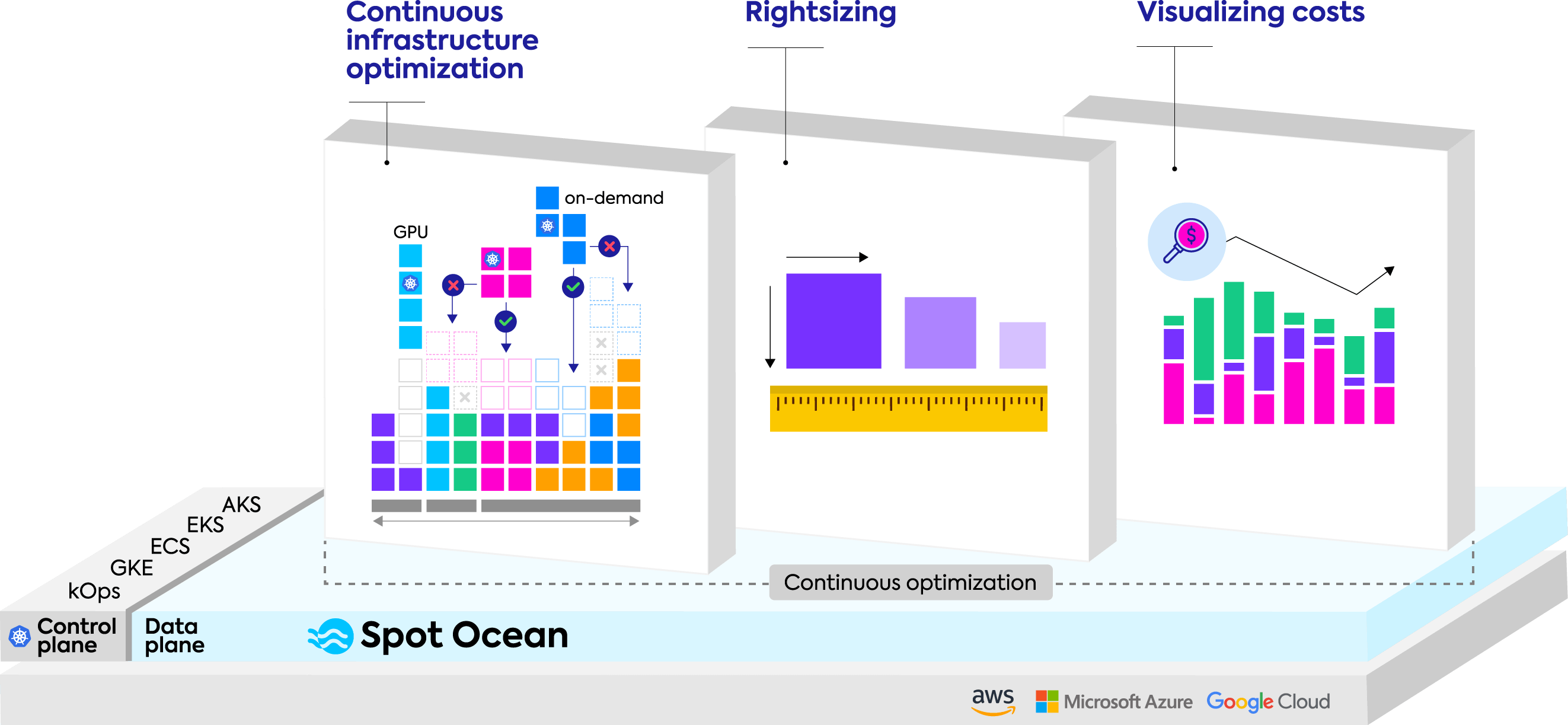

Automating continuous optimization

Intelligent Kubernetes infrastructure optimization

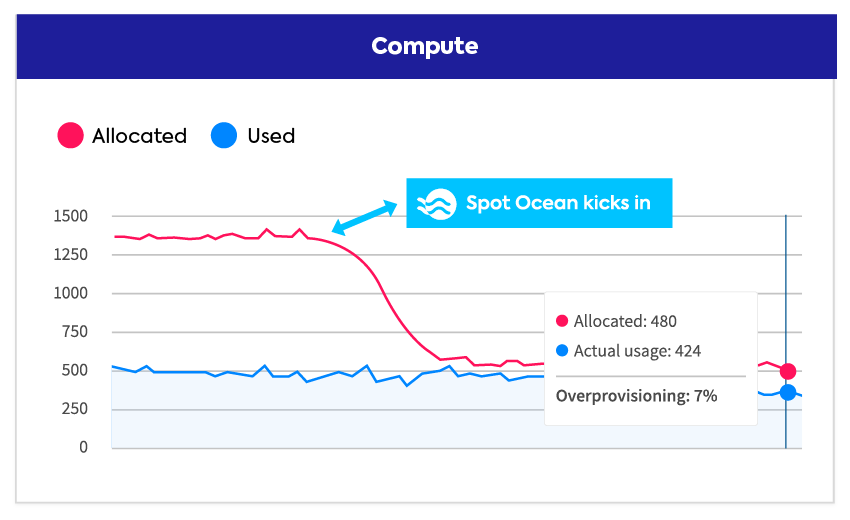

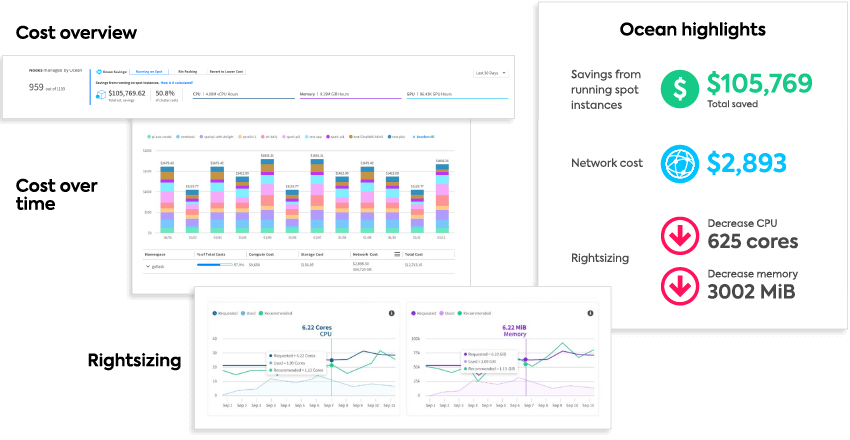

Continuous cost optimization

Get maximum performance at the lowest possible cost with application-aware, AI-driven automated provisioning of resources, pricing models, and commitments.

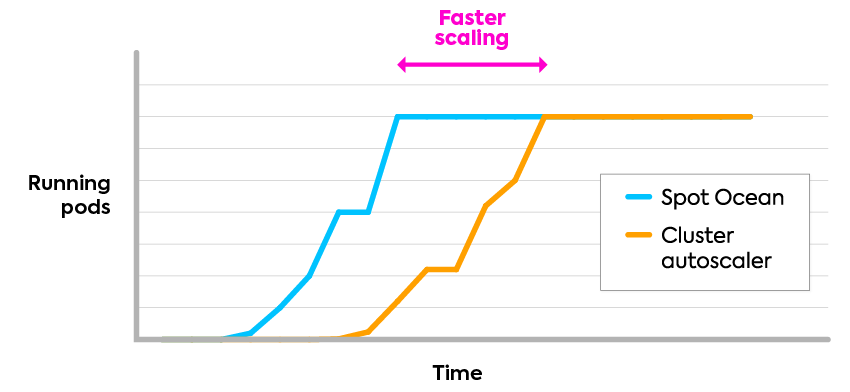

The fastest scaling

Respond in real time to workload changes with Spot Ocean’s event-driven controller, which lets you keep resource allocation and performance optimal without delay.

Exact automated rightsizing

Maximize utilization and efficiency with tailored recommendations and fully automated rightsizing of compute resources.

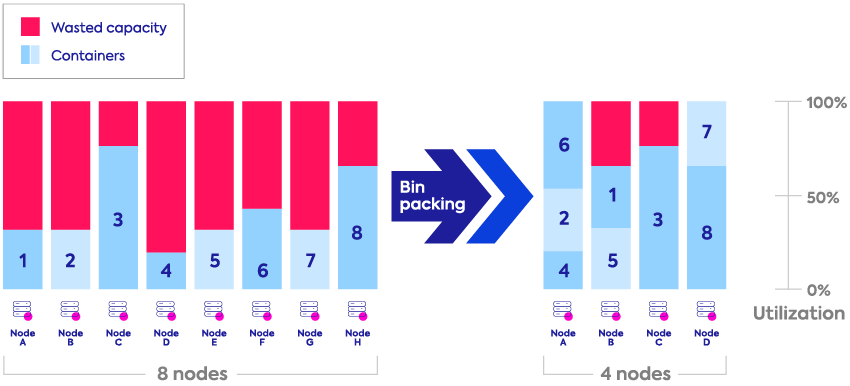

Nonstop bin-packing

Automatically place your containers in a way that optimizes resource utilization and minimizes wasted capacity.
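
To make the idea of bin-packing concrete, here is a minimal sketch using the classic first-fit-decreasing heuristic. It is an illustration only, not Ocean's actual placement logic: the `Node` class, the `first_fit_decreasing` function, the single CPU dimension (in millicores), and the equal-sized nodes are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical node with a single resource dimension (CPU millicores)."""
    capacity_mcpu: int
    pods: list = field(default_factory=list)  # list of (pod_name, cpu_request)

    @property
    def used_mcpu(self) -> int:
        return sum(cpu for _, cpu in self.pods)

    def fits(self, cpu: int) -> bool:
        return self.used_mcpu + cpu <= self.capacity_mcpu


def first_fit_decreasing(pod_requests: dict, node_capacity_mcpu: int) -> list:
    """Place pods on equal-sized nodes, largest request first.

    Each pod goes on the first node with enough free CPU; a new node is
    opened only when no existing node can hold it.
    """
    nodes = []
    ordered = sorted(pod_requests.items(), key=lambda kv: kv[1], reverse=True)
    for name, cpu in ordered:
        if cpu > node_capacity_mcpu:
            raise ValueError(f"pod {name!r} does not fit on any node")
        target = next((n for n in nodes if n.fits(cpu)), None)
        if target is None:
            target = Node(node_capacity_mcpu)
            nodes.append(target)
        target.pods.append((name, cpu))
    return nodes


# Illustrative requests (made-up values, in millicores).
requests = {"api": 1500, "worker": 500, "cache": 1000, "cron": 1000}
packed = first_fit_decreasing(requests, node_capacity_mcpu=2000)
for i, node in enumerate(packed):
    print(i, node.used_mcpu, [name for name, _ in node.pods])
```

A real scheduler also has to weigh memory, affinity rules, and pod disruption, so treat this as a picture of the goal (fewer, fuller nodes) rather than of the mechanism.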

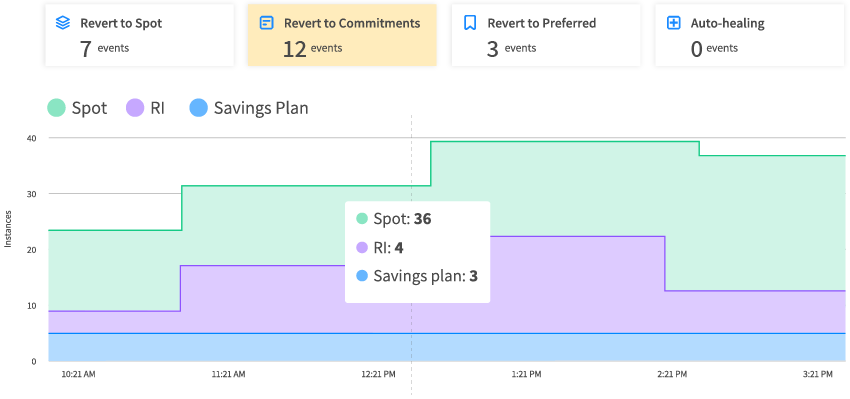

Maximized commitments utilization

Ensure your commitments are fully utilized before provisioning on-demand or preemptible instances.
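
The "commitments first" ordering can be sketched as a simple allocation rule. This is a hedged illustration under stated assumptions, not Ocean's implementation: `plan_capacity` and its parameters are hypothetical names, capacity is reduced to a single vCPU count, and preferring spot over on-demand for the remainder is an assumption of this example.

```python
def plan_capacity(demand_vcpu: int, committed_vcpu: int, spot_available_vcpu: int) -> dict:
    """Split demand across purchase options, drawing on commitments first.

    Assumption for this sketch: whatever the commitments cannot cover is
    served from spot capacity when available, then from on-demand.
    """
    if min(demand_vcpu, committed_vcpu, spot_available_vcpu) < 0:
        raise ValueError("capacity values must be non-negative")

    from_commitments = min(demand_vcpu, committed_vcpu)
    remaining = demand_vcpu - from_commitments

    from_spot = min(remaining, spot_available_vcpu)
    from_on_demand = remaining - from_spot

    return {
        "commitments": from_commitments,
        "spot": from_spot,
        "on_demand": from_on_demand,
    }


# Demand exceeds commitments: the overflow spills to spot, then on-demand.
print(plan_capacity(demand_vcpu=100, committed_vcpu=40, spot_available_vcpu=50))
# Demand below commitments: nothing else is provisioned.
print(plan_capacity(demand_vcpu=30, committed_vcpu=40, spot_available_vcpu=50))
```

The point of the ordering is that committed capacity is already paid for, so leaving it idle while launching other instances means paying twice.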

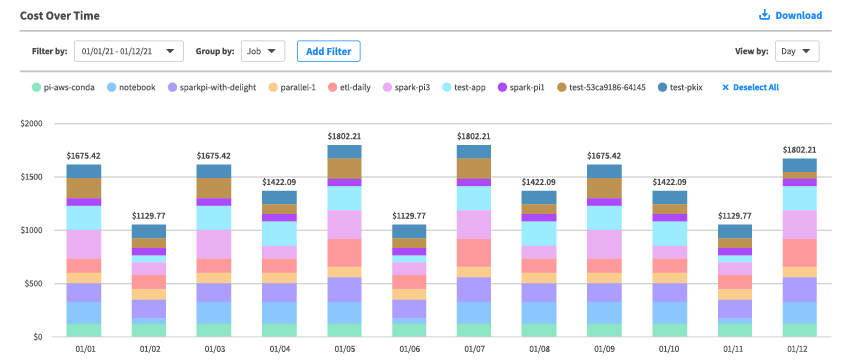

Comprehensive cost analysis

Get insights across compute, storage, and network resources, while analyzing application costs and enabling chargeback, all without extensive resource tagging.

User customizable

If desired, users can configure infrastructure parameters through infrastructure-as-code tools such as Terraform, kops, eksctl, and CloudFormation, through data analysis tools, or through Ocean’s UI or API.