Spot Ocean delivers container-driven autoscaling to continuously monitor and optimize your cloud environment. Positioned at a busy crossroads in the application deployment pipeline, Ocean has a critical role when shipping new containers. Given the highly dynamic nature of Kubernetes environments, events occur constantly, and Ocean records them as logs. These logs can help you understand the chain of events in different scaling scenarios, from debugging cluster issues to incident analysis.

In Ocean, these system logs are available through both the web console and a REST API. Users can build tooling around the API to pull log data and incorporate it into a centralized logging store. User feedback indicates that the autoscaling information generated by Ocean has significant value when incorporated into log monitoring pipelines. To enable customers to leverage this data across their APM (Application Performance Management) tools, we are enhancing Ocean’s data integration capabilities. Starting today, Ocean automates the continuous export of logs to AWS S3 storage.

AWS S3 Integration

This new integration allows a generic export of all Ocean’s logs into a predefined S3 bucket. These log files can then be easily pulled or pushed into different logging systems.

For example, S3 plugins for tools like ELK, New Relic, or Datadog can create a seamless streaming pipeline of Ocean logs.

For Ocean users, the new integration is designed as a managed service. It handles the continuous export of newly logged events every few minutes and prevents duplicate entries between files. Implementation is as easy as connecting Ocean to an existing S3 bucket.
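On the consuming side, a pipeline that polls the bucket only needs to remember which files it has already ingested, since Ocean keeps entries from repeating between files. The following is a minimal sketch of that idea, not part of the Ocean integration itself. The function names, the bucket prefix, and the example key names are hypothetical; the actual layout of the exported files should be checked in the bucket. Listing uses boto3 (a third-party package), imported lazily so the rest of the sketch runs without it.

```python
from typing import Iterable, List, Set


def list_log_keys(bucket: str, prefix: str = "") -> List[str]:
    """List object keys under a prefix (needs boto3 and AWS credentials)."""
    import boto3  # third-party; imported here so the sketch loads without it

    s3 = boto3.client("s3")
    keys: List[str] = []
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # "Contents" is absent when a page has no objects.
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys


def select_new_keys(keys: Iterable[str], seen: Set[str]) -> List[str]:
    """Return keys not ingested yet, sorted, and record them in `seen`."""
    fresh = sorted(k for k in set(keys) if k not in seen)
    seen.update(fresh)
    return fresh


# Two polling rounds with made-up key names: only the unseen file is returned
# the second time.
seen_keys: Set[str] = set()
first_round = select_new_keys(["ocean/a.log", "ocean/b.log"], seen_keys)
second_round = select_new_keys(["ocean/a.log", "ocean/b.log", "ocean/c.log"], seen_keys)
```

In a real pipeline, `seen_keys` would be persisted between runs (for example in a small state file or database) so that a restart does not re-ingest older files.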

Connect Ocean to S3

Establishing the integration is a two-step process:

- Define an S3 bucket connection: use the https://docs.spot.io/api/#operation/DataIntegrationCreate API call to define an existing bucket in S3 and give the connection an ID in order to use it in the next step. It is also possible to specify an existing subdirectory inside the bucket.

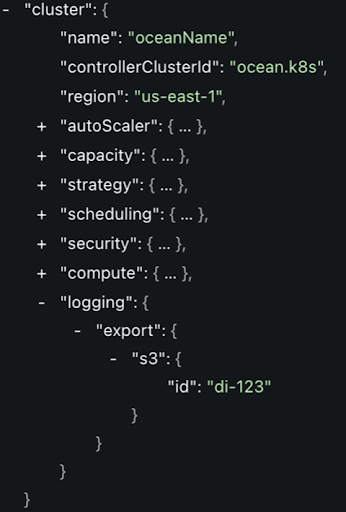

- Use the defined connection in each Ocean cluster whose logs you want to export. Do this by editing the Ocean entity with the new logging configuration.
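The two steps above can be sketched as a pair of HTTP requests. This is an illustration under stated assumptions rather than the documented request format: the API host, both paths, and every JSON field name (`dataIntegration`, `vendor`, `config`, `bucketName`, `subdir`, `logging.export.s3.id`) are assumptions, and the token, bucket, cluster ID, and connection ID are placeholders. Confirm the real schema in the Spot API reference before use. The code only builds the requests; it does not send them.

```python
import json
import urllib.request

# Assumption: API host and paths; confirm both in the Spot API reference.
SPOT_API = "https://api.spotinst.io"
DATA_INTEGRATION_PATH = "/insights/dataIntegration"
CLUSTER_PATH = "/ocean/aws/k8s/cluster/{cluster_id}"


def data_integration_body(name, bucket, subdir=None):
    """Step 1 payload: describe an existing S3 bucket (field names assumed)."""
    config = {"bucketName": bucket}
    if subdir:
        config["subdir"] = subdir  # optional existing subdirectory in the bucket
    return {"dataIntegration": {"name": name, "vendor": "s3", "config": config}}


def cluster_logging_body(integration_id):
    """Step 2 payload: point a cluster's logging export at the connection."""
    return {"cluster": {"logging": {"export": {"s3": {"id": integration_id}}}}}


def build_request(method, path, token, body):
    """Build an authenticated JSON request without sending it."""
    return urllib.request.Request(
        url=SPOT_API + path,
        data=json.dumps(body).encode("utf-8"),
        method=method,
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


# Placeholder values throughout; nothing is sent until urlopen() is called.
create = build_request(
    "POST", DATA_INTEGRATION_PATH, "YOUR_TOKEN",
    data_integration_body("ocean-logs", "my-log-bucket", "ocean"),
)
attach = build_request(
    "PUT", CLUSTER_PATH.format(cluster_id="o-12345678"), "YOUR_TOKEN",
    cluster_logging_body("di-12345678"),
)
```

Passing either request to `urllib.request.urlopen` would send it. The second request would be repeated for each cluster that should export logs, reusing the same connection ID.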

Ocean logs will appear as files in the S3 bucket. It is possible to use the same integration for multiple Ocean clusters. Please review the Spot Ocean documentation for additional details.

What’s Next?

This is just a first step in integrating Ocean log data to unlock its potential for the DevOps/SRE stack. We are working hard to provide additional native integrations for logs and metrics.

To learn about Ocean’s features for infrastructure automation, please visit our Ocean product page or create a Spot account to get started.