By Shon Harris, Developer Relations Lead at Spot, and Dhammika Sriyananda, AWS Sr. Partner Solutions Architect, AWS SaaS Factory

SaaS providers prefer to host their solutions centrally in the cloud as a single deployment unit and share them across all of their customers (tenants) in order to achieve strategic business objectives. To support this, SaaS solutions generally need cloud-managed services that help implement the necessary automation, security, and observability for their multi-tenant compute layers. AWS managed container services such as Amazon ECS (Elastic Container Service) and Amazon EKS (Elastic Kubernetes Service) support these features by taking the undifferentiated heavy lifting off SaaS providers. Further, these services support different multi-tenant strategies for isolating tenants while managing the underlying infrastructure efficiently, reliably, and at scale, making them a great fit for SaaS solutions.

Often, these workloads tend to be highly dynamic and unpredictable, especially when the SaaS business grows and scales with an increasing number of tenants. Therefore, SaaS providers require additional instrumentation to ensure optimized container resource allocation and auto-scaling for better performance and operational efficiency. Additionally, the need to have tenant-wise observability presents a unique challenge that requires further operational tooling within the compute layer.

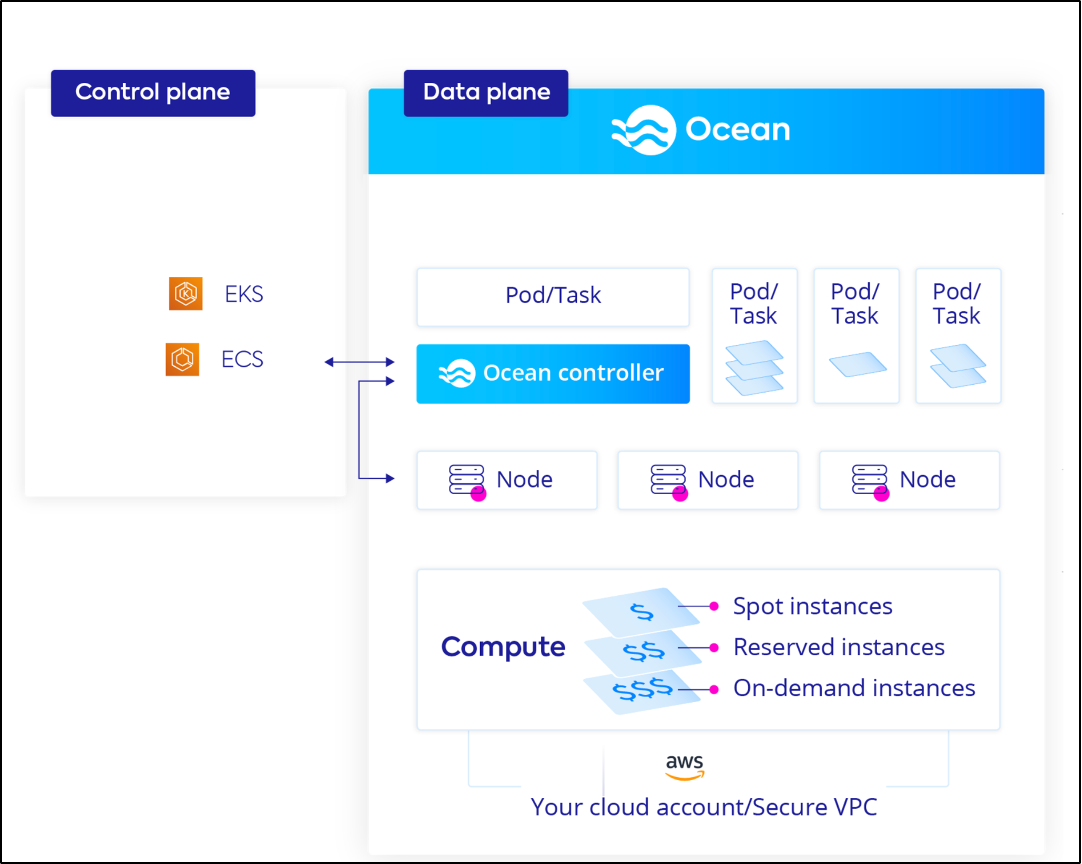

Spot Ocean from Spot is a managed solution for containerized workloads that is purpose-built to operate compute clusters with automated infrastructure optimization, reducing overall cost while maintaining the availability and performance of the workloads inside the cluster. Ocean uses advanced artificial intelligence (AI) and machine learning (ML) models, together with on-cluster near-real-time simulations of your workload, to choose the best instance types to launch and to scale the compute clusters. Ocean also brings machine-speed automation and visibility into the infrastructure usage of the container clusters running on ECS and EKS.

In this post, we will discuss how Spot Ocean can help SaaS providers optimize the resource utilization of ECS and EKS SaaS workloads, reducing the Total Cost of Ownership (TCO) and improving the operational efficiency of DevOps and Platform Engineering teams.

Let’s begin by exploring different SaaS architectures that we see within the AWS Container Service offerings.

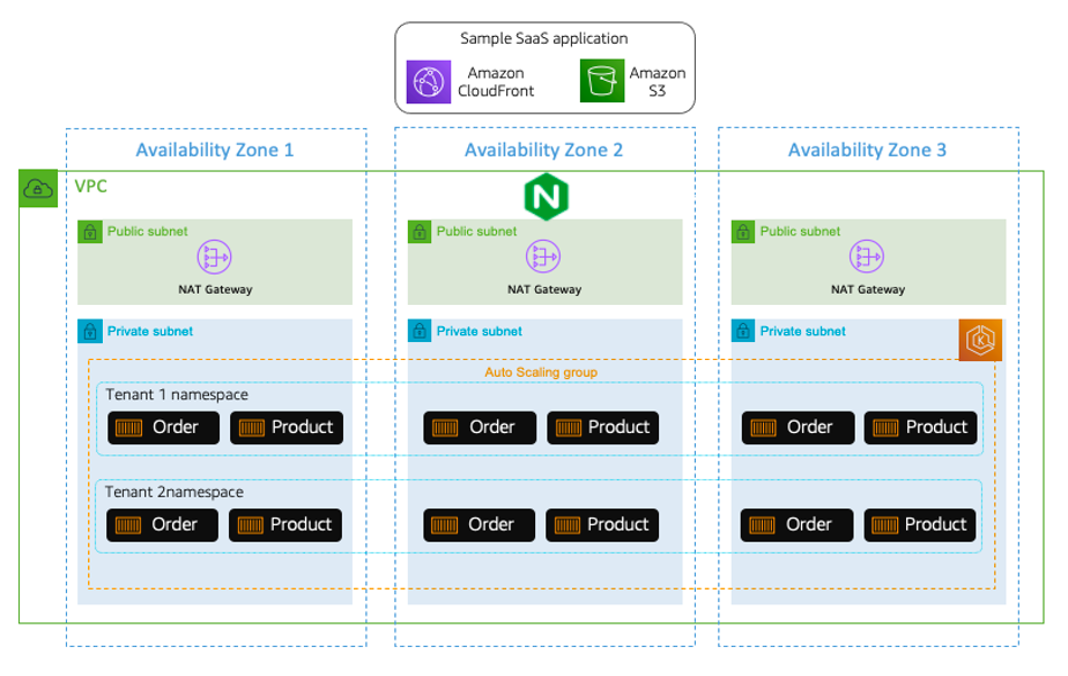

Building a multi-tenant SaaS with Amazon EKS

Amazon EKS provides flexibility to build multi-tenant SaaS solutions with native Kubernetes features. AWS SaaS Factory has built a fully integrated EKS reference architecture that provides implementation details for building SaaS on EKS. The core design pattern it describes is tenant-wise compute isolation using Kubernetes namespaces, as shown in the following diagram. More details can be found in this blog post.

Here, Kubernetes namespaces provide a boundary that groups the tenant-specific microservices within Amazon EKS. This enables tenant isolation through fine-grained network policies at the namespace level, using solutions like Tigera Calico. Further, resource requests and limits can be applied per namespace to control infrastructure consumption and contain potential noisy neighbors in the solution.
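To make the namespace-per-tenant pattern more concrete, here is a minimal sketch that generates the three kinds of objects discussed above for a single tenant: a namespace, a quota on requests and limits, and a network policy. The `tenant-<id>` naming, the quota values, and the `tenant_manifests` helper are assumptions for illustration only and are not taken from the reference architecture.

```python
import json


def tenant_manifests(tenant_id: str, cpu: str = "4", memory: str = "8Gi") -> list:
    """Build namespace-scoped isolation objects for one tenant."""
    namespace = f"tenant-{tenant_id}"  # hypothetical naming convention
    return [
        # The namespace is the boundary that groups the tenant's microservices.
        {
            "apiVersion": "v1",
            "kind": "Namespace",
            "metadata": {"name": namespace, "labels": {"tenant": tenant_id}},
        },
        # A ResourceQuota caps the total requests and limits in the namespace,
        # which bounds how much a noisy tenant can consume.
        {
            "apiVersion": "v1",
            "kind": "ResourceQuota",
            "metadata": {"name": "tenant-quota", "namespace": namespace},
            "spec": {
                "hard": {
                    "requests.cpu": cpu,
                    "requests.memory": memory,
                    "limits.cpu": cpu,
                    "limits.memory": memory,
                }
            },
        },
        # This NetworkPolicy selects every pod in the namespace and admits
        # ingress only from pods in the same namespace.
        {
            "apiVersion": "networking.k8s.io/v1",
            "kind": "NetworkPolicy",
            "metadata": {"name": "same-namespace-only", "namespace": namespace},
            "spec": {
                "podSelector": {},
                "policyTypes": ["Ingress"],
                "ingress": [{"from": [{"podSelector": {}}]}],
            },
        },
    ]


if __name__ == "__main__":
    # Emit a JSON List that `kubectl apply -f -` can read from stdin.
    doc = {"apiVersion": "v1", "kind": "List", "items": tenant_manifests("acme")}
    print(json.dumps(doc, indent=2))
```

Network policies only take effect when the cluster runs a network plugin that enforces them, such as Calico. A real deployment would likely also need a rule that admits traffic from the shared ingress or routing layer, which this sketch leaves out.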

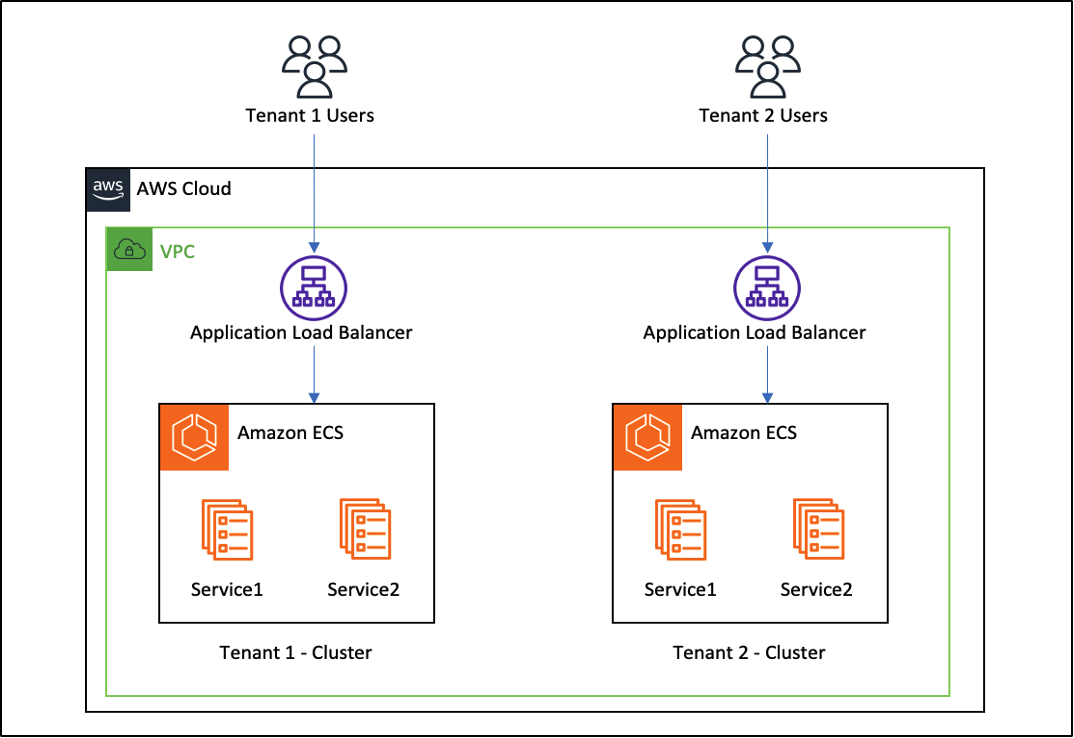

Building a multi-tenant SaaS with Amazon ECS

Amazon ECS allows customers to create ECS clusters at no cost and charges only for the actual compute workloads that are deployed within the clusters. Therefore, organizations can leverage Amazon ECS clusters in their SaaS solutions to isolate compute workloads tenant-wise. This provides a great tenant isolation strategy to build SaaS with no additional cost or instrumentation.

Here, each tenant is assigned a dedicated ECS cluster with its own set of microservices deployed separately. This simplifies the tenant routing components, controls noisy neighbors, and makes it easy to meter tenant-specific compute resource consumption without any additional tooling or configuration in the architecture.
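As a rough illustration of the cluster-per-tenant pattern, the sketch below creates one ECS cluster per tenant and tags it with the tenant ID. It assumes a client shaped like `boto3.client("ecs")` and uses that client's `create_cluster` call. The `saas-<tenant>` naming, the `tenant` tag key, and the `StubEcsClient` class are hypothetical and exist only so the example runs without AWS access.

```python
def provision_tenant_cluster(ecs_client, tenant_id: str) -> str:
    """Create the dedicated ECS cluster for one tenant and return its ARN."""
    cluster_name = f"saas-{tenant_id}"  # hypothetical naming convention
    response = ecs_client.create_cluster(
        clusterName=cluster_name,
        # Tag the cluster with the tenant ID so reporting can key on it.
        tags=[{"key": "tenant", "value": tenant_id}],
    )
    return response["cluster"]["clusterArn"]


class StubEcsClient:
    """Stand-in for boto3.client("ecs") so the sketch runs offline."""

    def create_cluster(self, clusterName, tags):
        arn = f"arn:aws:ecs:us-east-1:111122223333:cluster/{clusterName}"
        return {"cluster": {"clusterArn": arn, "clusterName": clusterName, "tags": tags}}


if __name__ == "__main__":
    # With real credentials, pass boto3.client("ecs") instead of the stub.
    print(provision_tenant_cluster(StubEcsClient(), "acme"))
```

Passing the client in as an argument keeps tenant onboarding logic testable and makes it easy to swap in a real client later.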

While these patterns are great and well-received by SaaS partners and customers, there is still room to optimize overall compute performance and cost while improving operational efficiency. And that is where the Ocean platform from Spot comes in.

Let’s explore how Spot Ocean can be integrated to streamline multi-tenant SaaS solutions running on Amazon ECS and Amazon EKS.

Overview of Spot Ocean: The serverless infrastructure container engine

Ocean from Spot is a managed solution that is purpose-built to help you manage workloads in EKS and ECS. Ocean not only chooses the best blend of instance types for your unique workloads but also brings a new level of automation and visibility. In terms of visibility, Ocean provides a unique 360-degree view of your costs, including compute, storage, and network. Considering the importance of increasing automation and repeatability in these environments, Ocean automatically replaces unhealthy nodes, offers a rolling nodes option, and performs automatic upgrades for EKS clusters. Lastly, Ocean is an enterprise-grade product that provides 24/7 immediate support and active monitoring from a NOC team to identify performance and infrastructure anomalies while ensuring that you have a monitored SLA for your nodes.

How Spot benefits multi-tenant and SaaS workloads

Cluster-level automation

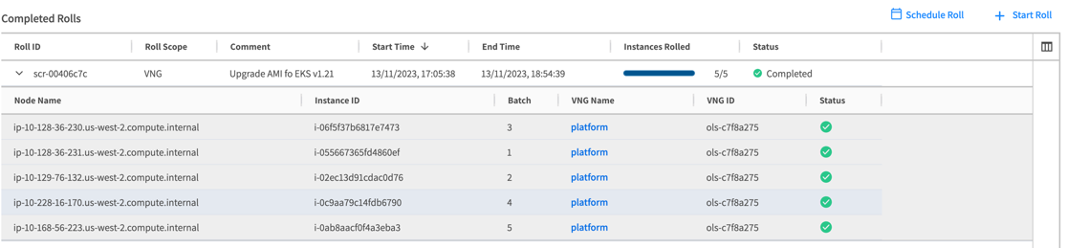

Ocean is a powerful Kubernetes automation solution designed to free DevOps and Platform Engineering teams from manual infrastructure management and provisioning tasks. The key benefit is that these teams can devote their time to more strategic and higher-priority work. For example, once you change your user data scripts or the AMI your cluster is using, how can you ensure that the change is gracefully applied? With Ocean, you can use a single command via the interface or command line tools to roll the entire cluster.

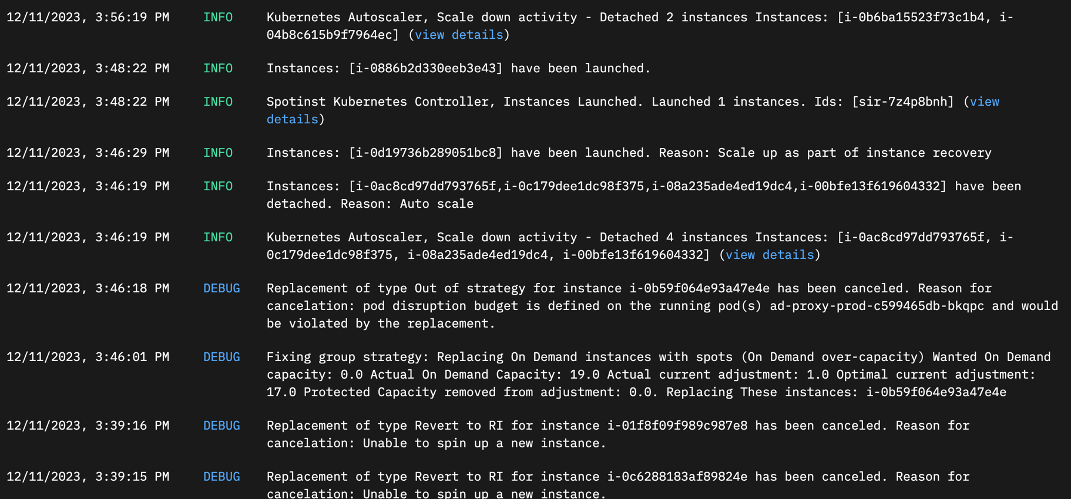

What if a node is not healthy? Because of the proactive workload monitoring, your infrastructure will now have a trusted set of eyes to identify and replace the unhealthy node without knocking services offline that could impact customers in a multi-tenant workload or SaaS application. The advanced bin-packing algorithm will begin moving pods around and replacing the unhealthy node(s) with no interaction necessary from an engineer.

This strategy replaces manual “click-ops” tasks while reducing the time to identify and resolve an issue with machine-learning-driven automation and repeatability.

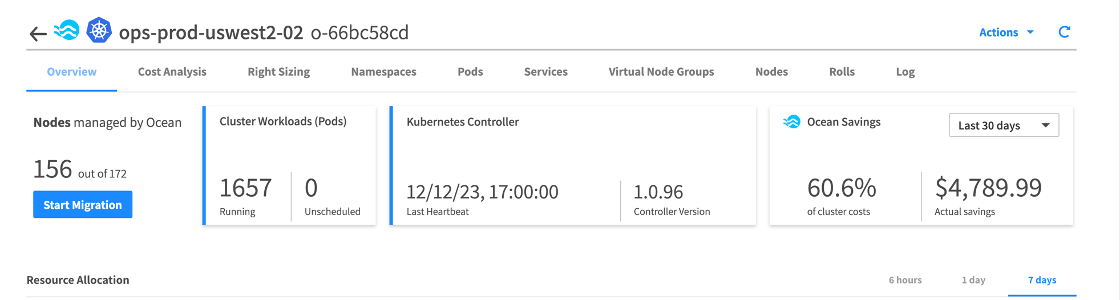

Visibility

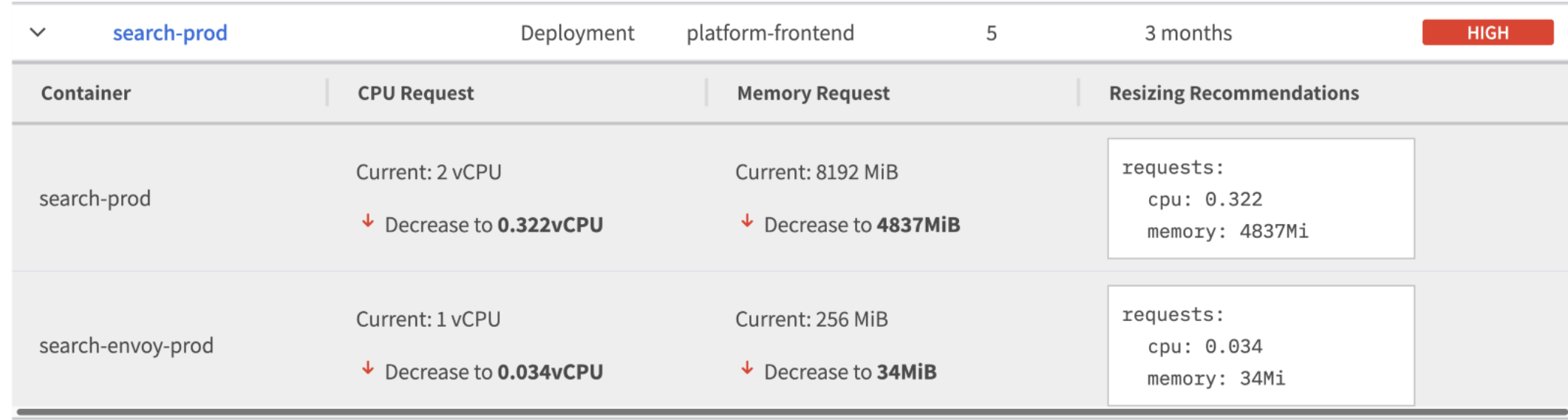

How much does my specific workload cost? What about the network egress costs of a specific pod or VNG (Virtual Node Group)? How do you answer those questions today? Because Ocean is built specifically to help with this issue, it gives you a clear UI that shows all of that information, along with the ability to automate further using the API provided out of the box. Another challenge that Ocean was built to solve is understanding the request rates and performance of your containers. Ocean answers that for you with right-sizing recommendations.

Cost

Visualizing and keeping the cost of your workload(s) under control is a constant challenge for any user in the cloud, even more so when you need to charge and show back cost data to specific tenants and customers. Ocean brings you visibility at the application level while ensuring continuous optimization for both performance and cost, and it provides a real-time view into the automation occurring behind the scenes.

Behind the scenes: How Ocean works

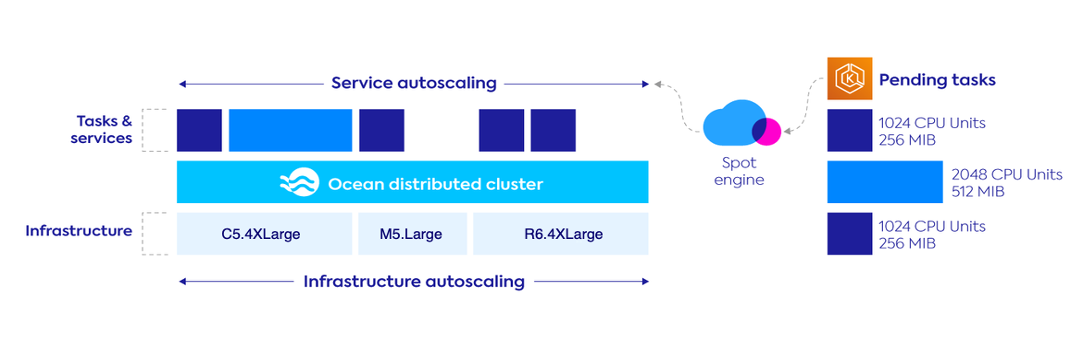

Ocean collects information about your workload(s) from a lightweight controller installed in your cluster(s) during onboarding. Using this information about the state of the pods and the nodes, Ocean runs simulations and applies its advanced ML-based algorithm to recommend not only the optimally sized instances for the workload but also the optimal alignment for the pods being packed into those nodes.

Before a call to scale up, Ocean continuously monitors the cluster, including the daemon sets running on each node, and simulates potential performance impacts to determine which instance types and sizes will be best for your pods, factoring in key concepts like cost, availability, and node and pod density. Similarly, the Ocean autoscaler constantly checks whether it is possible to bin-pack the cluster more tightly while maintaining operational performance and making the best use of your K8s infrastructure’s processor, memory, and network bandwidth.
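For readers new to bin-packing, the following sketch shows the general idea using first-fit decreasing, a classic textbook heuristic. It is not Ocean's algorithm, which the post describes only at a high level. The `Pod`, `Node`, and `pack` names and the single fixed node size are assumptions made to keep the example small.

```python
from dataclasses import dataclass, field
from typing import NamedTuple


class Pod(NamedTuple):
    name: str
    cpu: int  # requested vCPU
    mem: int  # requested memory in GiB


@dataclass
class Node:
    cpu: int  # remaining vCPU
    mem: int  # remaining memory in GiB
    pods: list = field(default_factory=list)

    def fits(self, pod: Pod) -> bool:
        return pod.cpu <= self.cpu and pod.mem <= self.mem

    def place(self, pod: Pod) -> None:
        self.cpu -= pod.cpu
        self.mem -= pod.mem
        self.pods.append(pod.name)


def pack(pods, node_cpu: int, node_mem: int) -> list:
    """First-fit decreasing: place the largest pods first, open a node only when needed."""
    nodes = []
    for pod in sorted(pods, key=lambda p: (p.cpu, p.mem), reverse=True):
        target = next((n for n in nodes if n.fits(pod)), None)
        if target is None:
            target = Node(node_cpu, node_mem)
            if not target.fits(pod):
                raise ValueError(f"pod {pod.name} does not fit on an empty node")
            nodes.append(target)
        target.place(pod)
    return nodes


if __name__ == "__main__":
    workload = [
        Pod("api", 2, 4), Pod("web", 2, 4),
        Pod("worker", 1, 2), Pod("cron", 1, 2), Pod("batch", 1, 2),
    ]
    for i, node in enumerate(pack(workload, node_cpu=4, node_mem=8)):
        print(i, node.pods, "free:", node.cpu, "vCPU,", node.mem, "GiB")
```

A heuristic like this is fast but not guaranteed to find the smallest number of nodes. A production autoscaler also has to weigh factors the text mentions, such as daemon sets, instance type choice, cost, and availability.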

While scale-up events and being prepared for increased utilization are important, Ocean also gives you the flexibility to control the aggressiveness of the scale-down events to ensure your expensive infrastructure is not needlessly sitting idle.

While Kubernetes provides a built-in priority scheduler at the pod level and a cluster-overprovisioner, these values are static. So, what happens if your resource utilization grows (or shrinks) over time? Do you have processes in place to adjust these values? You could find yourself on the wrong side of the Kubernetes headroom challenge. On top of that, there is a learning curve to administering the cluster-overprovisioner, and if it is configured incorrectly, pods could be set with the wrong priority for eviction or rebalancing. This is what the Ocean Headroom automation was built to solve: you can define specific values for your headroom, or allow Ocean to use the performance data to manage it for you automatically based on the simulations from the controller.
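To illustrate what manually defined headroom means in practice, here is a small worked sketch. It treats headroom as a number of spare "units", each with a CPU and memory size, and counts how many whole units fit into the free capacity of each node, since a pending pod cannot be split across nodes. The function and parameter names are assumptions for illustration and do not describe Ocean's internal calculation.

```python
def headroom_units_available(free_per_node, cpu_per_unit: int, mem_per_unit: int) -> int:
    """Count whole headroom units that fit in each node's spare capacity.

    free_per_node is a list of (free_millicores, free_mib) tuples.
    """
    return sum(
        min(free_cpu // cpu_per_unit, free_mem // mem_per_unit)
        for free_cpu, free_mem in free_per_node
    )


def headroom_shortfall(free_per_node, cpu_per_unit: int, mem_per_unit: int, wanted_units: int) -> int:
    """Return how many more units of spare capacity are needed to meet the target."""
    available = headroom_units_available(free_per_node, cpu_per_unit, mem_per_unit)
    return max(0, wanted_units - available)


if __name__ == "__main__":
    # Three nodes with different amounts of free CPU (millicores) and memory (MiB).
    free = [(1500, 3072), (500, 4096), (2000, 1024)]
    print(headroom_units_available(free, cpu_per_unit=500, mem_per_unit=1024))
    print(headroom_shortfall(free, cpu_per_unit=500, mem_per_unit=1024, wanted_units=6))
```

With static values like these, someone has to revisit the unit size and count as the workload changes, which is the maintenance burden the automatic option is meant to remove.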

Using your specific workload’s metrics, in addition to the simulations that the controller constantly performs to maintain optimal pod and node alignment, Ocean can optimize for cost and provide right-sizing visualizations that help you better automate the management of your environments. Ocean learns the current state within hours of deployment, giving you a holistic view of performance, issues, and the fully loaded cost on a workload-by-workload level.

5 ways Ocean enables you to do more with your EKS and ECS compute resources

1. Utilizing the best lifecycle

Ocean is purpose-built to address the burden of manually managing Kubernetes environments. So, while Ocean enables production stability on Spot Instances, that isn’t the only level of optimization we provide. Ocean is designed to assist in choosing the best lifecycle for your workload. For example, if you have RIs (Reserved Instances) that are not in use, Ocean will utilize them before scaling up a Spot Instance. The same goes for Savings Plans. If Spot Instances, RIs, or Savings Plans are not available, we will intelligently launch an on-demand (OD) instance. And as soon as Ocean can find a better price or instances that can burn down RI and Savings Plan commitments, we will move to them as fast as possible to lower your cost.
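The preference order described above can be sketched as a simple fallback chain. This is only an illustration of the ordering in the text, not Ocean's decision logic. The lifecycle names and the `choose_lifecycle` function are hypothetical, and placing RIs ahead of Savings Plans is an assumption, because the text does not rank the two against each other.

```python
from typing import Mapping

# Order taken from the text; RI-before-Savings-Plan is an assumption.
PREFERENCE = ("reserved_instance", "savings_plan", "spot", "on_demand")


def choose_lifecycle(available: Mapping[str, bool]) -> str:
    """Pick the first available lifecycle, falling back to on-demand."""
    for lifecycle in PREFERENCE[:-1]:
        if available.get(lifecycle, False):
            return lifecycle
    # On-demand is the fallback when nothing cheaper is available.
    return "on_demand"


if __name__ == "__main__":
    print(choose_lifecycle({"reserved_instance": True, "spot": True}))
    print(choose_lifecycle({"spot": True}))
    print(choose_lifecycle({}))
```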

2. Pod-driven autoscaling

Behind the scenes, Ocean constantly simulates and identifies the infrastructure requirements and instance sizes needed to scale to meet the demands of your unique workload. The autoscaler also considers the probability of interruptions in that market, which it predicts with 95% accuracy. This ensures that Ocean will scale the best instance type in terms of availability and price. If you wish to adjust the way the autoscaler works, it is totally up to you. You can set the cluster orientation to “cost” so that the main factor in scaling decisions is operating at the lowest cost possible. Alternatively, you can select “performance” so that the autoscaler always leans toward performance over cost.

3. Automation

Ocean is constantly scanning your clusters and identifying opportunities for better performance or lower cost, such as switching to a smaller instance type or family. In the case of an unhealthy node, Ocean will take care of it proactively and begin the process to replace it.

4. Visibility

Ocean provides full visibility into cost and architecture, with a view of all pods and nodes, quick filters, and a clear UI. Metrics are exported to Prometheus so that you can see exactly what automation decisions were made and set up alerts about anomalies and other functions based on your department’s needs.

5. Identifying and eliminating waste

Cloud workloads are notorious for enabling waste. The Spot platform is purpose-built to identify and prevent waste and to ensure that your workloads always have the resources they need, specifically by predicting interruptions and transferring the endangered resources to a safer market or a better-suited cost mechanism.

Spot Ocean is built in close partnership with AWS. This partnership has enabled Spot to integrate directly into ECS and EKS using the AWS Marketplace. Ocean is also built to meet you where you are on your journey, which is why Spot has worked to provide integrations with popular infrastructure tools like Ansible, Terraform, Pulumi, and a fork of ecsctl called spotctl. Ocean also integrates into the AWS CLI, CDK, and SDKs.

Adding Ocean support for an Amazon EKS SaaS cluster

You can follow the instructions given here to add Ocean support to an EKS SaaS compute layer. That will install the lightweight controller, which communicates the state of the cluster to Ocean. If you wish to use any of the built-in Ocean labels for scaling limitations, you need to add them at the pod level.
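As a sketch of what adding a label at the pod level looks like, the helper below patches the pod template of a Deployment manifest rather than the Deployment's own metadata. The label key shown is our recollection of an Ocean scale-down restriction label and should be treated as an assumption. Confirm the exact name and accepted values in the current Spot documentation before using it. The `with_pod_label` helper and the sample manifest are hypothetical.

```python
import copy

# Assumed label key, recalled from Spot's Ocean documentation; verify before use.
RESTRICT_SCALE_DOWN = "spotinst.io/restrict-scale-down"


def with_pod_label(deployment: dict, key: str, value: str) -> dict:
    """Return a copy of a Deployment manifest with a label on its pod template."""
    patched = copy.deepcopy(deployment)
    template = patched["spec"]["template"]
    # Labels on spec.template.metadata end up on the pods themselves.
    template.setdefault("metadata", {}).setdefault("labels", {})[key] = value
    return patched


if __name__ == "__main__":
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "orders", "namespace": "tenant-acme"},
        "spec": {
            "selector": {"matchLabels": {"app": "orders"}},
            "template": {
                "metadata": {"labels": {"app": "orders"}},
                "spec": {"containers": [{"name": "orders", "image": "orders:1.0"}]},
            },
        },
    }
    patched = with_pod_label(deployment, RESTRICT_SCALE_DOWN, "true")
    print(patched["spec"]["template"]["metadata"]["labels"])
```

Kubernetes label values are strings, which is why the value is passed as `"true"` rather than a boolean.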

Metering tenant-wise metrics is a critical function in multi-tenant and SaaS environments. Ocean provides an exporter for Prometheus, enabling the ability to slice and dice the metrics as you wish. Find more details here. In addition to the Prometheus export functionality, there is a Grafana dashboard you can use here.

We find that multi-tenant clients are often separated, with each tenant placed into a specific VNG. Inside Ocean’s metrics, the VNG name and ID are displayed individually so that each tenant can be monitored and reported on separately.
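When each tenant maps to its own VNG, tenant-level reporting reduces to grouping metric samples by the VNG label. The sketch below shows that grouping on already-scraped samples. The `vng_id` and `vng_name` label names, the sample values, and the `totals_by_tenant` function are assumptions for illustration, so check the exporter's actual metric and label names before building on this.

```python
from collections import defaultdict


def totals_by_tenant(samples, vng_to_tenant):
    """Sum metric samples per tenant, using the VNG ID label as the join key."""
    totals = defaultdict(float)
    for labels, value in samples:
        # Samples from VNGs that are not mapped to a tenant are kept visible.
        tenant = vng_to_tenant.get(labels.get("vng_id"), "unassigned")
        totals[tenant] += value
    return dict(totals)


if __name__ == "__main__":
    samples = [
        ({"vng_id": "vng-111", "vng_name": "acme"}, 1.5),
        ({"vng_id": "vng-111", "vng_name": "acme"}, 2.25),
        ({"vng_id": "vng-222", "vng_name": "globex"}, 4.0),
        ({"vng_id": "vng-999", "vng_name": "shared"}, 0.5),
    ]
    mapping = {"vng-111": "acme", "vng-222": "globex"}
    print(totals_by_tenant(samples, mapping))
```

Keeping an explicit "unassigned" bucket makes shared or unmapped capacity show up in reports instead of silently disappearing.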

Since flexibility is the main aspect of the Spot platform’s user experience, there are multiple ways to connect existing EKS observability tools that may be in use, alongside built-in observability for individual workloads at the cluster, VNG, or individual instance level. Operators can make changes inside the environment directly, or enable and manage the environment from the Spot console or with popular tools and integrations to automate it even further.

“It has been seamless for us. We don’t spend time worrying about underlying resources since we moved to Ocean. It’s a very big win for us because most of our apps are now stateless and we don’t have to do hands-on infrastructure management. We are almost always at 80% cluster utilization, which is where we want to be. As soon as it hits 85%, Ocean takes care of scaling up.”

— Ganesh Narasimhadevara, Director of DevSecOps and Platform Engineering, ZestMoney

Adding Ocean support for an Amazon ECS SaaS cluster

Out of the box, and as part of the same onboarding experience, you can deploy Ocean to your ECS workloads as well. Ocean monitors incoming tasks and adjusts the size of the cluster based on the constraints set at onboarding. This ensures that cluster resources are sized and utilized correctly, and it scales down underutilized instances to deliver maximum performance at the lowest possible compute cost.

As tasks are submitted using the AWS CLI, API calls, or the console, Ocean analyzes the cluster and determines its readiness for the incoming job, all while constantly running simulations on the cluster to optimize resources using our bin-packing algorithms. As part of this same simulation process, when Ocean identifies over-provisioned resources for the workloads, the cluster automatically begins a scale-back process, all while respecting the constraints, taints, and cluster configuration, similar to how Ocean works on EKS.

“Spot Ocean automates cluster optimization for us, rapidly scaling nodes up and down as needed and bin-packing the remaining pods. This increased our compute utilization and cost efficiency by 20%.”

— Matthew Zeler, Senior Director of Production Engineering and Operations, Lacework

Conclusion

There are different strategies that can be leveraged in building SaaS on AWS with Amazon EKS and Amazon ECS. While these strategies provide a strong value proposition for building SaaS, there is always room to optimize cost and improve operational efficiency, and the Ocean platform from Spot provides mechanisms to improve these areas for SaaS workloads running on AWS.

Without intensive and detailed DevOps management, Kubernetes can quickly become inefficient and lead to a significant waste of cloud resources, and eventually, DIY approaches become unsustainable. Ocean is more than just a pod autoscaling tool. It is a purpose-built solution designed to take away the headaches around the operational complexity that Kubernetes and microservices introduce, specifically when architecting a new multi-tenant platform. Automation and repeatability are two key concepts that must be considered when developing a service or product that eventually will scale; Ocean enables this from day one. Turning manual processes into automated functions will empower your development teams to be 41% more productive and deliver your products to market 26% faster at 53% lower costs for your infrastructure.

Ocean is just one part of the Spot suite of solutions that can benefit building SaaS applications on AWS. When combined with Spot Eco (FinOps), Elastigroup (Virtual Machines and Load Balancers), and even an innovative CI/CD tool specifically designed for Kubernetes Workloads (Ocean CD), and more recently an anomaly detection and response tool (Spot Security), your organization can benefit from a single pane of glass for observability, cost, and infrastructure management that is uniquely suited for the needs of the modern SaaS vendor.

About Spot

Spot helps companies optimize and simplify their cloud environments by introducing automated continuous optimization. Learn more at spot.io and explore the Spot documentation, API references, and step-by-step labs by visiting developer.netapp.com.

Join the NetApp Official Discord Servers for conversation, help, and discussions about CloudOps best practices with our subject matter experts and fellow customers at netappdiscord.com.

About AWS SaaS Factory

AWS SaaS Factory helps organizations at any stage of the SaaS journey. Whether looking to build new products, migrate existing applications, or optimize SaaS solutions on AWS, we can help. Visit the AWS SaaS Factory Insights Hub to discover more technical and business content and best practices.

Sign up to stay informed about the latest SaaS on AWS news, resources, and events.