We recently announced a new version of Ocean for Azure Kubernetes Service (AKS), so I decided to migrate some of our own production services to it. The service was already running on native AKS, but we wanted Spot’s Ocean for AKS to manage it more effectively and, as they say, to drink our own champagne.

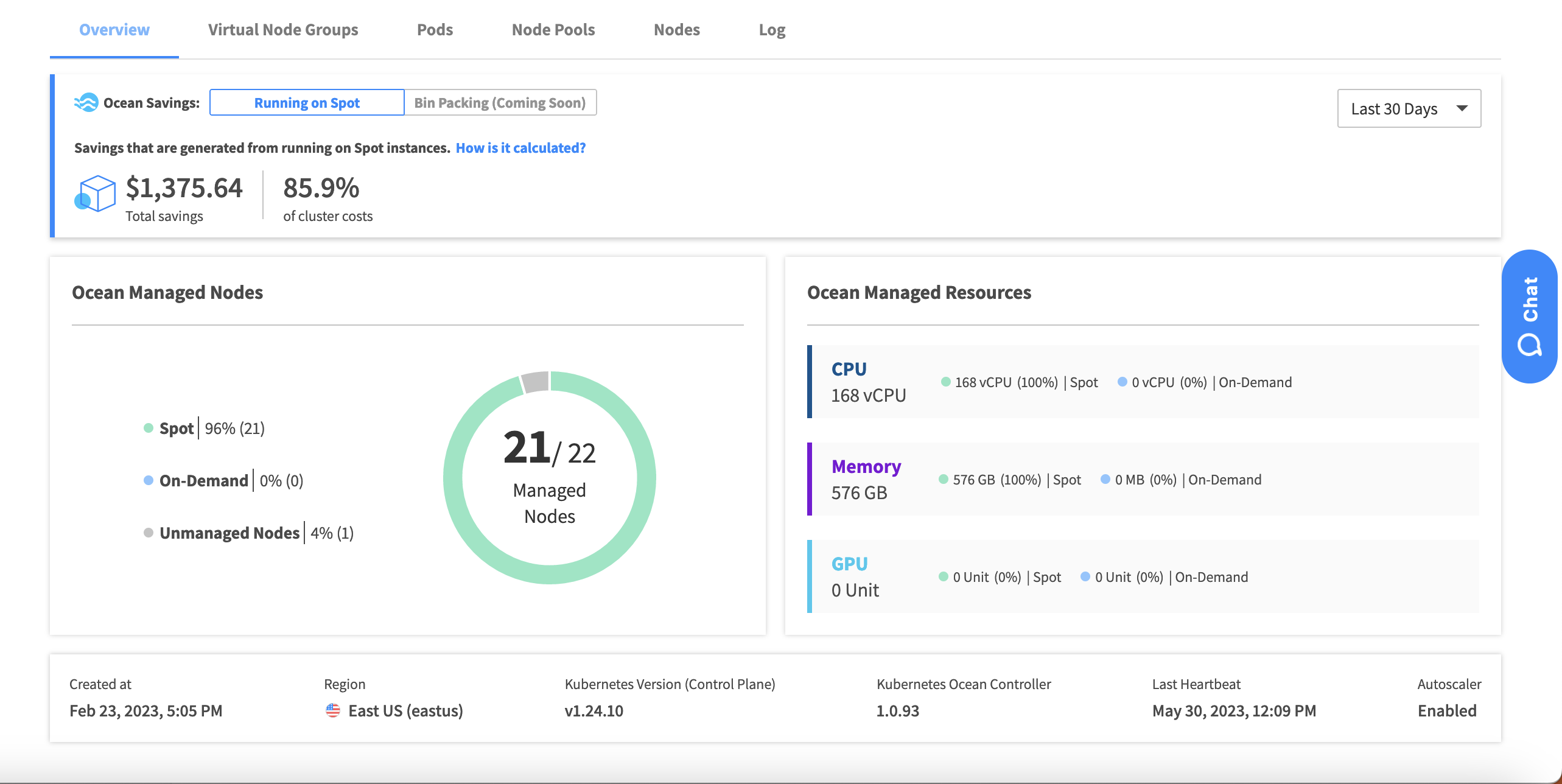

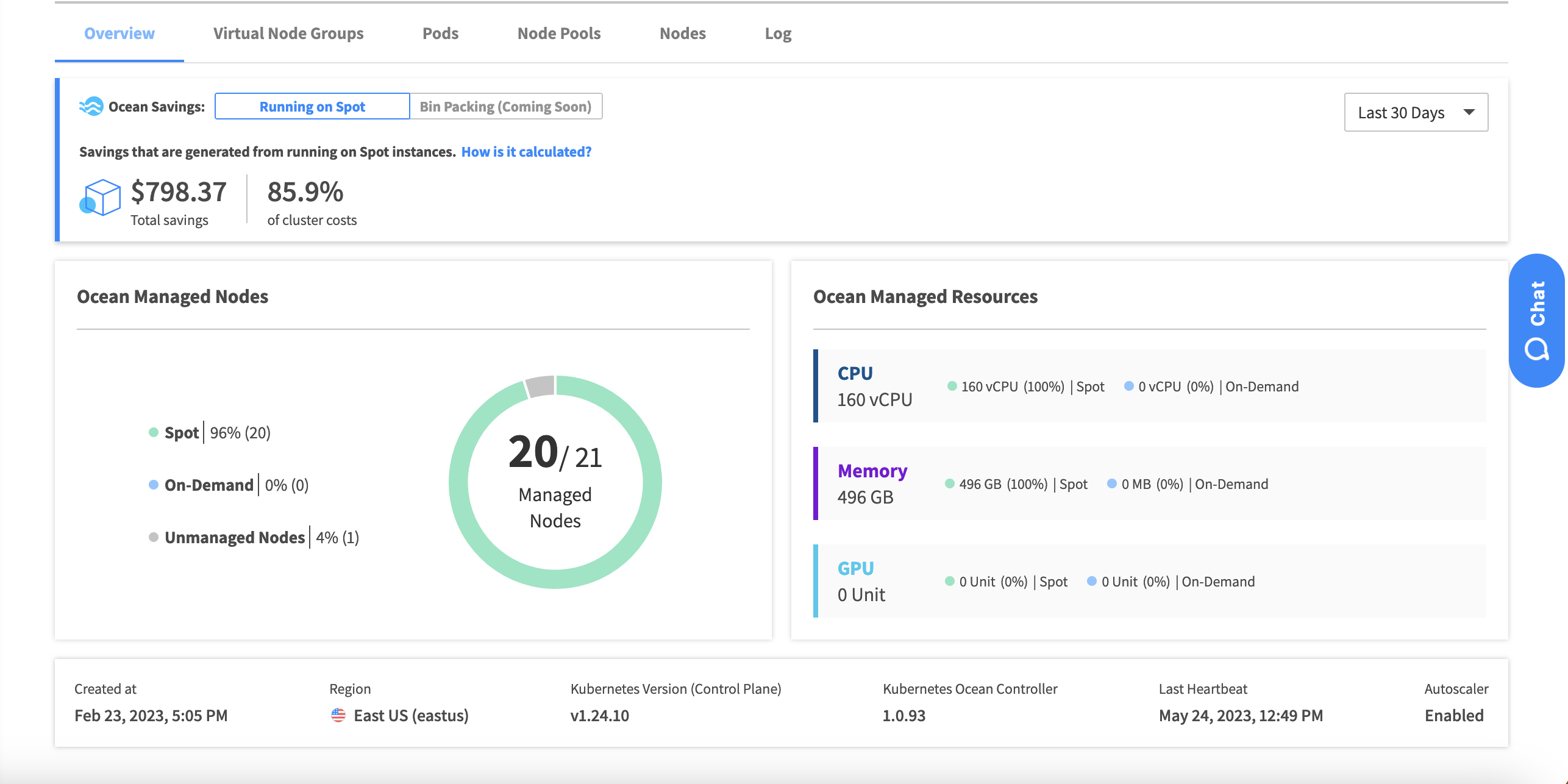

The outcome has been impressive: I reduced our spending on this AKS cluster by 85%. The results are shown in the screen capture.

Interested? Learn how you can take these same steps in just five minutes.

Our AKS production cluster configuration was as follows:

- Network type (plugin): Azure CNI

- Node pools: 2 node pools (User, System)

- Node sizes: Standard_D8_v3, Standard_D8_v4, and Standard_D8as_v4

- Region: East US (eastus)

- Network Policy: Default

- Identity: managed identities (formerly Managed Service Identity)

- Autoscaling: Enabled
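To capture the same details for your own cluster before migrating, the Azure CLI can report them directly. The following is a minimal Bash sketch, assuming you are already signed in with `az login`; `show_cluster_config` is a hypothetical helper name, and the resource group and cluster names you pass to it are placeholders.

```shell
#!/usr/bin/env bash
# Print the cluster-level and node-pool settings summarized above.
# Usage: show_cluster_config <resource-group> <cluster-name>   (placeholders)
show_cluster_config() {
  local rg="${1:?resource group required}" cluster="${2:?cluster name required}"

  # Network plugin and policy, region, and identity type of the cluster.
  az aks show --resource-group "$rg" --name "$cluster" \
    --query "{plugin:networkProfile.networkPlugin, policy:networkProfile.networkPolicy, region:location, identity:identity.type}" \
    --output table

  # One row per node pool: mode (System/User), VM size, autoscaler state, node count.
  az aks nodepool list --resource-group "$rg" --cluster-name "$cluster" \
    --query "[].{name:name, mode:mode, size:vmSize, autoscale:enableAutoScaling, count:count}" \
    --output table
}
```

For example, `show_cluster_config my-rg my-aks` prints one table for the cluster and one row per node pool.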

This production application consists of a front end and a back end that developers use to create and manage test, staging, and production environments. Node utilization in the cluster was poor, and costs kept rising. Because budget constraints also capped the number of nodes, pods frequently remained unscheduled until other jobs finished and freed capacity.

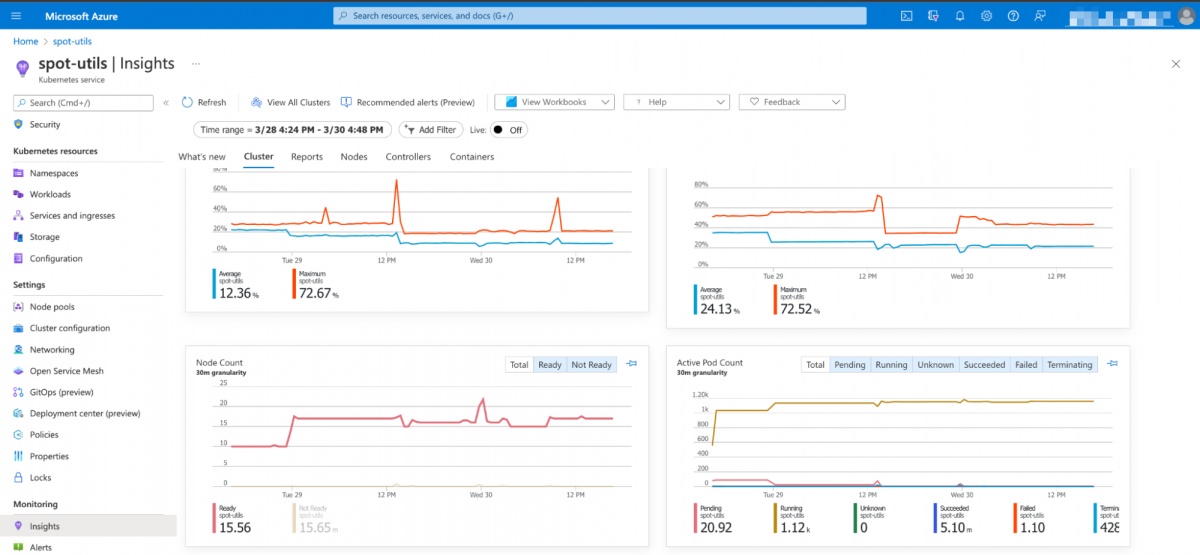

These metrics show the state of the AKS cluster before the migration to Spot Ocean.

Pods were frequently pending, while average memory utilization stood at just 24% and average CPU utilization at only 12.36%.
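The same symptoms can be checked from the command line. A minimal sketch, assuming `kubectl` is already pointed at the cluster; the helper names are hypothetical. Note that `kubectl top` reports live usage rather than resource requests, and it relies on metrics-server, which AKS normally runs in `kube-system`.

```shell
#!/usr/bin/env bash
# Count pods stuck in Pending across all namespaces.
count_pending_pods() {
  # "No resources found" goes to stderr, so an empty result counts as 0.
  kubectl get pods --all-namespaces --field-selector=status.phase=Pending \
    --no-headers 2>/dev/null | wc -l | tr -d ' '
}

# Show current CPU and memory usage per node (needs metrics-server).
show_node_usage() {
  kubectl top nodes
}
```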

Ocean is designed to optimize node utilization and reduce cluster costs while maintaining high availability and performance. Given this cluster’s underutilized resources, pending pods, and budget constraints, I decided to migrate it to Spot Ocean for AKS.

Configuring and connecting to Ocean

Before connecting the AKS cluster to Ocean, I followed the steps outlined in the Spot Ocean documentation:

- Connected an Azure account to Spot.

- Verified access to our AKS cluster.

- Installed and configured kubectl (the Kubernetes command-line tool).
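The access check can be scripted as well. A minimal sketch, assuming the Azure CLI is installed and signed in; `verify_aks_access` is a hypothetical helper, and if kubectl is missing, `az aks install-cli` can install it.

```shell
#!/usr/bin/env bash
# Verify CLI prerequisites and cluster access before importing into Ocean.
# Usage: verify_aks_access <resource-group> <cluster-name>   (placeholders)
verify_aks_access() {
  local rg="${1:?resource group required}" cluster="${2:?cluster name required}"

  # kubectl must be on PATH; "az aks install-cli" can install it if missing.
  command -v kubectl >/dev/null 2>&1 || { echo "kubectl not found" >&2; return 1; }

  # Merge the cluster's credentials into ~/.kube/config and select its context.
  az aks get-credentials --resource-group "$rg" --name "$cluster" --overwrite-existing || return 1

  # A successful node listing confirms the credentials work.
  kubectl get nodes --output wide
}
```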

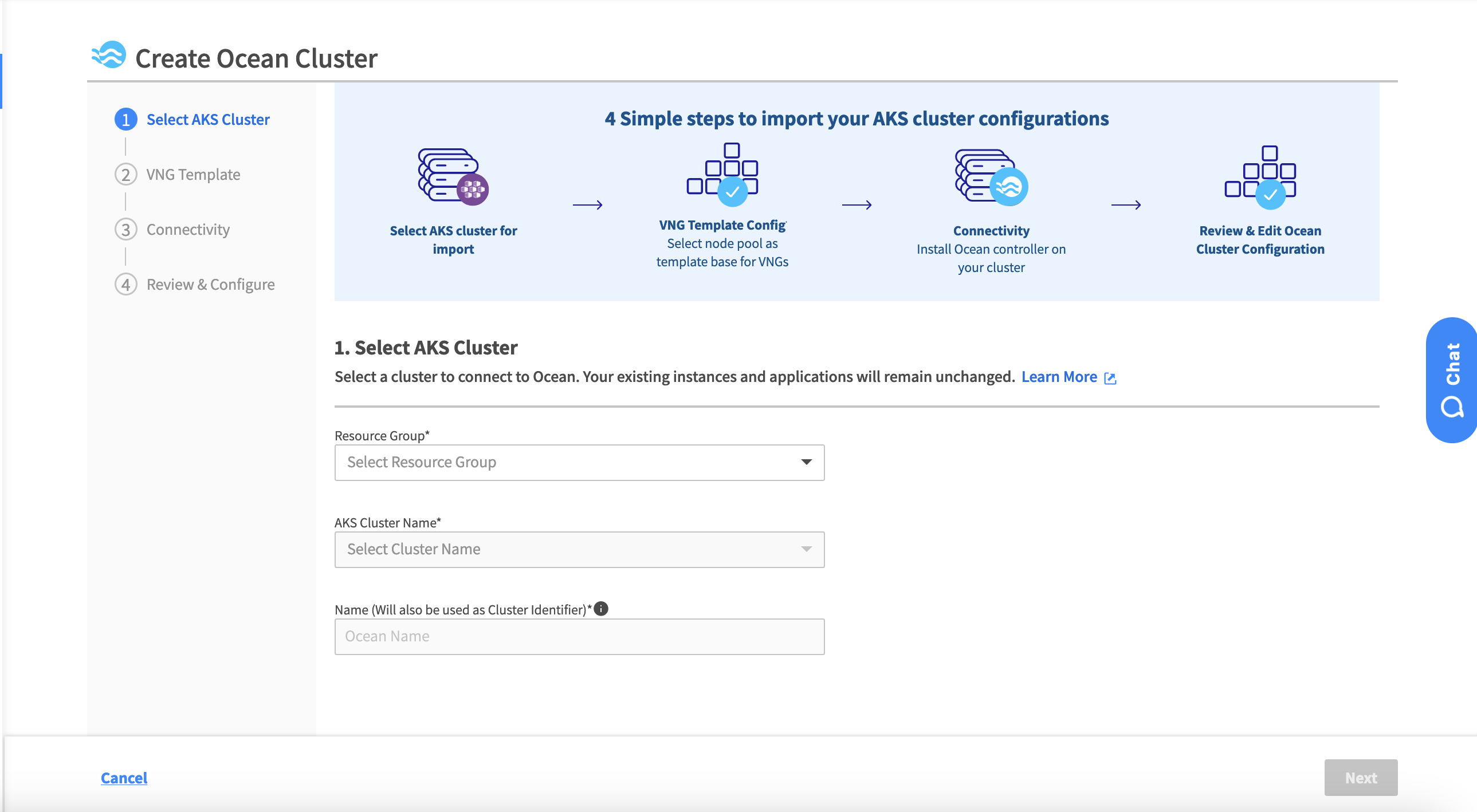

Importing the AKS cluster into Ocean is straightforward and takes only a few clicks:

- Find the cluster you want to manage with Ocean.

- Select an existing node pool to generate a Virtual Node Group (VNG) template.

- Generate a token.

- Install the Ocean controller on the cluster by running the provided command.

- Review the configuration you defined in previous steps (JSON format).
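After running the install command, you can confirm that the controller pod is up before moving on. This is a sketch only: the default name pattern `ocean|spot` is an assumption rather than the documented pod name, so pass the namespace or name shown by the install command you actually ran. The helper name is hypothetical.

```shell
#!/usr/bin/env bash
# List pods whose line matches a name pattern. The default pattern is an
# ASSUMPTION about the controller's pod name, not a documented value.
# Usage: find_ocean_controller [extended-regex]
find_ocean_controller() {
  local pattern="${1:-ocean|spot}"
  # Exit status is non-zero when nothing matches (grep's behavior).
  kubectl get pods --all-namespaces --no-headers | grep -Ei -- "$pattern"
}
```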

The setup page is displayed below.

Once connectivity was established (token generated and controller installed), I reviewed the configuration, which covers Images, Networks, Cluster tags, Login, Load Balancers, Disks, Extensions, and Authentication, and clicked Create to finish the import.

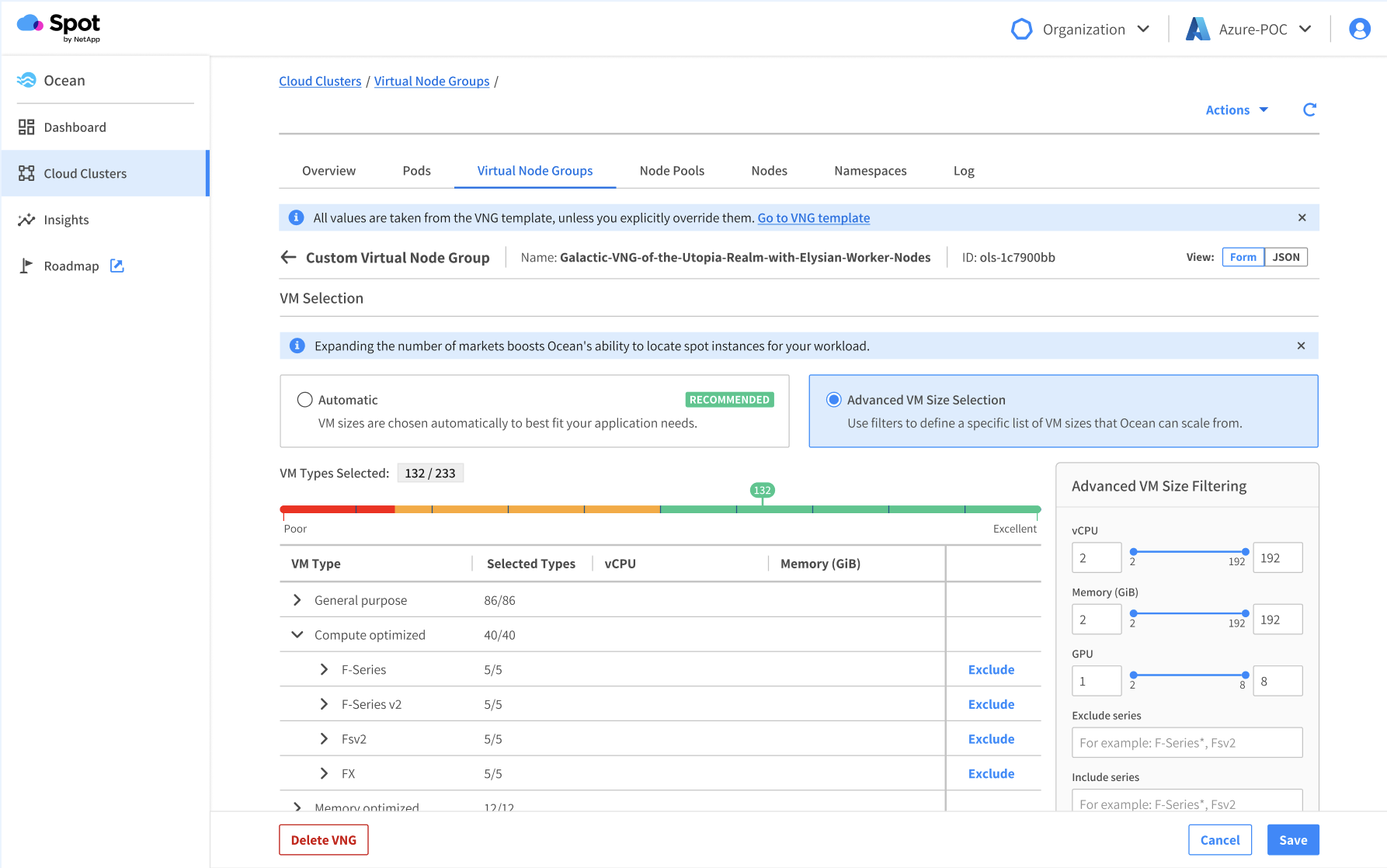

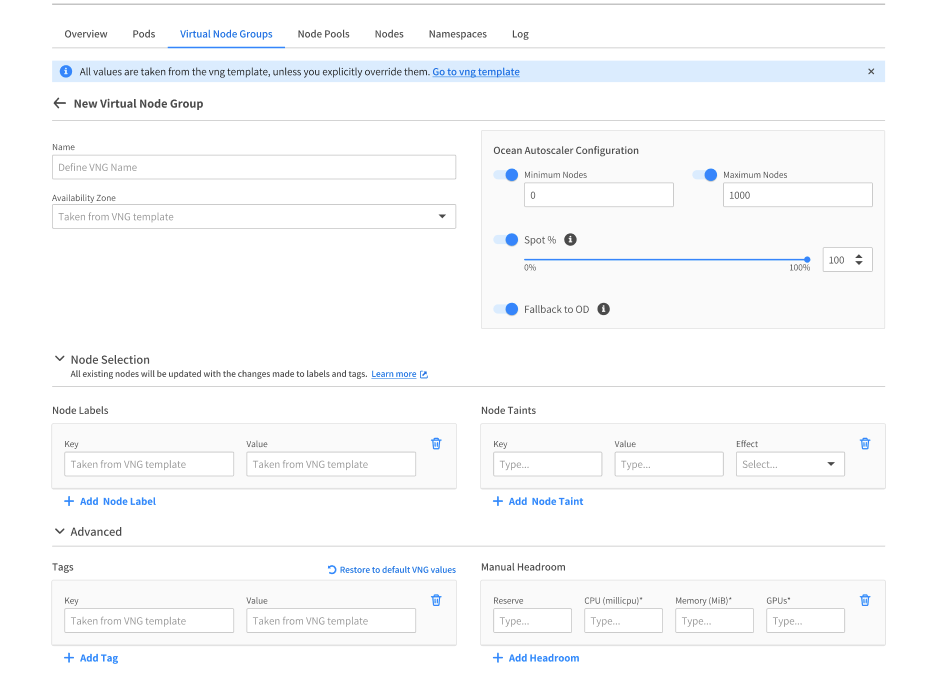

The last step is to configure a Virtual Node Group (VNG). VNGs are an abstraction layer that lets you manage different types of workloads on the same cluster. In a VNG’s settings you configure labels, taints, availability zones, disk configuration, and more, and Ocean applies those settings to every node pool and virtual machine it launches for that VNG.
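To illustrate how labels and taints on a VNG steer workloads, the sketch below prints a Pod manifest that selects nodes by label and tolerates a matching `NoSchedule` taint. The label key, value, image, and helper name are hypothetical examples, not settings from this cluster; substitute whatever your VNG applies.

```shell
#!/usr/bin/env bash
# Print a Pod manifest that only schedules onto nodes labeled <key>=<value>
# and tolerates a <key>=<value>:NoSchedule taint. Defaults are HYPOTHETICAL.
# Usage: vng_pod_manifest [label-key] [label-value] | kubectl apply -f -
vng_pod_manifest() {
  local key="${1:-workload-type}" value="${2:-batch}"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: vng-demo
spec:
  nodeSelector:
    ${key}: ${value}
  tolerations:
    - key: ${key}
      operator: Equal
      value: ${value}
      effect: NoSchedule
  containers:
    - name: app
      image: nginx:1.25
      resources:
        requests:
          cpu: 250m
          memory: 256Mi
EOF
}
```

Without the toleration, the pod could not land on the tainted nodes; without the node selector, it could land on any untainted node.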

For every VNG, Ocean builds a pool of candidate VM families from the allowed VM types and resource limits. I selected a diverse range of VM types, highlighted in blue in the screenshot below.

Note: You can remove instance families that you don’t intend to use.
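Before choosing VM types, it can help to see which sizes your subscription can use in the cluster’s region. A minimal sketch; `list_candidate_sizes` is a hypothetical helper, and `az vm list-skus --size` accepts a partial size name, so `D8` matches sizes such as Standard_D8_v3 and Standard_D8as_v4.

```shell
#!/usr/bin/env bash
# List VM sizes available in a region whose names contain a given fragment.
# Usage: list_candidate_sizes <location> <size-fragment>   e.g. eastus D8
list_candidate_sizes() {
  local location="${1:?location required}" fragment="${2:?size fragment required}"
  # Without --all, sizes unavailable to the current subscription are hidden.
  az vm list-skus --location "$location" --resource-type virtualMachines \
    --size "$fragment" --query "[].name" --output tsv | sort -u
}
```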

When creating a VNG, you can start from scratch or base it on an existing AKS node pool’s configuration. A VNG created this way inherits the labels, taints, availability zones, and disk configuration already defined for that node pool in Azure.
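To check what a VNG would inherit from an existing pool, you can print those settings from the pool itself. A minimal sketch; `show_pool_settings` and the arguments passed to it are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Show the labels, taints, zones and OS disk settings of one AKS node pool.
# Usage: show_pool_settings <resource-group> <cluster-name> <node-pool>
show_pool_settings() {
  local rg="${1:?}" cluster="${2:?}" pool="${3:?}"
  az aks nodepool show --resource-group "$rg" --cluster-name "$cluster" --name "$pool" \
    --query "{labels:nodeLabels, taints:nodeTaints, zones:availabilityZones, osDiskSizeGb:osDiskSizeGb, osDiskType:osDiskType}" \
    --output json
}
```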

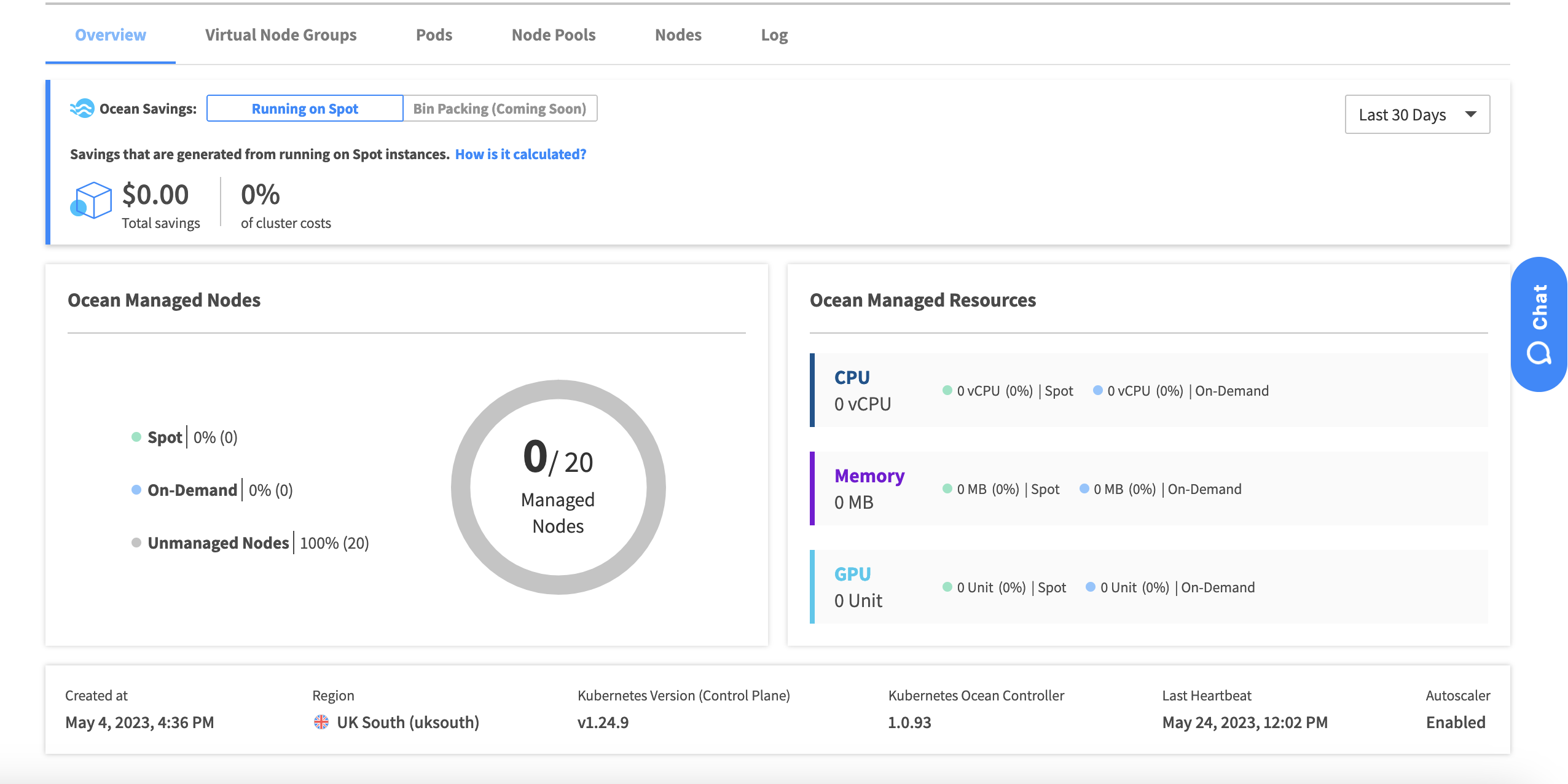

At this point the Ocean cluster existed but managed 0 of the 20 nodes in the AKS cluster: by design, Ocean does not interfere with node pools managed by AKS. For Ocean to manage the workloads, they must be “migrated” from AKS-managed node pools to Ocean-managed node pools.
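A quick way to see which pools the cluster’s nodes belong to is to count them by the `agentpool` label that AKS puts on its nodes. A minimal sketch; nodes without that label are reported under `<none>`, and the helper name is hypothetical.

```shell
#!/usr/bin/env bash
# Count nodes per value of the "agentpool" node label.
nodes_per_pool() {
  kubectl get nodes --no-headers \
    -o custom-columns=NAME:.metadata.name,POOL:.metadata.labels.agentpool |
    awk '{ count[$2]++ } END { for (p in count) print p, count[p] }' | sort
}
```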

Migrating workloads from AKS node pools to Ocean

To put the cluster under Ocean’s management, I migrated the workloads from the AKS node pools to Ocean.

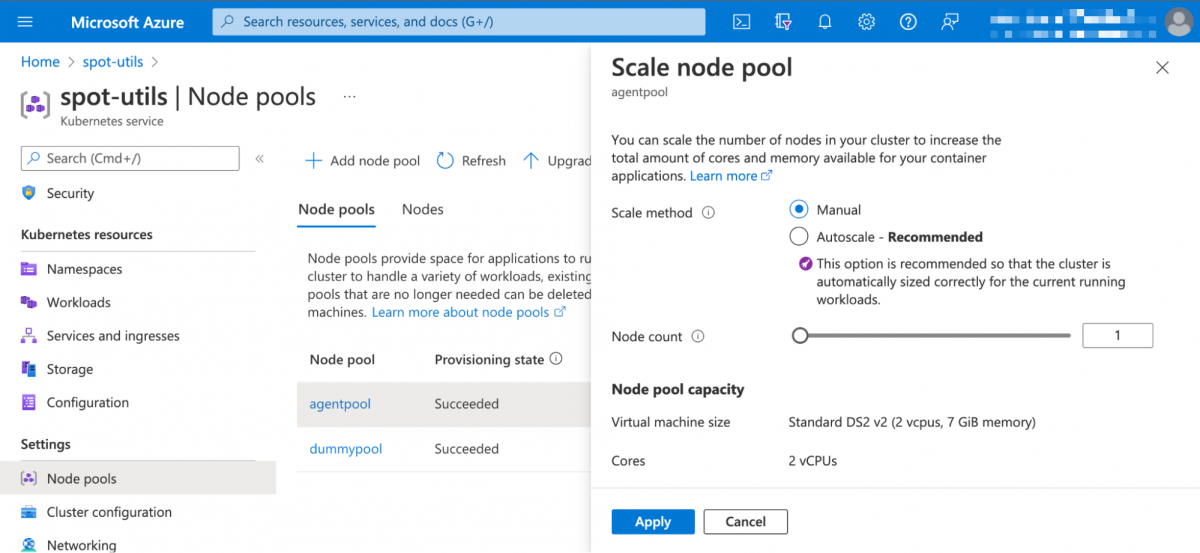

First, I disabled the cluster autoscaler on the AKS node pools so that Ocean, rather than AKS, would provide capacity for new pods and workloads.

Next, I began scaling down the AKS node pools. As their nodes were removed, the evicted pods became pending, which Ocean detected as a need for capacity and scaled up accordingly.
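Scaling a pool down in steps, rather than all at once, gives new capacity time to come up while pods are rescheduled. The sketch below is one way to do that; `scale_down_gradually` is a hypothetical helper, the step size and pause are illustrative defaults rather than values from this migration, and the pool’s cluster autoscaler must already be disabled, because AKS rejects manual scaling of an autoscaler-enabled pool.

```shell
#!/usr/bin/env bash
# Step an AKS node pool down to <target> nodes, <step> nodes at a time,
# pausing between steps so evicted pods can be rescheduled.
# Usage: scale_down_gradually <rg> <cluster> <pool> <target> [step] [pause-seconds]
scale_down_gradually() {
  local rg="${1:?}" cluster="${2:?}" pool="${3:?}" target="${4:?}"
  local step="${5:-2}" pause="${6:-300}" count

  # Current node count of the pool.
  count="$(az aks nodepool show --resource-group "$rg" --cluster-name "$cluster" \
    --name "$pool" --query count --output tsv)" || return 1

  while [ "$count" -gt "$target" ]; do
    count=$(( count - step ))
    if [ "$count" -lt "$target" ]; then count="$target"; fi
    az aks nodepool scale --resource-group "$rg" --cluster-name "$cluster" \
      --name "$pool" --node-count "$count" || return 1
    # No need to wait after the final step.
    if [ "$count" -gt "$target" ]; then sleep "$pause"; fi
  done
}
```

For example, `scale_down_gradually my-rg my-aks userpool 0 2 300` removes two nodes every five minutes until the pool is empty.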

In summary, to give Ocean control over the AKS cluster I:

- Disabled the cluster autoscaler on every node pool (this must be done for each pool separately).

- Kept at least one node running in the default System pool, as Azure recommends, and scaled the User pools down to 0.

I made these changes manually in the Azure portal, but they can also be made with the following Azure CLI commands:

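```shell
# Disable the cluster autoscaler (repeat for every node pool)
az aks nodepool update --disable-cluster-autoscaler \
  -g "${resourceGroupName}" -n "${nodePoolName}" --cluster-name "${aksClusterName}"

# Scale a User pool to 0 nodes (use --node-count 1 for the System pool)
az aks nodepool scale --resource-group "${resourceGroupName}" \
  --cluster-name "${aksClusterName}" -n "${nodePoolName}" --node-count 0
```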
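Applied to every pool, the two commands above can be combined into a loop. This is a minimal Bash sketch, assuming the Azure CLI is signed in; `hand_over_node_pools` is a hypothetical helper. It disables the autoscaler only where it is enabled, scales System pools to one node and User pools to zero, and does each pool in a single step, so it evicts pods more abruptly than a gradual scale-down would.

```shell
#!/usr/bin/env bash
# Hand every node pool over: disable the cluster autoscaler where it is
# enabled, keep one node in System pools and scale User pools to zero.
# Usage: hand_over_node_pools <resource-group> <cluster-name>
hand_over_node_pools() {
  local rg="${1:?}" cluster="${2:?}" pools name mode autoscale target

  # One tab-separated line per pool: name, mode, autoscaler flag.
  pools="$(az aks nodepool list --resource-group "$rg" --cluster-name "$cluster" \
    --query "[].[name, mode, enableAutoScaling]" --output tsv)" || return 1

  # Read from fd 3 so that az calls inside the loop cannot consume the list.
  while IFS=$'\t' read -r name mode autoscale <&3; do
    [ -n "$name" ] || continue

    # Manual scaling is rejected while the autoscaler is on; accept either
    # "True" or "true" for the boolean in tsv output.
    case "$autoscale" in
      [Tt]rue)
        az aks nodepool update --resource-group "$rg" --cluster-name "$cluster" \
          --name "$name" --disable-cluster-autoscaler || return 1 ;;
    esac

    if [ "$mode" = "System" ]; then target=1; else target=0; fi
    az aks nodepool scale --resource-group "$rg" --cluster-name "$cluster" \
      --name "$name" --node-count "$target" || return 1
  done 3<<< "$pools"
}
```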

As the pods from the scaled-down nodes became unscheduled, Ocean took charge and launched new nodes for them.

As the screenshots below show, the cluster is now managed by Ocean, except for a single System node that runs the kube-system deployments AKS requires.
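To follow the handover from a terminal, you can poll until no pods are left pending. A minimal sketch; the timeout and interval defaults are arbitrary, and `wait_for_pending_to_clear` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Poll until no pods are Pending; return 1 if the timeout is reached first.
# Usage: wait_for_pending_to_clear [timeout-seconds] [interval-seconds]
wait_for_pending_to_clear() {
  local timeout="${1:-900}" interval="${2:-30}" waited=0 pending
  while :; do
    pending="$(kubectl get pods --all-namespaces --field-selector=status.phase=Pending \
      --no-headers 2>/dev/null | wc -l | tr -d ' ')"
    echo "pending pods: $pending"
    [ "$pending" -eq 0 ] && return 0
    [ "$waited" -ge "$timeout" ] && return 1
    sleep "$interval"
    waited=$(( waited + interval ))
  done
}
```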

After the first day of running all workloads on Spot VMs managed by Spot Ocean, I observed the following:

- Cluster utilization initially reached 97%, a significant improvement. To schedule new pods faster, I configured 5% headroom for the “User” VNG. This lowered utilization to 92%, but the buffer of spare capacity lets workloads scale out faster.

- The cluster’s costs dropped by 85%. Ocean provisions a wider variety of spot VM sizes than the AKS-managed node pool offers, and bin-packing workloads onto nodes reduced the number of nodes required.

- The number of unscheduled pods decreased significantly.

- No spot VMs were interrupted during the first day. Since then, we have seen an average of three interruptions per week, which Ocean has handled seamlessly without affecting running workloads.
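Ocean reports utilization in its own console. To get a rough comparable figure yourself, you can compare the CPU requested by running pods with the allocatable CPU of all nodes. This sketch is an approximation under stated assumptions: it ignores init containers, pod overhead, and memory, so it will not necessarily match Ocean’s number. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Convert a Kubernetes CPU quantity ("250m" or "2") to millicores.
to_millicores() {
  case "$1" in
    *m) echo "${1%m}" ;;
    *)  awk -v c="$1" 'BEGIN { printf "%d", c * 1000 }' ;;
  esac
}

# Percentage of allocatable node CPU requested by running pods' containers
# (approximation: init containers and pod overhead are not counted).
cpu_request_utilization() {
  local alloc=0 req=0 q
  for q in $(kubectl get nodes -o jsonpath='{.items[*].status.allocatable.cpu}'); do
    alloc=$(( alloc + $(to_millicores "$q") ))
  done
  for q in $(kubectl get pods --all-namespaces --field-selector=status.phase=Running \
      -o jsonpath='{.items[*].spec.containers[*].resources.requests.cpu}'); do
    req=$(( req + $(to_millicores "$q") ))
  done
  awk -v r="$req" -v a="$alloc" 'BEGIN { printf "%.1f\n", (a ? 100 * r / a : 0) }'
}
```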

What does this mean for your AKS cluster costs?

If you are already running workloads on AKS, adopting this solution can greatly reduce your costs and operational overhead. With Spot Ocean for AKS, we cut compute costs for this cluster by 85%. Beyond the cost savings, workloads now perform better, and unscheduled pods occur far less often.

Ready to improve performance and cut your AKS cluster costs by 85%? Book a demo!