AWS ECS vs. EKS

| What is AWS ECS? | What is AWS EKS? |
| --- | --- |
| Amazon Elastic Container Service (ECS) is Amazon’s home-grown container orchestration service. It lets you run and manage large numbers of containers. Importantly, it is not based on Kubernetes. ECS runs your containers on clusters of Amazon EC2 compute instances, managing and scaling them on your machines. It provides an API you can use to check cluster state, perform operations on clusters or containers, and integrate with AWS services such as IAM and CloudWatch. | Amazon Elastic Kubernetes Service (EKS) is a managed Kubernetes service. It lets you run Kubernetes clusters on AWS without installing Kubernetes manually on EC2 compute instances. With EKS, Amazon manages and secures the Kubernetes control plane (components such as etcd and the API server), while your organization is responsible for managing the Kubernetes worker nodes. EKS is a certified Kubernetes-conformant service, which means that your existing clusters and tools from the Kubernetes ecosystem should migrate and work smoothly. |

If you want to learn what container orchestrators are used for and why you should use them, see our quick primer on container orchestration below.

In this article, you will learn:

- ECS vs EKS: Key Differences

- Bottom Line: Should You Choose ECS or EKS?

- Quick Primer: Why Use a Container Orchestrator?

- Spot’s Ocean: Abstracting Containers from the Infrastructure

AWS ECS vs EKS: Key Differences

Let’s compare AWS ECS and EKS to highlight the differences between the two platforms and the advantages of each, so you can choose the best option for you.

Pricing

Generally speaking, ECS and EKS clusters running on EC2 instances incur the same compute costs, based on the instance type each cluster uses and how long each instance runs.

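In addition, Amazon EKS charges $0.10 per hour per Kubernetes cluster, which amounts to about $73 per month per cluster. This fee covers the managed control plane, so there is no separate charge for master nodes. ECS has no equivalent per-cluster charge.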
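To make the per-cluster fee concrete, here is a small calculation sketch. It assumes the $0.10 per hour rate and an average month of 730 hours (365 × 24 / 12); the function name is hypothetical, and the result covers only the EKS cluster fee, not the EC2 worker-node costs that ECS and EKS share.

```python
# Rough EKS control-plane fee estimate (illustrative only, not AWS billing logic).
# Assumptions: $0.10 per cluster-hour and a 730-hour average month.
EKS_CLUSTER_FEE_PER_HOUR = 0.10
HOURS_PER_MONTH = 365 * 24 / 12  # 730.0

def eks_control_plane_cost(clusters: int, hours: float = HOURS_PER_MONTH) -> float:
    """Return the EKS per-cluster fee for `clusters` clusters over `hours` hours.

    EC2 worker-node costs are excluded; ECS adds no per-cluster fee at all.
    """
    return round(clusters * hours * EKS_CLUSTER_FEE_PER_HOUR, 2)

print(eks_control_plane_cost(1))  # 73.0
print(eks_control_plane_cost(3))  # 219.0
```

Running one dev, one staging and one production cluster therefore adds roughly $219 per month on EKS before any compute is counted.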

Learn more in our detailed guide to EKS pricing.

Deployment Effort

As mentioned in the introduction, Kubernetes and ECS share similar orchestration concepts, and both EKS and ECS can be set up initially through the AWS Management Console. However, they differ in how easy they are to deploy.

ECS is considered an “out of the box” solution for container orchestration because it is simple to deploy. Deploying Kubernetes clusters on EKS is trickier and requires more complex configuration and expertise. With ECS there is no Kubernetes control plane to configure and interact with, as there is in EKS. Once the initial cluster setup is done, this is where the simplicity of ECS pays off: you can configure and deploy tasks directly from the AWS Management Console.
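For a sense of what “configuring a task” involves, the sketch below builds a minimal ECS task definition as a Python dict and prints it as JSON. The field names follow the ECS task definition schema; the family name, container name, image and sizes are hypothetical placeholders.

```python
import json

# Minimal ECS task definition (placeholder values), serialized to JSON.
task_definition = {
    "family": "web-app",                 # hypothetical task family name
    "networkMode": "awsvpc",             # each task gets its own ENI
    "requiresCompatibilities": ["EC2"],  # run on EC2 container instances
    "containerDefinitions": [
        {
            "name": "web",
            "image": "nginx:latest",     # placeholder; often an ECR image URI
            "cpu": 256,                  # CPU units (1024 = 1 vCPU)
            "memory": 512,               # hard memory limit in MiB
            "essential": True,           # task stops if this container stops
            "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
        }
    ],
}

# The printed JSON could be pasted into the console's JSON editor, or saved and
# registered with: aws ecs register-task-definition --cli-input-json file://task-def.json
print(json.dumps(task_definition, indent=2))
```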

In EKS, you configure and deploy Pods through Kubernetes itself (for example, with kubectl and manifest files), which requires more expertise from DevOps engineers.
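For comparison, this is a minimal Kubernetes Pod manifest roughly equivalent to a single-container ECS task. It is built as a dict and printed as JSON (kubectl accepts JSON manifests as well as YAML); the Pod name, label and image are hypothetical placeholders.

```python
import json

# Minimal Kubernetes Pod manifest (placeholder values).
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web-app", "labels": {"app": "web-app"}},
    "spec": {
        "containers": [
            {
                "name": "web",
                "image": "nginx:latest",  # placeholder; could be an ECR image URI
                "ports": [{"containerPort": 80}],
                "resources": {
                    "requests": {"cpu": "250m", "memory": "512Mi"},  # used for scheduling
                    "limits": {"memory": "512Mi"},                   # hard memory cap
                },
            }
        ]
    },
}

# Save the output as pod.json and apply it with: kubectl apply -f pod.json
print(json.dumps(pod_manifest, indent=2))
```

In practice, Pods are usually created through a Deployment, which adds replica management and rolling updates on top of this spec.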

Networking

With ECS, you can attach an elastic network interface (ENI) directly to a task by launching it in “awsvpc” network mode. However, the maximum number of ENIs (virtual NICs) that can be attached to an EC2 instance depends on the instance type (for example, 3 for an m5.large and 15 for an m5.24xlarge), and one of them is the instance’s own primary interface. This may not be enough to support all the containers you want to run on a particular instance.

To ease this limit, AWS introduced ENI trunking for ECS clusters running tasks in “awsvpc” mode. Once enabled through the `awsvpcTrunking` account setting, it lets you assign 3 to 8 times more ENIs than the previous limit (depending on the instance type), increasing task density while keeping each task’s network isolation.
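A rough way to estimate task density in “awsvpc” mode is sketched below. Without trunking, each task needs its own ENI and the primary ENI stays with the host, so tasks per instance equal the ENI limit minus one. The trunking case simply applies the 3-to-8× range quoted above as a multiplier; that is an illustrative assumption, not AWS’s published per-instance limits, and both function names are hypothetical.

```python
# Illustrative awsvpc task-density estimate for one EC2 container instance.

def awsvpc_task_limit(max_enis: int) -> int:
    """Tasks per instance without ENI trunking: one ENI per task, minus the primary ENI."""
    return max(max_enis - 1, 0)

def trunked_task_range(max_enis: int, low: int = 3, high: int = 8) -> tuple[int, int]:
    """Assumed range with trunking, scaling the untrunked limit by the quoted 3-8x."""
    base = awsvpc_task_limit(max_enis)
    return base * low, base * high

# Example: an instance type that supports 3 ENIs, such as an m5.large.
print(awsvpc_task_limit(3))   # 2
print(trunked_task_range(3))  # (6, 16)
```

Check the ECS documentation for the exact trunked limits of your instance types before sizing a cluster.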

With EKS, each Pod gets its own IP address from the VPC (by default through the Amazon VPC CNI plugin), and all containers inside that Pod share its network namespace and IP address. Because the plugin assigns secondary IP addresses from each ENI to Pods, many Pods can share a single ENI, so you can place many more Pods per instance.

Depending on instance size, EKS allows up to 750 Pods per instance, significantly more than ECS, which supports a maximum of 120 tasks per instance.
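The Pod ceiling per node comes from a commonly cited formula for the Amazon VPC CNI in its default secondary-IP mode (without prefix delegation): each ENI’s primary IP is not handed to Pods, and 2 is added for host-network Pods such as kube-proxy and the CNI’s own aws-node Pod. The sketch below applies it; the ENI and IPs-per-ENI figures for m5.large and m5.24xlarge are EC2 instance-type limits you should verify for your own instance types.

```python
# Default max-pods estimate for an EKS node using the Amazon VPC CNI
# (secondary-IP mode, no prefix delegation).

def eks_max_pods(max_enis: int, ipv4_per_eni: int) -> int:
    """max_enis * (IPs per ENI - 1 primary IP) + 2 host-network Pods."""
    return max_enis * (ipv4_per_eni - 1) + 2

print(eks_max_pods(3, 10))   # m5.large: 3 ENIs x 10 IPs -> 29
print(eks_max_pods(15, 50))  # m5.24xlarge: 15 ENIs x 50 IPs -> 737
```

The larger result is in the same range as the “up to 750 Pods” figure above, while the same m5.large could run only 2 untrunked awsvpc tasks on ECS.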

Why is it important to assign a network interface directly to a task or Pod? Improved security. You can attach a security group dedicated to that individual task or Pod, rather than opening ports on the hosting EC2 instance’s security group for every container running on it.

Security

Both ECS and EKS can store their Docker container images securely in Amazon ECR (Elastic Container Registry). Each time a container starts, it securely pulls its image directly from ECR.

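ECS supports IAM roles per task. Assigning an IAM role to each task adds a layer of security by granting its containers access only to the specific AWS services they need, such as S3, DynamoDB, Redshift or SQS. EKS offers a comparable capability through IAM roles for service accounts (IRSA), which associate an IAM role with the Pods that use a given Kubernetes service account.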
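A per-task role is an ordinary IAM role whose trust policy allows the `ecs-tasks.amazonaws.com` service principal to assume it; its ARN is then referenced by the task definition’s `taskRoleArn` field. The sketch below shows both policies as Python dicts. The account ID, role name and bucket name are hypothetical placeholders.

```python
import json

# Trust policy: lets ECS tasks assume this role.
trust_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "ecs-tasks.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}

# Permissions policy attached to the same role: read-only access to one bucket.
s3_read_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": ["s3:GetObject", "s3:ListBucket"],
            "Resource": [
                "arn:aws:s3:::example-bucket",    # hypothetical bucket (ListBucket)
                "arn:aws:s3:::example-bucket/*",  # objects in it (GetObject)
            ],
        }
    ],
}

# Task definition fragment that attaches the role to every container in the task.
task_definition_fragment = {
    "family": "web-app",
    "taskRoleArn": "arn:aws:iam::123456789012:role/web-app-task-role",
}

print(json.dumps(trust_policy, indent=2))
print(json.dumps(s3_read_policy, indent=2))
```

Containers in the task then receive temporary credentials for this role only, so a compromised container cannot reach services the role does not allow.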

Multi-Cloud Compatibility

As cloud computing evolves, more and more organizations are distributing their workloads across multiple cloud providers to benefit from the different services and pricing each one offers.

ECS is an AWS-native service that can only be used on AWS infrastructure, which results in vendor lock-in. EKS is based on Kubernetes, an open-source project that is available across multiple clouds (AWS, GCP, Azure) and even on-premises. Because workloads and tooling built for Kubernetes are portable, this provides extra flexibility and allows users to run containers across multiple clouds.

Bottom Line: Should You Choose ECS or EKS?

We have covered the key differentiators between Amazon EKS and ECS, and now the only issue left is to decide what is most suitable for your team.

If you are new to containers and are looking for a simple way to set up and deploy clusters, perhaps ECS is the easier choice.

On the other hand, if you are experienced and are looking for a better way to scale your clusters and avoid vendor lock-in, EKS may be the solution for you.

If you already have containers running on Kubernetes or want an advanced orchestration solution with more compatibility, you should use Amazon EKS.

If you are looking for a solution that combines simplicity and availability with tight integration into your AWS infrastructure, ECS is the right choice for you.

While ECS offers tighter integration with AWS services, Kubernetes users benefit from the additional capabilities of its open-source ecosystem.

Quick Primer: Why Use a Container Orchestrator?

In the past few years, containers have dramatically changed the way organizations develop, package and deploy applications.

Running applications in containers rather than traditional VMs brings great value because containers are easy to scale and ephemeral.

However, when managing large clusters, scaling them can become an operational burden for engineering teams.

When operating at scale, a container orchestration platform that automates the deployment, management, scaling, networking and availability of container clusters becomes a necessity.

Learn more in our detailed guide to EKS autoscaling.

Container orchestration is all about managing the lifecycle of containers in large environments, and that includes various tasks such as:

- Provisioning and deployment of containers on instances

- Redundancy and availability of containers

- Scaling up/down based on a given load

- Resource allocation

- Health monitoring of containers and hosts

- Seamless deployment of new application versions
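The scaling item in the list above can be made concrete with the proportional rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The sketch below is a simplified, hypothetical re-implementation (the function name and min/max defaults are ours; the 0.1 tolerance mirrors the HPA’s default). It omits the real controller’s stabilization windows and Pod-readiness handling, and ECS Service Auto Scaling uses its own target-tracking policies.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Simplified HPA-style rule: scale replicas in proportion to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current replica count.
        desired = current_replicas
    else:
        # Round up so the new replica count can absorb the load.
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, 90, 60))   # 6  (CPU above target: scale up)
print(desired_replicas(4, 30, 60))   # 2  (CPU below target: scale down)
print(desired_replicas(4, 63, 60))   # 4  (within tolerance: no change)
print(desired_replicas(8, 120, 60))  # 10 (16 wanted, clamped to max_replicas)
```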

Spot’s Ocean: Abstracting Containers from the Infrastructure

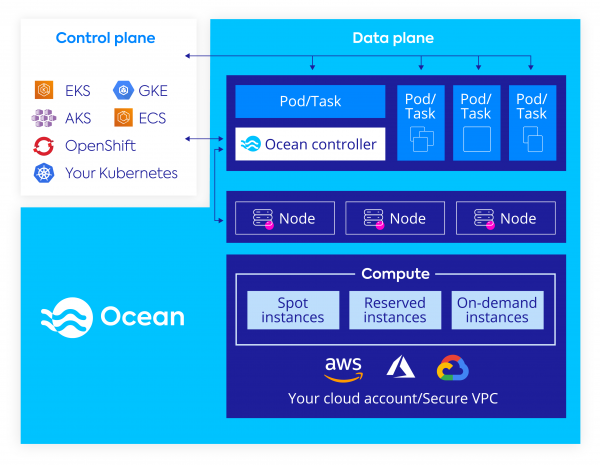

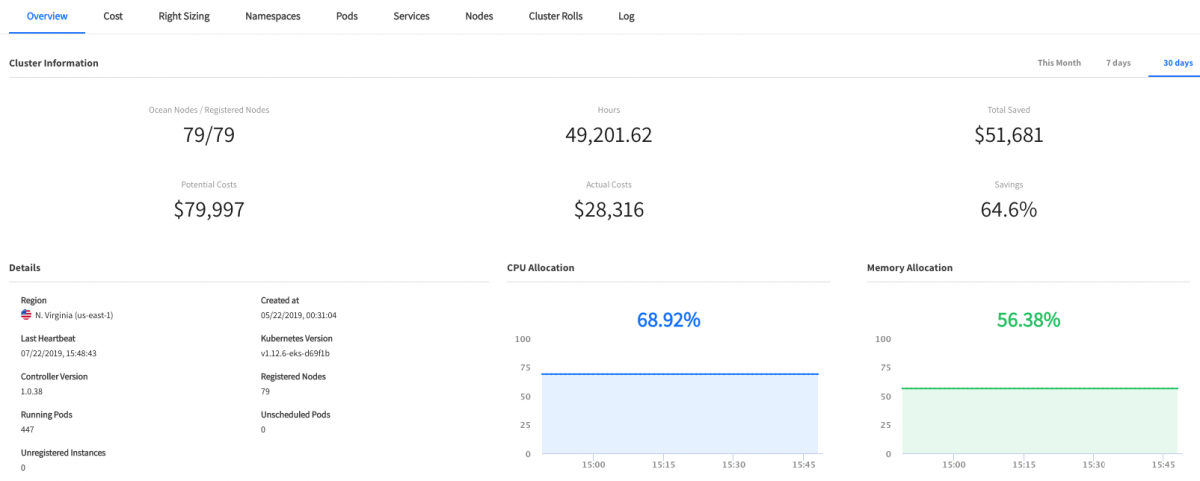

Spot’s Ocean is our serverless compute engine that provides end-to-end management of the data plane (worker nodes), abstracting Kubernetes Pods and Amazon ECS tasks from the underlying VMs and infrastructure. Ocean relieves engineering teams of the overhead of managing the most complicated part of Kubernetes or ECS clusters by dynamically provisioning, scaling and managing the data-plane components (worker nodes and EC2 instances).

Advanced Automation

Ocean takes advantage of multiple compute purchasing options, such as Reserved and Spot instances, and uses On-Demand instances only as a fallback, providing an 80% reduction in cloud infrastructure costs while maintaining high availability for production and mission-critical applications.

Please check out this blog explaining the capabilities you can achieve with Spotinst Ocean and why it is considered the go-to product for running container workloads in the public cloud while significantly lowering cloud compute costs.

Are you already running containers and looking to automate your workloads at the lowest cost while gaining deeper visibility into your clusters?

Take Ocean for a spin!