What Is Azure Kubernetes Service (AKS)?

Azure Kubernetes Service (AKS) is a managed container orchestration service provided by Microsoft Azure, which simplifies the deployment, scaling, and management of containerized applications.

AKS is built on the popular open-source Kubernetes system, allowing developers to leverage Kubernetes features and tools while benefiting from the scalability, security, and integration capabilities of the Azure cloud platform.

By automating key aspects of Kubernetes cluster management, AKS helps to reduce the complexity and operational overhead for developers and DevOps teams. This is part of an extensive series of guides about cloud security.

In this article:

- Azure Kubernetes Service Features and Capabilities

- Azure Kubernetes Service Architecture

- Common Use Cases For Azure Kubernetes Service

- Azure Kubernetes Service Pricing

- Other Kubernetes Services on Azure

- AKS Tutorial: Create an AKS Cluster

Azure Kubernetes Service Features and Capabilities

Azure Kubernetes Service (AKS) offers a range of features and capabilities that make it an attractive choice for managing containerized applications. Some key features and capabilities include:

- Managed Kubernetes control plane: AKS provides a fully managed Kubernetes control plane, automating critical tasks such as patching, upgrading, and monitoring, which helps reduce the operational overhead for developers and DevOps teams.

- Scalability: AKS enables easy scaling of applications and resources to meet changing demands. It supports horizontal pod autoscaling, cluster autoscaling, and manual scaling to ensure optimal resource utilization.

- Integrated Azure services: AKS allows seamless integration with various Azure services such as Azure Active Directory, Azure Monitor, Azure Policy, and Azure DevOps, providing a comprehensive cloud-based solution for container management.

- Security and compliance: AKS offers built-in security features such as role-based access control (RBAC), private clusters, and Azure Private Link for secure communication. Additionally, AKS falls within the scope of many Azure compliance offerings, such as ISO 27001, SOC, and PCI DSS.

- DevOps and CI/CD integration: AKS supports integration with popular DevOps and CI/CD tools like Jenkins, GitLab, and Azure Pipelines, which simplifies deployment and management of containerized applications.

- Multi-node pools: AKS supports the use of multiple node pools within a single cluster, allowing you to run different types of workloads with varying resource requirements, operating systems, or availability zones.

- Networking options: AKS provides flexible networking options, including Azure Virtual Network (VNet) integration, network policies, and ingress controllers, to tailor the network architecture based on your needs.

- Persistent storage: AKS supports various storage options for persistent data, such as Azure Disks, Azure Files, and Azure NetApp Files, enabling stateful applications to retain their data across pod restarts and updates.

- Windows container support: In addition to Linux containers, AKS also supports Windows containers, which allows developers to run Windows-based applications on the same Kubernetes cluster.

- Global availability: AKS is available in many Azure regions worldwide, so you can deploy clusters close to your users to reduce latency and, where supported, spread nodes across availability zones for higher availability.
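The horizontal pod autoscaling mentioned under Scalability follows a proportional rule that the Kubernetes documentation gives as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces that rule for a single metric. It includes the default 10% tolerance band and clamps the result to a minimum and maximum replica count. The function name and parameters are illustrative and are not part of any Azure or Kubernetes API. The real controller also handles multiple metrics, missing or unready pods, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,
    max_replicas: int = 10,
    tolerance: float = 0.1,
) -> int:
    """Simplified single-metric version of the HPA scaling rule (hypothetical helper)."""
    if current_replicas < 1 or target_metric <= 0:
        raise ValueError("need at least one replica and a positive target")
    ratio = current_metric / target_metric
    # Within the tolerance band around 1.0, the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the bounds configured on the autoscaler.
    return max(min_replicas, min(max_replicas, desired))


# 4 replicas averaging 90% CPU against a 60% target: ceil(4 * 1.5) = 6
print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
```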

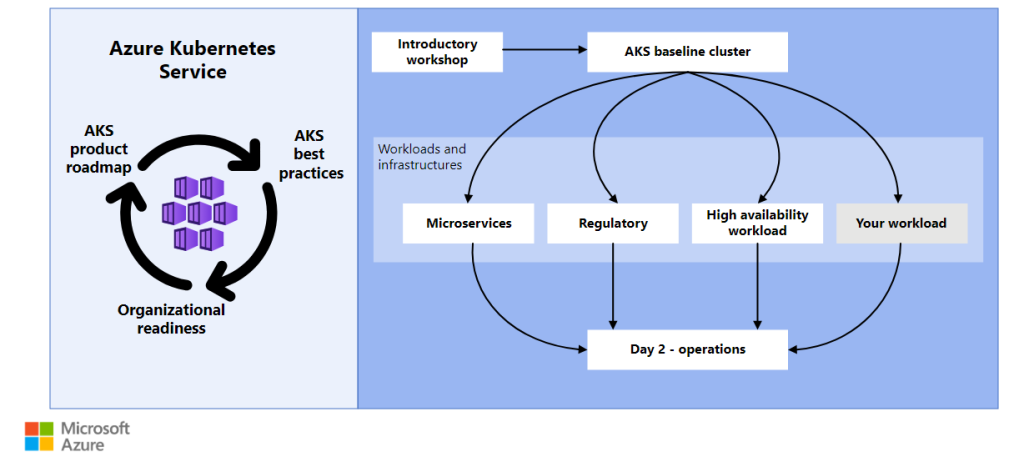

Azure Kubernetes Service Architecture

The Azure Kubernetes Service (AKS) architecture consists of several components working together to manage containerized applications. Here’s an overview of the key architectural elements:

Control plane

The control plane is a set of managed Kubernetes components that maintain the overall state and configuration of the cluster. These components include the API server, etcd, controller manager, and scheduler. AKS operates the control plane for you. It keeps these components highly available, handles their patching and version upgrades (which you can trigger manually or schedule through auto-upgrade channels), and integrates them with Azure services.

Node pools

Node pools are groups of virtual machines, typically backed by Azure Virtual Machine Scale Sets, that act as worker nodes in the Kubernetes cluster. Each node pool can have a different configuration, such as VM size, operating system, or availability zones. Nodes within the same pool share the same configuration.
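To run a workload on a particular node pool, a pod spec can use a nodeSelector on labels that AKS applies to its nodes. Examples include kubernetes.azure.com/agentpool, which holds the node pool name, and the standard kubernetes.io/os label. The sketch below builds a Deployment manifest as a Python dictionary that targets a Windows node pool. The pool name npwin, the app name, and the IIS image are example values. You could serialize the result to JSON and apply it with kubectl.

```python
import json


def deployment_for_pool(name: str, image: str, pool: str, os_name: str, replicas: int = 1) -> dict:
    """Build a Deployment whose pods are pinned to one AKS node pool (illustrative helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # Both labels must match for the scheduler to place the pod on a node.
                    "nodeSelector": {
                        "kubernetes.azure.com/agentpool": pool,
                        "kubernetes.io/os": os_name,
                    },
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


manifest = deployment_for_pool(
    name="iis-sample",
    image="mcr.microsoft.com/windows/servercore/iis",  # example Windows image
    pool="npwin",  # hypothetical Windows node pool name
    os_name="windows",
)
print(json.dumps(manifest, indent=2))  # e.g. pipe to `kubectl apply -f -`
```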

Pods

Pods are the smallest deployable units in Kubernetes and consist of one or more containers that share the same network namespace and storage volumes. AKS schedules and runs your application’s containers inside these pods across the worker nodes.
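The manifest below illustrates containers sharing a pod's storage. It is again written as a Python dictionary with example names and images. In this pod, a writer container appends timestamps to a file on a shared emptyDir volume, and a reader container follows the same file. Because both containers also share one network namespace, they could reach each other on localhost.

```python
import json

SHARED = "shared-data"  # volume name referenced by both containers

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "two-container-demo"},
    "spec": {
        # An emptyDir volume lives as long as the pod and is visible to every container that mounts it.
        "volumes": [{"name": SHARED, "emptyDir": {}}],
        "containers": [
            {
                "name": "writer",
                "image": "busybox",
                "command": ["sh", "-c", "while true; do date >> /data/log.txt; sleep 5; done"],
                "volumeMounts": [{"name": SHARED, "mountPath": "/data"}],
            },
            {
                "name": "reader",
                "image": "busybox",
                "command": ["sh", "-c", "touch /data/log.txt && tail -f /data/log.txt"],
                "volumeMounts": [{"name": SHARED, "mountPath": "/data"}],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```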

Services

Kubernetes services are abstractions that define a logical set of pods and a policy to access them. In AKS, services enable communication between the different components of an application and expose applications to clients inside or outside the cluster.
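A Service finds its pods through a label selector. The sketch below shows a LoadBalancer Service; on AKS, this type is backed by an Azure Load Balancer with a public IP by default. It is paired with a small function that mimics the equality-based matching a Service uses to decide which pods receive traffic. The names, labels, and ports are example values, and selects is a hypothetical helper for illustration, not a Kubernetes API.

```python
service = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {"name": "web"},
    "spec": {
        "type": "LoadBalancer",
        "selector": {"app": "web", "tier": "frontend"},
        # Clients connect on port 80; traffic is forwarded to port 8080 in the selected pods.
        "ports": [{"protocol": "TCP", "port": 80, "targetPort": 8080}],
    },
}


def selects(selector: dict, pod_labels: dict) -> bool:
    """Equality-based matching: every selector key must be present with the same value."""
    # A Service without a selector gets no automatically managed endpoints.
    if not selector:
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


pods = {
    "web-1": {"app": "web", "tier": "frontend", "version": "v2"},  # extra labels are fine
    "web-2": {"app": "web", "tier": "backend"},
    "db-1": {"app": "db"},
}
backends = [name for name, labels in pods.items() if selects(service["spec"]["selector"], labels)]
print(backends)  # ['web-1']
```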

Ingress

Ingress in AKS is a set of rules that control external HTTP and HTTPS access to services running in a cluster. It can provide load balancing, TLS (SSL) termination, and name-based virtual hosting. Ingress resources in AKS can be served by Azure Application Gateway, through the Application Gateway Ingress Controller, or by Kubernetes-native ingress controllers such as NGINX.
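The manifest below sketches name-based virtual hosting with TLS termination using the networking.k8s.io/v1 Ingress API. It is followed by a small hypothetical route function that resolves a request's host and path against the rules, the way a simplified controller would. The host names, Secret name, backend Services, and the nginx ingress class are assumptions. An Application Gateway setup would use a different class name.

```python
ingress = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "Ingress",
    "metadata": {"name": "web-ingress"},
    "spec": {
        "ingressClassName": "nginx",  # assumption: an NGINX ingress controller is installed
        # TLS is terminated at the controller using the certificate stored in this Secret.
        "tls": [{"hosts": ["shop.example.com", "blog.example.com"], "secretName": "example-tls"}],
        "rules": [
            {
                "host": "shop.example.com",
                "http": {"paths": [{"path": "/", "pathType": "Prefix",
                                    "backend": {"service": {"name": "shop", "port": {"number": 80}}}}]},
            },
            {
                "host": "blog.example.com",
                "http": {"paths": [{"path": "/", "pathType": "Prefix",
                                    "backend": {"service": {"name": "blog", "port": {"number": 80}}}}]},
            },
        ],
    },
}


def route(host: str, path: str):
    """Return the backend Service name for a request, or None (simplified Prefix matching)."""
    for rule in ingress["spec"]["rules"]:
        if rule["host"] != host:
            continue
        for p in rule["http"]["paths"]:
            if path.startswith(p["path"]):
                return p["backend"]["service"]["name"]
    return None


print(route("blog.example.com", "/posts/1"))  # blog
```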

Common Use Cases For Azure Kubernetes Service

Azure Kubernetes Service is a versatile platform suited to a wide range of application scenarios. Here are some of the most common use cases:

- Lift and Shift to Containers: Organizations can migrate their existing applications to containers and host them in AKS. This approach allows them to modernize legacy applications while benefiting from Kubernetes features like scaling and automated updates. AKS simplifies the transition to containerized environments by providing a managed Kubernetes service that reduces operational complexity.

- Microservices management: AKS is ideal for deploying and managing microservices-based architectures. It supports horizontal scaling, self-healing, load balancing, and secret management, which are critical for the efficient operation of microservices. By leveraging these capabilities, developers can focus on building applications without worrying about the underlying infrastructure.

- Secure DevOps: With AKS, organizations can implement secure DevOps practices. This includes automating CI/CD pipelines, managing secrets, and ensuring compliance with security policies. AKS integrates seamlessly with tools like Azure Pipelines and GitHub Actions, allowing teams to balance speed and security during the software development lifecycle.

- Bursting from AKS with Azure Container Instances (ACI): For workloads with fluctuating demand, AKS can use Azure Container Instances (ACI) for rapid scaling. Virtual nodes provisioned in ACI allow pods to start in seconds, enabling applications to handle traffic spikes without requiring additional infrastructure investments.

- Machine Learning Model Training: AKS is an effective platform for training machine learning models, especially when working with large datasets. Tools like TensorFlow and Kubeflow can be deployed on AKS to create scalable, containerized environments for training and testing. This ensures efficient resource utilization and faster iteration cycles.

- Data Streaming: AKS supports real-time data streaming and processing, making it a powerful choice for applications that require fast insights from large volumes of sensor or user data. Organizations can build data pipelines to process, analyze, and act on complex data streams in near real-time.

- Using Windows Containers on AKS: For businesses running Windows-based applications, AKS offers robust support for Windows Server containers. This allows organizations to modernize their existing Windows applications and infrastructure while using the same Kubernetes environment to manage both Linux and Windows workloads.
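For the ACI bursting scenario described above, pods opt in to the virtual node through a node selector and tolerations. The values below follow the pattern in the AKS virtual nodes documentation: a type: virtual-kubelet selector plus tolerations for virtual-kubelet.io/provider and azure.com/aci. Treat them as a sketch to verify against the current docs. The Deployment name is a placeholder, and the image is the sample used in Azure's ACI tutorials. As written, the settings pin every replica to the virtual node rather than spilling over only when regular nodes are full.

```python
import json

# Scheduling hints that let pods land on the ACI-backed virtual node.
ACI_NODE_SELECTOR = {
    "kubernetes.io/role": "agent",
    "kubernetes.io/os": "linux",
    "type": "virtual-kubelet",
}
ACI_TOLERATIONS = [
    {"key": "virtual-kubelet.io/provider", "operator": "Exists"},
    {"key": "azure.com/aci", "effect": "NoSchedule"},
]


def burstable_deployment(name: str, image: str, replicas: int) -> dict:
    """Build a Deployment whose pods are scheduled onto the virtual node (illustrative helper)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{"name": name, "image": image}],
                    "nodeSelector": ACI_NODE_SELECTOR,
                    "tolerations": ACI_TOLERATIONS,
                },
            },
        },
    }


print(json.dumps(burstable_deployment("burst-demo", "mcr.microsoft.com/azuredocs/aci-helloworld", 5), indent=2))
```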

Azure Kubernetes Service Pricing

Here’s a breakdown of the pricing options available for AKS:

Azure Free Tier

The Azure free account provides a set of free services and a limited amount of resources each month for 12 months, and you can use it to create an AKS cluster for experimentation. Its resource limits generally make it unsuitable for production workloads. Separately, AKS has its own pricing tiers for cluster management. In the Free tier, the managed Kubernetes control plane costs nothing but comes without a financially backed uptime SLA. The Standard tier adds an hourly charge per cluster in exchange for an uptime SLA. In every tier, you still pay for the worker nodes that run your applications, so it’s important to know what is and isn’t covered.

Pay-As-You-Go

With the Pay-As-You-Go pricing tier, you only pay for the resources you consume. This includes the virtual machines, storage, and networking associated with your AKS cluster. The cost of the virtual machines depends on the size, region, and operating system you choose. Similarly, storage and networking costs depend on the resources you consume. This pricing tier provides flexibility and is suitable for workloads with variable resource requirements.

Reserved Instance Pricing

Azure offers Reserved Virtual Machine Instances, which allow you to reserve virtual machines for a 1-year or 3-year term in exchange for discounted rates compared to pay-as-you-go pricing. By committing to a longer term, you can achieve significant cost savings. This option is ideal for workloads with predictable resource requirements.

Azure Spot VMs

Spot Virtual Machines (VMs) run on Azure’s unused compute capacity and are available at a deep discount compared to pay-as-you-go pricing. They can be used in AKS node pools to run workloads at a lower cost. However, Spot VMs can be evicted whenever Azure needs the capacity back, so this pricing option suits fault-tolerant, flexible workloads that can handle interruptions.
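AKS taints the nodes in a Spot node pool with kubernetes.azure.com/scalesetpriority=spot:NoSchedule, so only pods that tolerate that taint are scheduled there. The sketch below adds the matching toleration and a required node affinity to a pod spec, so an interruption-tolerant workload runs only on Spot nodes. The helper name and container details are illustrative.

```python
import copy

SPOT_KEY = "kubernetes.azure.com/scalesetpriority"


def pin_to_spot(pod_spec: dict) -> dict:
    """Return a copy of pod_spec that tolerates the AKS Spot taint and requires Spot nodes."""
    spec = copy.deepcopy(pod_spec)
    spec.setdefault("tolerations", []).append(
        {"key": SPOT_KEY, "operator": "Equal", "value": "spot", "effect": "NoSchedule"}
    )
    # The toleration only permits Spot nodes; this affinity makes them mandatory.
    # Simplification: any existing affinity on the spec is replaced.
    spec["affinity"] = {
        "nodeAffinity": {
            "requiredDuringSchedulingIgnoredDuringExecution": {
                "nodeSelectorTerms": [
                    {"matchExpressions": [{"key": SPOT_KEY, "operator": "In", "values": ["spot"]}]}
                ]
            }
        }
    }
    return spec


batch_spec = {"containers": [{"name": "batch-worker", "image": "busybox", "command": ["sleep", "3600"]}]}
spot_spec = pin_to_spot(batch_spec)
```

Using a copy keeps the original spec reusable for pools that should not run on Spot capacity.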

Savings Plans

Azure Savings Plans are also available for a variety of Azure compute services, including the VMs behind Azure Kubernetes Service node pools. With a savings plan, you commit to a fixed hourly compute spend for one or three years, and usage up to that amount is billed at discounted rates. This flexibility suits dynamic workloads that change VM sizes or regions. Savings Plans can reduce compute spend by up to 65% and can be applied across multiple services.
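To compare these options, multiply the node count by the hourly VM rate and the hours in a month, then reduce the result by whatever discount applies. The sketch below uses the common 730-hours-per-month convention from Azure pricing estimates. All inputs are hypothetical: a $0.20/hour VM and illustrative discount percentages that are not Azure's published rates. Substitute real figures from the Azure pricing calculator. Note that Spot prices fluctuate and that commitment discounts apply only to the usage they cover.

```python
HOURS_PER_MONTH = 730  # convention used in Azure pricing estimates

# Hypothetical discounts relative to pay-as-you-go, for illustration only.
DISCOUNTS = {
    "pay-as-you-go": 0.0,
    "reserved (3-year)": 0.50,
    "savings plan (3-year)": 0.40,
    "spot": 0.80,
}


def monthly_node_cost(nodes: int, hourly_rate: float, discount: float) -> float:
    """Monthly worker-node compute cost; excludes storage, networking, and cluster fees."""
    return round(nodes * hourly_rate * HOURS_PER_MONTH * (1 - discount), 2)


for option, discount in DISCOUNTS.items():
    print(f"{option:>22}: ${monthly_node_cost(3, 0.20, discount):,.2f}")
```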


Other Kubernetes Services on Azure

Azure Container Instances

Azure Container Instances (ACI) provide a lightweight, serverless solution for running containers without managing any underlying virtual machines or Kubernetes clusters. This service is particularly useful for running quick, stateless tasks, batch processing, or temporary workloads.

Key features of ACI include:

- Simplified Deployment: Containers can be started and run in seconds without the need to manage the underlying infrastructure.

- Elasticity: ACI supports rapid scaling up or down to accommodate fluctuating workloads, enabling cost-effective operation.

- Seamless Integration with AKS: When paired with AKS, ACI acts as a virtual node, allowing AKS clusters to offload excess workloads during high traffic periods.

- Networking Support: ACI supports both public IPs for internet-facing workloads and private networking for secure, internal applications.

- Cost Efficiency: You pay only for the vCPU and memory resources your containers consume, billed per second, with no need for reserved capacity.

Azure Container Registry

Azure Container Registry (ACR) is a fully managed Docker-compatible registry service for storing and managing container images at scale. ACR simplifies the development and deployment of containerized applications by providing a secure and reliable image repository.

Key capabilities of ACR include:

- Centralized Image Management: ACR serves as a single hub for all your container images, including private registries for enhanced security.

- AKS Integration: Once a registry is attached to a cluster (for example, with `az aks update --attach-acr`), AKS can pull images stored in ACR without separate image pull secrets, which keeps deployment workflows simple.

- Geo-Replication: Automatically replicates images across multiple Azure regions, improving image pull times and reducing latency.

- Build Automation: Supports automated container builds and image updates using integrations with CI/CD pipelines such as Azure DevOps or GitHub Actions.

- Security Features: Includes advanced security with image scanning for vulnerabilities and the option to enable content trust using Docker Content Trust to verify image integrity.

- Performance Optimization: Offers accelerated networking for faster image push and pull speeds, ensuring rapid deployment.

Azure Container Apps

Azure Container Apps is a serverless container platform tailored for building and running microservices and event-driven applications with minimal operational overhead. It combines the flexibility of Kubernetes with the simplicity of a managed service.

Key features of Azure Container Apps include:

- Scalable and Event-Driven Architecture: Automatically scales applications based on metrics such as HTTP requests, events, or queue workloads, and supports scale-to-zero for inactive apps to minimize costs.

- Dapr Integration: Comes pre-integrated with the Distributed Application Runtime (Dapr), simplifying microservice communication, state management, and pub/sub messaging.

- Environment Isolation: Provides options for private, public, or hybrid networking configurations to meet security and connectivity requirements.

- Streamlined Development: Enables developers to focus on building applications without worrying about managing Kubernetes infrastructure or setting up complex deployment pipelines.

- Open Standards: Supports Kubernetes-compatible technologies, allowing portability and integration with existing tools and processes.

- Optimized for Microservices: Suited for applications that need distributed architectures or asynchronous processing.

Azure Arc-Enabled Kubernetes

Azure Arc-enabled Kubernetes provides a bridge between Azure services and Kubernetes clusters running outside Azure, such as on-premises or in other cloud environments. It empowers organizations with a unified management and governance model for hybrid and multi-cloud setups.

Key features of Azure Arc-enabled Kubernetes include:

- Centralized Management: Unifies operations by enabling users to register Kubernetes clusters with Azure and manage them through a single control plane in the Azure portal.

- Policy Compliance: Allows consistent application of policies across all clusters using Azure Policy, ensuring governance and regulatory compliance.

- Azure Service Integration: Extends the capabilities of external clusters by enabling the use of Azure-native services such as Azure Monitor for telemetry, Microsoft Defender for Cloud (formerly Azure Security Center) for threat protection, and Azure Key Vault for secrets management.

- GitOps Deployment: Facilitates GitOps-based workflows for managing configurations and application deployments, making it easier to maintain consistency and reduce manual errors.

- Works Anywhere: Supports clusters running in any environment, including VMware, bare-metal servers, AWS, GCP, or edge locations, providing complete flexibility.

- Enhanced Security: Centralizes identity and access management using Azure Active Directory, enabling secure and streamlined access control for distributed teams.

AKS Tutorial: Create an AKS Cluster

Before you start, ensure that you have an Azure subscription. If you don’t, sign up for a free Azure account.

Follow these steps to create an AKS cluster:

- Log in to the Azure portal.

- In the Azure portal menu or on the Home page, click on Create a resource.

- Choose Containers > Kubernetes Service.

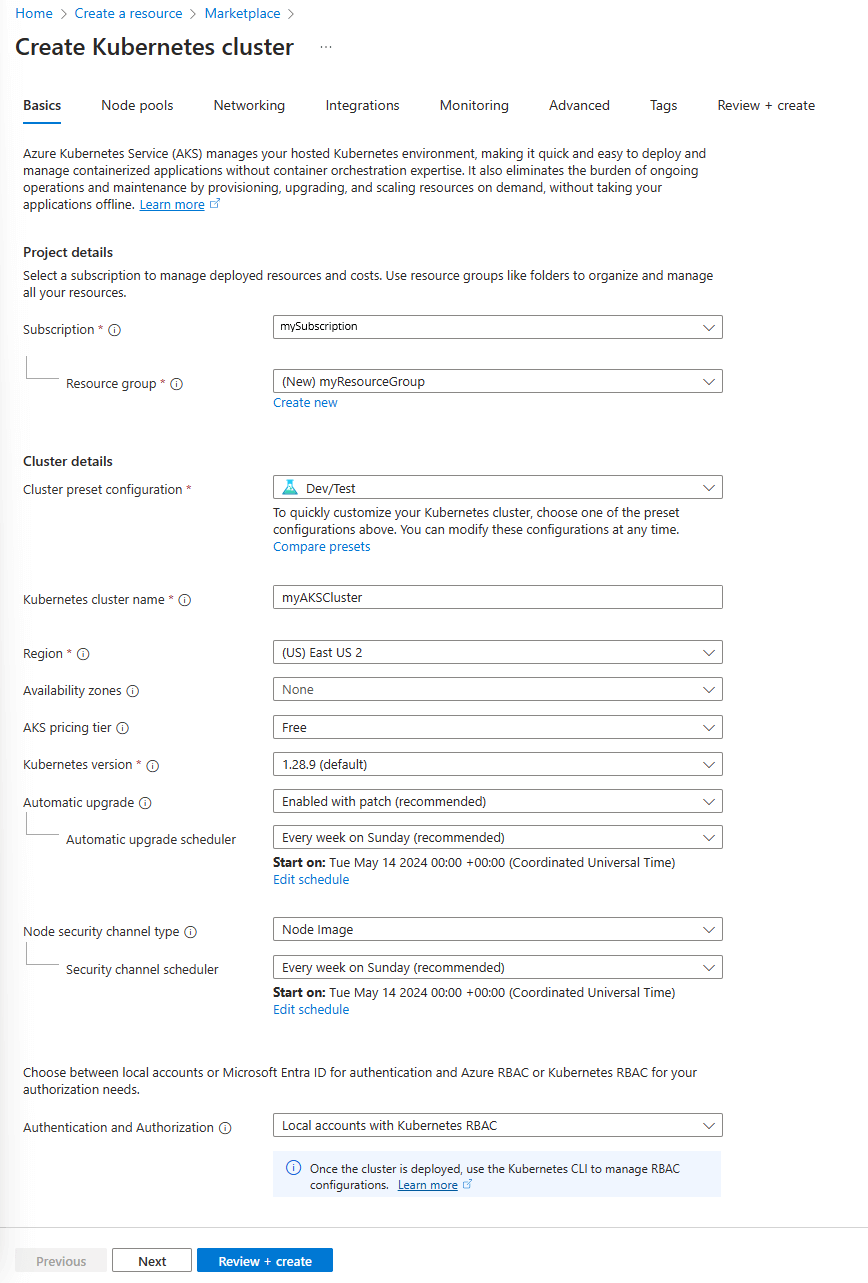

- In the Basics tab, set the following options:

Project details:

- Choose an Azure Subscription.

- Pick or create an Azure Resource group, such as myResourceGroup.

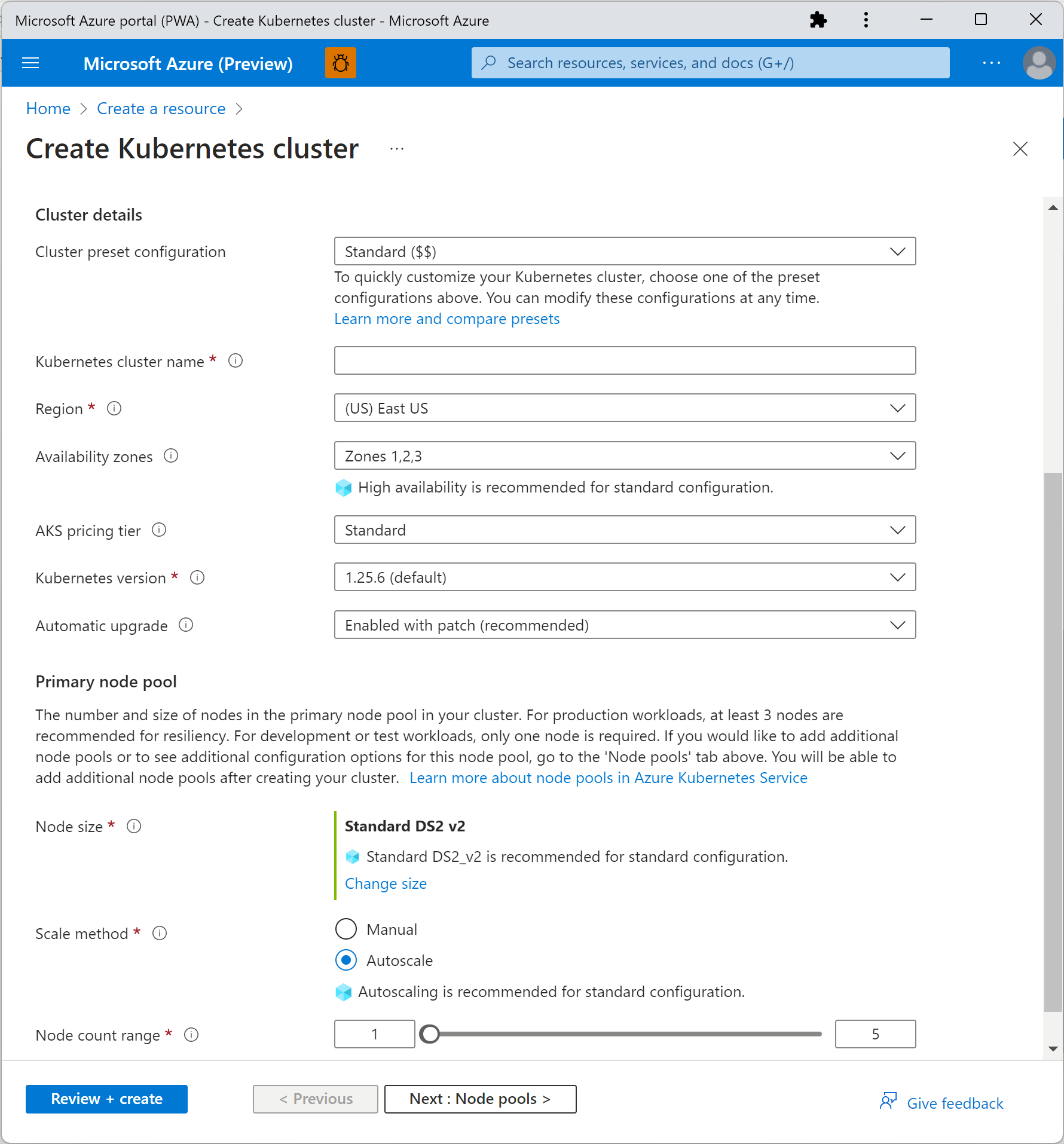

Cluster details:

- Set the Preset configuration to Standard ($$). For additional information on preset configurations, refer to the Cluster configuration presets in the Azure portal.

- Provide a Kubernetes cluster name, like myAKSCluster.

- Choose a Region for the AKS cluster and retain the default value for the Kubernetes version.

- Set API server availability to 99.5%.

Primary node pool:

- Keep the default values.

Image Source: Azure

- Click Next: Node pools when done.

- Retain the default Node pools settings and click Next: Access at the bottom of the screen.

- In the Access tab, configure these options:

- The default value for Resource identity should be System-assigned managed identity. Managed identities offer an identity for applications to use when connecting to resources that support Azure Active Directory (Azure AD) authentication.

- Keep the default Kubernetes role-based access control (RBAC) option, which provides more granular control over access to the Kubernetes resources deployed in your AKS cluster.

- Click Next: Networking when finished.

- Retain the default Networking settings and click Next: Integrations at the bottom of the screen.

- In the Integrations tab, enable the recommended out-of-the-box alerts for AKS clusters by selecting Enable recommended alert rules. You can view the list of alerts that are automatically enabled by choosing this option.

- Press Review + create. Upon navigating to the Review + create tab, Azure performs validation on the chosen settings. If the validation is successful, proceed to create the AKS cluster by clicking Create. If the validation fails, it will highlight the settings that need adjustment.

- It will take a few minutes to create the AKS cluster. Once your deployment is complete, access your resource by either:

- Clicking Go to resource, or

- Navigating to the AKS cluster resource group and selecting the AKS resource. In this example, search for myResourceGroup and choose the resource myAKSCluster.
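The portal choices above can also be expressed as an Azure Resource Manager request. The sketch below builds the JSON body for a PUT on a Microsoft.ContainerService/managedClusters resource. It mirrors this walkthrough with a system-assigned managed identity, Kubernetes RBAC, and one system node pool. The node count, VM size, region, and DNS prefix are example values. The subscription ID and api-version are deliberately left as placeholders; take the api-version from the current AKS REST API reference. In practice, the Azure CLI (az aks create) or an SDK would send this request for you. Afterwards, az aks get-credentials --resource-group myResourceGroup --name myAKSCluster merges the cluster's credentials into your kubeconfig for kubectl.

```python
import json

SUBSCRIPTION_ID = "<subscription-id>"  # placeholder
API_VERSION = "<api-version>"  # placeholder: take from the current AKS REST reference


def managed_cluster_request(resource_group: str, name: str, location: str) -> tuple[str, dict]:
    """Return the ARM path and body for creating a basic AKS cluster (illustrative sketch)."""
    path = (
        f"/subscriptions/{SUBSCRIPTION_ID}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.ContainerService/managedClusters/{name}"
        f"?api-version={API_VERSION}"
    )
    body = {
        "location": location,
        # Matches the portal default: system-assigned managed identity.
        "identity": {"type": "SystemAssigned"},
        "properties": {
            "dnsPrefix": f"{name}-dns",
            # Kubernetes RBAC, as kept in the Access tab.
            "enableRBAC": True,
            "agentPoolProfiles": [
                {
                    "name": "agentpool",
                    "mode": "System",
                    "count": 2,
                    "vmSize": "Standard_D2s_v3",  # example size
                    "osType": "Linux",
                }
            ],
        },
    }
    return path, body


path, body = managed_cluster_request("myResourceGroup", "myAKSCluster", "eastus")
print("PUT", path)
print(json.dumps(body, indent=2))
```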

Ensure availability and optimize Azure Kubernetes Service with Spot

Spot’s portfolio provides hands-free Kubernetes optimization. It continuously analyzes how your containers use infrastructure and automatically scales compute resources to maximize utilization and availability, using the optimal blend of spot, reserved, and pay-as-you-go virtual machine instances as well as Savings Plans.

- Dramatic savings: Access spare compute capacity for up to 91% less than pay-as-you-go pricing

- Cloud-native autoscaling: Effortlessly scale compute infrastructure for both Kubernetes and legacy workloads

- High-availability SLA: Reliably leverage Spot VMs without disrupting your mission-critical workloads

