What Is AWS ECS (Elastic Container Service)?

AWS ECS (Elastic Container Service) is a container orchestration service that is provided and fully managed by Amazon Web Services (AWS). It allows developers to run and manage Docker containers in a scalable, highly available, and fault-tolerant manner.

With ECS, users can deploy, scale, and manage container-based applications through an API, a command-line interface, and a web console. When ECS is paired with AWS Fargate, there is no underlying server infrastructure for the user to manage.

Some of the key features of AWS ECS include:

- Integration with various AWS services, including Elastic Load Balancing (ELB), Amazon Route 53, and AWS Identity and Access Management (IAM)

- Auto scaling of container instances based on demand and resource usage

- Load balancing of traffic between containers and services

- High availability and resiliency through multiple availability zones

- Security through integration with AWS IAM for resource-level access control

- Monitoring and logging through integration with AWS CloudWatch and AWS CloudTrail

In this article:

- Options for Running Container Workloads in AWS

- Amazon ECS vs. Kubernetes

- AWS ECS: Architecture and Components

- AWS ECS Pricing Models

- AWS ECS Best Practices

Options for Running Container Workloads in AWS

On AWS, there are different container management approaches that can be used, including:

- Amazon ECS: AWS's own managed container orchestrator. It runs and scales Docker containers on a cluster of EC2 instances or on Fargate, and integrates closely with other AWS services.

- Amazon Elastic Kubernetes Service (EKS): A fully managed Kubernetes service provided by AWS. It allows users to run Kubernetes clusters on AWS without the need to manage the underlying infrastructure. With EKS, users can deploy, scale, and manage containerized applications using Kubernetes APIs and tools.

- AWS Fargate: A serverless compute engine for containers that works with both ECS and EKS rather than replacing them. With Fargate, users can launch and manage containers at scale without provisioning or managing servers.

- Self-managed Kubernetes: AWS also provides the option to deploy and manage Kubernetes clusters on EC2 instances through a self-managed approach. This gives users more flexibility and control over their container orchestration environment, but requires more setup and management on the user’s part.

Amazon ECS vs. Kubernetes

What is Kubernetes?

Kubernetes is a widely used, open-source platform for orchestrating containers. It automates the deployment, management, and scaling of containerized applications. It was originally developed by Google and is now maintained by the CNCF (Cloud Native Computing Foundation).

Kubernetes provides a set of APIs for running containerized applications across clusters of hosts. It allows users to define and manage complex application environments that span multiple containers and multiple hosts, and it provides features such as auto scaling, load balancing, rolling updates, and self-healing.

Amazon ECS vs. Kubernetes: key differences

While both Amazon ECS and Kubernetes are container orchestration platforms, there are some important differences between the two:

| | Amazon ECS | Kubernetes |
|---|---|---|
| Managed | Yes | No |
| Integration | Tightly integrated with AWS services | Can run on any cloud or on-premises infrastructure |
| Simplicity | Simple and streamlined solution | More complex and customizable |
| Ease of use | Generally considered easier to use | Steeper learning curve |
| Load balancing | Built-in | Requires configuration |
| Auto scaling | Built-in | Requires configuration |
| Scaling limits | Depends on the underlying Amazon EC2 instance types | Depends on the underlying infrastructure |
| Community support | Large and growing | Large and growing |

Ultimately, the choice between Amazon ECS and Kubernetes depends on the specific needs and preferences of the user.


AWS ECS: Architecture and Components

ECS Clusters

An ECS cluster is a logical grouping of EC2 instances or Fargate resources that are used to run containers. ECS clusters provide a way to manage and scale container deployments across a group of instances or resources.

Containers and Images

Containers are lightweight, portable, and executable packages that contain all the necessary dependencies and configuration to run an application. Containers are based on container images, which are read-only templates that define the container’s operating system, runtime, and application code. Docker is a popular container technology used with ECS. Users can create their own custom container images or use existing ones from a public or private container registry.

Task Definitions

A task definition is a blueprint that defines how a container should run within an ECS cluster. It includes information such as which container image to use, how much CPU and memory to allocate, network configuration, and storage requirements. A task definition can define one or more containers that are launched together as a task.
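To make the blueprint idea concrete, here is a minimal sketch in Python that builds a task definition as a plain dictionary. The field names follow the ECS task definition format as we understand it; the helper `build_task_definition`, the family name, and the image tag are hypothetical and only for illustration. Nothing here calls AWS, it only shows the shape of the data.

```python
import json


def build_task_definition(family, image, cpu_units=256, memory_mib=512, container_port=80):
    """Return a dict shaped like an ECS task definition for the Fargate launch type.

    cpu_units uses the ECS convention of 1024 units per vCPU.
    """
    return {
        "family": family,
        "networkMode": "awsvpc",
        "requiresCompatibilities": ["FARGATE"],
        # Task-level CPU and memory are expressed as strings.
        "cpu": str(cpu_units),
        "memory": str(memory_mib),
        "containerDefinitions": [
            {
                "name": f"{family}-container",
                "image": image,
                "essential": True,
                "portMappings": [
                    {"containerPort": container_port, "protocol": "tcp"}
                ],
            }
        ],
    }


# Hypothetical example values.
task_def = build_task_definition("web-app", "nginx:1.25")
print(json.dumps(task_def, indent=2))
```

A dictionary like this could then be serialized to JSON and registered through the console, CLI, or an SDK. Additional containers for the same task would be added as further entries in `containerDefinitions`.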

Tasks and Services

A task is an instantiation of a task definition, running on an EC2 instance or on Fargate. A task can include one or more containers that are launched together. A service manages and scales a set of tasks that share the same task definition. It keeps the desired number of tasks running at all times, replacing tasks that stop or fail, and it can scale the task count up or down automatically based on demand.
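The way a service keeps the desired number of tasks running can be pictured as a reconciliation loop. The sketch below is a toy model in Python, not the actual ECS scheduler: the `Service` class, its `reconcile` method, and the task IDs are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Service:
    """Toy model of a service that maintains a desired task count."""
    name: str
    desired_count: int
    running_tasks: list = field(default_factory=list)
    _next_id: int = 0

    def reconcile(self):
        """Start or stop tasks until the running count equals desired_count."""
        started, stopped = [], []
        while len(self.running_tasks) < self.desired_count:
            self._next_id += 1
            task_id = f"{self.name}-task-{self._next_id}"
            self.running_tasks.append(task_id)
            started.append(task_id)
        while len(self.running_tasks) > self.desired_count:
            stopped.append(self.running_tasks.pop())
        return started, stopped


svc = Service("web", desired_count=3)
svc.reconcile()                          # starts three tasks
svc.running_tasks.remove("web-task-2")   # simulate a task crashing
started, stopped = svc.reconcile()       # a replacement task is started
print(started, stopped)
```

Changing `desired_count` and calling `reconcile` again models a scale-out or scale-in event in the same way.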

Container Agent

The container agent is a component that runs on each EC2 instance in an ECS cluster. It communicates with the ECS control plane to receive tasks and to start or stop containers. The agent also monitors the state of the running containers and reports it back to the control plane.

AWS ECS and Fargate

AWS ECS can work with either EC2 instances or Fargate resources. When using EC2 instances, users must provision and administer the instances themselves. When using Fargate, AWS manages the infrastructure for the user, and users only need to specify the task definition and how many tasks they want to run.

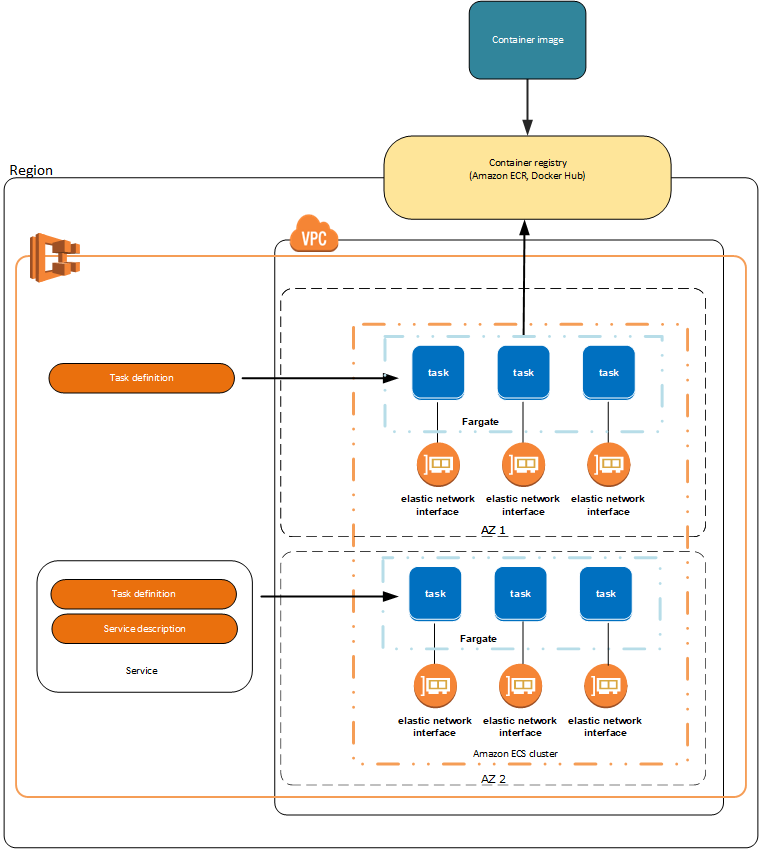

Here is a diagram that shows the architecture of an ECS environment running on Fargate:

AWS ECS Pricing Models

AWS ECS Pricing with EC2

With the Amazon EC2 launch-type model, users pay for the EC2 instances that are launched to run their containers, as well as any associated AWS resources such as Elastic Load Balancing (ELB) and Amazon EBS volumes. Pricing for EC2 instances is based on the instance type, operating system, and usage.

AWS ECS Pricing with Fargate

With the Fargate launch type model, users pay only for the virtual CPU (vCPU) and memory resources that their tasks request, for the time each task runs. Usage is metered per second, with a short minimum charge (one minute for Linux containers). With the Fargate launch type model, users don't pay for EC2 instances, and there is no separate charge for the ECS cluster itself.
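As a rough illustration of how the Fargate charge adds up, the following Python sketch multiplies requested vCPU and memory by task duration. The function name, the hourly rates, and the 60-second minimum are assumptions for illustration only; actual rates vary by Region and should be taken from the AWS pricing page.

```python
def fargate_task_cost(vcpu, memory_gb, seconds, vcpu_hour_rate, gb_hour_rate, min_seconds=60):
    """Estimate the cost of one task run.

    vcpu_hour_rate and gb_hour_rate are placeholder prices per vCPU-hour
    and per GB-hour. min_seconds models an assumed minimum billed duration.
    """
    billed_seconds = max(seconds, min_seconds)
    hours = billed_seconds / 3600
    return hours * (vcpu * vcpu_hour_rate + memory_gb * gb_hour_rate)


# Placeholder rates, not real AWS prices.
VCPU_RATE = 0.04
GB_RATE = 0.004

# A task requesting 0.5 vCPU and 1 GB of memory that runs for one hour.
cost = fargate_task_cost(0.5, 1, 3600, VCPU_RATE, GB_RATE)
print(f"Estimated cost: ${cost:.4f}")
```

Multiplying the per-task figure by the number of tasks and the hours they run per month gives a first-order estimate to compare against the cost of EC2 instances sized for the same workload.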

AWS ECS on Outposts

With AWS Outposts, users pay for the AWS Outposts infrastructure, as well as any associated AWS resources. Outposts is a fully managed Amazon service that extends AWS services, infrastructure, tools, and APIs to almost any data center, on-prem facility, or co-location space. Pricing for AWS Outposts is based on the instance type and usage, and users can choose to pay on a per-hour or per-second basis.

Amazon ECS Anywhere Pricing

Amazon ECS Anywhere enables users to run and manage containerized applications on any infrastructure, including on-premises data centers, edge locations, or multi-cloud environments. Users can choose to pay for resources on a per-hour or per-second basis, depending on their needs. Pricing depends on the number of hours ECS manages the infrastructure and may incur fees for the AWS Systems Manager Agent (SSM Agent).

Learn more in our detailed guide to AWS ECS pricing

AWS ECS Best Practices

Choose the Right Amazon EC2 Instance Type

The chosen EC2 instance type determines the amount of CPU, memory, and network resources that are available for running containers, and can have a significant impact on the performance, availability, and cost of the application.

There are many different EC2 instance types to choose from, each optimized for different types of workloads. Some of the factors to consider when choosing an EC2 instance type for ECS include:

- CPU: The amount of CPU required by the application can have a significant impact on the performance of the containerized application. Applications with high CPU requirements, such as those running data processing or machine learning workloads, may benefit from instances with higher numbers of CPU cores or higher clock speeds.

- Memory: The amount of memory required by the application is also an important consideration when choosing an EC2 instance type. Applications that require large amounts of memory, such as those running in-memory databases or big data workloads, may benefit from instances with larger memory capacities.

- Network: The network performance of an EC2 instance can impact containerized applications that rely on high-speed networking, such as microservices or distributed systems. Instance types that offer enhanced networking, and placing instances close together within an Availability Zone (for example, in a cluster placement group), can help to improve network performance and reduce latency.

- Instance size: The size of the EC2 instance is also an important consideration when choosing an instance type for ECS. Users should consider the number of containers that can be run on each instance, as well as the desired level of availability and performance.
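The instance-size consideration above comes down to simple arithmetic: how many tasks fit on one instance before CPU or memory runs out. Here is a minimal sketch, assuming the ECS convention of 1024 CPU units per vCPU. The function name and the `reserved_memory_mib` parameter (memory set aside for the operating system and container agent) are hypothetical.

```python
def tasks_per_instance(instance_vcpus, instance_memory_mib,
                       task_cpu_units, task_memory_mib,
                       reserved_memory_mib=0):
    """Return how many identical tasks fit on one instance.

    The result is limited by whichever resource is exhausted first.
    """
    available_cpu_units = instance_vcpus * 1024
    available_memory = instance_memory_mib - reserved_memory_mib
    fit_by_cpu = available_cpu_units // task_cpu_units
    fit_by_memory = available_memory // task_memory_mib
    return min(fit_by_cpu, fit_by_memory)


# An instance with 2 vCPUs and 8 GiB of memory, with 512 MiB held in reserve.
small_tasks = tasks_per_instance(2, 8192, 256, 512, reserved_memory_mib=512)
large_tasks = tasks_per_instance(2, 8192, 512, 2048, reserved_memory_mib=512)
print(small_tasks, large_tasks)
```

In the first case CPU is the limiting resource, and in the second case memory is. A large gap between the two limits suggests that an instance family with a different CPU-to-memory ratio may be a better fit.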

Configure Service Auto Scaling

Service auto scaling is a feature of AWS ECS that automatically scales services based on demand. It can help to ensure that the application is always available, and that resources are used efficiently.

When configuring service auto scaling, users should define scaling policies that determine when and how the service should scale. Users can define scaling policies based on CPU and memory utilization, or based on custom metrics.

Here are the three types of scaling policies that can be used with service auto scaling:

- Step scaling: Adjusts the desired number of tasks in response to changes in demand. When using step scaling, users define a set of scaling steps that specify how many tasks should be added or removed based on the scaling metric.

- Target tracking scaling: Adjusts the desired number of tasks to match the target value for a given metric. It lets users define target values for scaling metrics, and then AWS ECS automatically adjusts the number of tasks to maintain the target value.

- Scheduled scaling: Increases or decreases the number of tasks a service runs according to the date and time.
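The difference between step scaling and target tracking can be sketched with a little arithmetic. The Python below is a simplified model, not the algorithm AWS uses, which also involves CloudWatch alarms and cooldown periods. All function names, thresholds, and limits are hypothetical.

```python
import math


def target_tracking_desired(current_tasks, current_metric, target_metric,
                            min_tasks=1, max_tasks=100):
    """Scale the task count in proportion to how far the metric is from target."""
    raw = math.ceil(current_tasks * current_metric / target_metric)
    return max(min_tasks, min(max_tasks, raw))


def step_scaling_adjustment(metric_value, steps):
    """Return the task adjustment for the highest threshold the metric has reached.

    steps is a list of (threshold, adjustment) pairs.
    """
    adjustment = 0
    for threshold, change in sorted(steps):
        if metric_value >= threshold:
            adjustment = change
    return adjustment


# Target tracking: 4 tasks at 90% average CPU with a 60% target.
print(target_tracking_desired(4, 90, 60))

# Step scaling: add 1 task at 70% CPU or more, add 3 tasks at 85% or more.
steps = [(70, 1), (85, 3)]
print(step_scaling_adjustment(90, steps))
```

Target tracking needs only a single target value, while step scaling gives explicit control over how aggressively the service reacts at each threshold.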

Use Fargate Spot and EC2 Spot

Spot capacity refers to the excess capacity in AWS data centers that is priced at a significant discount compared to reserved or on-demand capacity. In exchange for the discount, AWS can reclaim Spot capacity on short notice when it needs the capacity back. Spot is therefore suitable for batch processing tasks, machine learning workloads, development and staging environments, and other workloads that can tolerate interruptions.

To help minimize capacity shortages when using Spot, users can leverage the following practices:

- Deploy across multiple Availability Zones and Regions: Spot capacity availability varies by AWS Region and Availability Zone. Running workloads in multiple regions and zones can improve availability. Specifying subnets in every Availability Zone in the Region where instances and tasks are run can further improve availability.

- Combine different types of Amazon EC2 instances: When using a mixed instances policy with Amazon EC2 Auto Scaling, launching multiple instance types into an Auto Scaling group makes it more likely that requests for Spot capacity can be fulfilled. Using instance types with similar amounts of memory and CPU in the mixed instances policy can maximize reliability while minimizing complexity.

- Use an allocation strategy to optimize Spot instances for capacity: Amazon EC2 Spot lets users choose an allocation strategy that favors either the lowest price or the most available capacity. The capacity-optimized strategy is usually the better choice when launching new instances, because it draws from the Spot pools with the most available capacity in a given Availability Zone, which reduces the likelihood of an instance being terminated soon after launch.
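The last two practices can be combined in a single Auto Scaling group configuration. The sketch below builds a mixed instances policy as a plain Python dictionary. The field names follow the EC2 Auto Scaling API as we understand it, while the helper function, the launch template name, and the choice of instance types are hypothetical. It does not call AWS.

```python
def build_mixed_instances_policy(launch_template_name, instance_types,
                                 on_demand_base=0, on_demand_percentage=0):
    """Return a dict shaped like an EC2 Auto Scaling MixedInstancesPolicy."""
    return {
        "LaunchTemplate": {
            "LaunchTemplateSpecification": {
                "LaunchTemplateName": launch_template_name,
                "Version": "$Latest",
            },
            # One override per instance type that the group may launch.
            "Overrides": [{"InstanceType": t} for t in instance_types],
        },
        "InstancesDistribution": {
            "OnDemandBaseCapacity": on_demand_base,
            "OnDemandPercentageAboveBaseCapacity": on_demand_percentage,
            "SpotAllocationStrategy": "capacity-optimized",
        },
    }


# Instance types with the same vCPU and memory footprint (2 vCPUs, 8 GiB).
policy = build_mixed_instances_policy(
    "ecs-spot-template",  # hypothetical launch template name
    ["m5.large", "m5a.large", "m4.large"],
    on_demand_base=1,
)
print(policy["InstancesDistribution"])
```

Keeping one instance as on-demand base capacity, as in this example, is one way to make sure some tasks keep running even if all Spot capacity is reclaimed at once.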

Make Container Images Complete and Static

A container image should contain everything the application needs to function, so that it can be run by downloading one container image from a single place. All the application dependencies should be stored as static files within the container image, rather than dynamically downloading dependencies, libraries, or critical data when starting the application.

If the container image includes all the dependencies in the form of static files, there are fewer things that can break during deployment. It also lets the container runtime fetch everything through the image download process, which can pull image layers in parallel, instead of waiting for the application to download dependencies one by one at startup.

Keeping all dependencies inside the image makes deployments more reliable and easier to reproduce. If dynamically loaded dependencies change, they can break an application whose image has not changed at all. As long as the container image remains self-contained, it can be redeployed at any time because it already includes the correct versions of its dependencies.

AWS ECS with Spot

With Spot, you can use automation and optimization to increase resource utilization and save up to 90% of cloud infrastructure costs.

Spot’s approach:

- Serverless Containers – deploy containers without infrastructure management

- Manage one cluster with multiple compute resources

- Automatic instance provisioning based on Services and Tasks requirements

- Intelligent provisioning of the optimal mix of spot, reserved, and on-demand capacity

Learn more about how Spot supports a simpler way to run Amazon ECS clusters.