Kubernetes has what you may consider an aggressive release cycle. There have been three to four releases per year since 1.0 was released in July of 2015. Perhaps you’ve found it all too easy to fall a couple of versions behind. Running the latest, or nearly the latest, release helps protect your organization from security issues, because a release stops being maintained once it falls three minor versions behind the latest. Staying current isn’t just about security, though! You also get access to new features and enhancements.

When using a managed Kubernetes service such as Amazon’s EKS, Google’s GKE, or Microsoft’s AKS, the control plane can be updated through a UI, a CLI, or an API call. However, this still leaves your worker nodes running an older version. Each cloud provider has options to bring your worker nodes up to the current version. In this example, we’ll be using Amazon’s EKS. Amazon has an excellent article covering the cluster upgrade process. A comment in that article is of particular relevance if you are using Spot Ocean to handle the lifecycle of your worker nodes:

“We also recommend that you update your self-managed nodes to the same version as your control plane before updating the control plane.”

Spot Ocean has you covered. You can update your worker nodes using a feature called “Cluster Roll.” This feature lets you update all of the nodes that are part of a virtual node group (VNG) in an orderly fashion. New nodes are brought up with whatever changes you are requesting. (In this case, we’ll be replacing the AMI with one that matches the new Kubernetes version.) Existing nodes are marked as “NoSchedule” and are drained so that the pods are transitioned to the new nodes.

If you would like to watch a demonstration of the whole process, a video is available at the link below. Otherwise, please continue reading.

https://youtu.be/aAzNv-rsv_A

Let’s walk through the process together.

The Demo Environment

We have an EKS cluster running K8s v1.19 and wish to upgrade to 1.20. Kubernetes upgrades should be done incrementally, one minor version at a time. We can verify the current version using the AWS CLI, kubectl, or a web UI. Provided the AWS CLI is configured with appropriate credentials, running:

    % aws eks describe-cluster --name knauer-eks-Zi4XDZoO
    {
        "cluster": {
            "name": "knauer-eks-Zi4XDZoO",
            ...
            "version": "1.19",
            ...
        }
    }

returns the version. An alternative method, assuming a valid kubeconfig file is available, would be to use kubectl:

    % kubectl version --short
    Client Version: v1.21.2
    Server Version: v1.19.8-eks-96780e
    WARNING: version difference between client (1.21) and server (1.19) exceeds the supported minor version skew of +/-1

As a side note: You also need to update the version of kubectl you are using periodically in order to be able to manage your clusters. Generally you want to stay within one minor release of whatever cluster version you are managing. In this example, we are getting a warning because the cluster we are managing is two minor versions behind the kubectl client.
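The skew rule can be checked mechanically. As a minimal sketch (the function names `minor_of` and `minor_skew` are made up for this illustration and are not part of kubectl), these shell helpers compute how many minor versions apart two version strings are:

```shell
# Hypothetical helpers for illustration; not part of kubectl or the AWS CLI.

# Print the minor version number of a string such as "v1.19.8-eks-96780e".
minor_of() {
  # Strip a leading "v", then take the second dot-separated field.
  printf '%s\n' "${1#v}" | cut -d. -f2
}

# Print the absolute minor-version distance between two versions.
minor_skew() {
  client_minor=$(minor_of "$1")
  server_minor=$(minor_of "$2")
  skew=$((client_minor - server_minor))
  if [ "$skew" -lt 0 ]; then
    skew=$((0 - skew))
  fi
  printf '%s\n' "$skew"
}
```

With the versions from the output above, `minor_skew v1.21.2 v1.19.8-eks-96780e` prints `2`, which is more than the supported skew of one and explains the warning.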

This cluster has two worker nodes currently.

    % kubectl get nodes
    NAME                                       STATUS   ROLES    AGE   VERSION
    ip-10-0-1-127.us-west-2.compute.internal   Ready    <none>   49m   v1.19.6-eks-49a6c0
    ip-10-0-3-146.us-west-2.compute.internal   Ready    <none>   45s   v1.19.6-eks-49a6c0

The first one listed is part of an AWS Auto Scaling group (ASG). Note: This ASG is not an EKS managed node group. The second one is part of a Spot Ocean managed VNG and is the node that will be replaced during the cluster roll.

Upgrade the Control Plane

There are multiple ways to upgrade our EKS cluster version. For this example cluster, we are going to use the AWS CLI to handle the upgrade.

From the AWS documentation, we need to run:

    % aws eks update-cluster-version \
        --region <region-code> \
        --name <my-cluster> \
        --kubernetes-version <desired version>

and substitute in some values. The “region” is the AWS region this EKS cluster is provisioned in. The “name” is the name of the EKS cluster. Set “kubernetes-version” to be your desired version, which should be the currently running version plus one. Since we are at 1.19, we’ll use “1.20”.
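The "current version plus one" rule is easy to script. The helper below is a small sketch; the name `next_minor` is hypothetical, and it assumes the version is given in the `MAJOR.MINOR` form that EKS reports (for example `1.19`):

```shell
# Hypothetical helper: print the next minor version for a "MAJOR.MINOR" string.
next_minor() {
  major=${1%%.*}        # text before the first dot
  rest=${1#*.}          # text after the first dot
  minor=${rest%%.*}     # drop any patch component if one is present
  printf '%s.%s\n' "$major" "$((minor + 1))"
}

# Example usage (requires AWS credentials, so it is left commented out):
# current=$(aws eks describe-cluster \
#   --region us-west-2 \
#   --name knauer-eks-Zi4XDZoO \
#   --query 'cluster.version' \
#   --output text)
# echo "Upgrade target: $(next_minor "$current")"
```

For the demo cluster, `next_minor 1.19` prints `1.20`, the value passed to `--kubernetes-version`.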

With all the required variables replaced, we’ll run:

    % aws eks update-cluster-version \
        --region us-west-2 \
        --name knauer-eks-Zi4XDZoO \
        --kubernetes-version 1.20

This will return an ID that can be used to check the status of the upgrade.

    % aws eks describe-update \
        --region us-west-2 \
        --name knauer-eks-Zi4XDZoO \
        --update-id ffe2232e-6389-4880-9ecc-4a6d65c1e42d
    {
        "update": {
            "id": "ffe2232e-6389-4880-9ecc-4a6d65c1e42d",
            "status": "InProgress",
            "type": "VersionUpdate",
            "params": [
                {
                    "type": "Version",
                    "value": "1.20"
                },
                {
                    "type": "PlatformVersion",
                    "value": "eks.1"
                }
            ],
            ...
        }
    }

The upgrade process will take several minutes. Eventually the status will return as “Successful.”
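Rather than re-running the command by hand, the status check can be wrapped in a polling loop. This is a sketch only: the function name `wait_for_eks_update` and its optional fourth argument (seconds between polls) are assumptions made for this example, and any status other than “InProgress” or “Successful” is treated as a failure.

```shell
# Hypothetical helper: poll an EKS update until it leaves the InProgress state.
# Arguments: region, cluster name, update ID, optional poll interval in seconds.
wait_for_eks_update() {
  region=$1
  cluster=$2
  update_id=$3
  interval=${4:-30}
  while :; do
    # --query and --output reduce the JSON response to the bare status string.
    status=$(aws eks describe-update \
      --region "$region" \
      --name "$cluster" \
      --update-id "$update_id" \
      --query 'update.status' \
      --output text) || return 1
    case "$status" in
      Successful) return 0 ;;
      InProgress) sleep "$interval" ;;
      *) echo "update ended with status: $status" >&2; return 1 ;;
    esac
  done
}

# Example usage (requires AWS credentials, so it is left commented out):
# wait_for_eks_update us-west-2 knauer-eks-Zi4XDZoO \
#   ffe2232e-6389-4880-9ecc-4a6d65c1e42d && echo "control plane upgraded"
```

The function returns zero only when the update reports “Successful”, so it can gate the worker node steps in a script.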

    {
        "update": {
            "id": "ffe2232e-6389-4880-9ecc-4a6d65c1e42d",
            "status": "Successful",
            "type": "VersionUpdate",
            ...
        }
    }

Once it has completed successfully, we can verify the control plane has been upgraded. The value returned for “Server Version” has updated and now shows 1.20.x instead of 1.19.x.

    % kubectl version --short
    Client Version: v1.21.2
    Server Version: v1.20.4-eks-6b7464

Upgrade the Worker Nodes

While the control plane has been upgraded to 1.20, our data plane or worker nodes are still running 1.19.

    % kubectl get nodes
    NAME                                       STATUS   ROLES    AGE   VERSION
    ip-10-0-1-127.us-west-2.compute.internal   Ready    <none>   90m   v1.19.6-eks-49a6c0
    ip-10-0-3-146.us-west-2.compute.internal   Ready    <none>   42m   v1.19.6-eks-49a6c0

Get a New AMI ID

Amazon makes updated AMIs available, but we need to get the AMI ID in order to move forward. One way to do this is to run a query using the aws command.

    % aws ssm get-parameter \
        --name /aws/service/eks/optimized-ami/1.20/amazon-linux-2/recommended/image_id \
        --region us-west-2 \
        --query "Parameter.Value" \
        --output text
    ami-0b05016e79e1e54c6

Now that we have the AMI ID we can proceed with upgrading the worker nodes that are part of the Ocean VNG.
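The Kubernetes version is embedded in the SSM parameter name, so the lookup can be parameterized for future upgrades. The sketch below assumes other versions follow the same path pattern as the 1.20 query above; the function name `eks_ami_parameter` is made up for this example.

```shell
# Hypothetical helper: build the SSM parameter name for the recommended
# EKS-optimized Amazon Linux 2 AMI of a given Kubernetes version.
eks_ami_parameter() {
  printf '/aws/service/eks/optimized-ami/%s/amazon-linux-2/recommended/image_id\n' "$1"
}

# Example usage (requires AWS credentials, so it is left commented out):
# ami_id=$(aws ssm get-parameter \
#   --name "$(eks_ami_parameter 1.20)" \
#   --region us-west-2 \
#   --query 'Parameter.Value' \
#   --output text)
# echo "$ami_id"
```

AMI IDs are specific to a region, so the `--region` value should match the region of the cluster.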

Edit the VNG

There are a few ways to initiate a cluster roll with Spot Ocean.

- Spot UI

- spotctl CLI

- Spot API

- SDK

We’ll go ahead and use the Spot UI for this example. Just be aware that there are automation-friendly methods available.

Note: You can find additional information on this process in the Spot documentation.

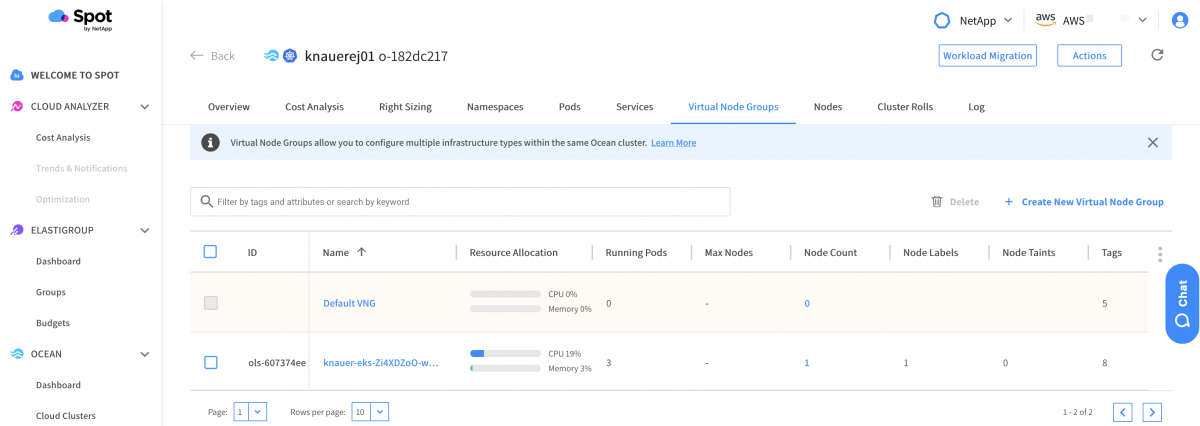

Log into the Spot UI and navigate to the “Virtual Node Groups” tab. Edit the virtual node group by clicking the VNG name.

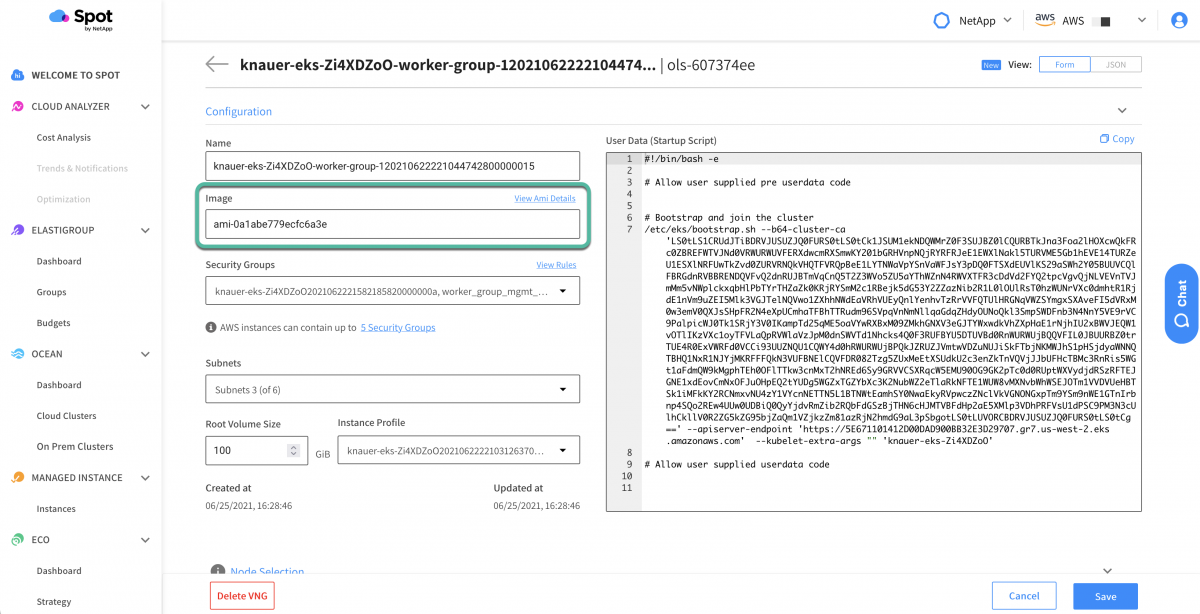

Now replace the “Image” value with the new AMI ID.

Note: We can use the “View AMI Details” link to double-check that this is the AMI we wanted.

Finally, don’t forget to press the “Save” button after you have pasted in the new AMI ID.

Start the Cluster Roll

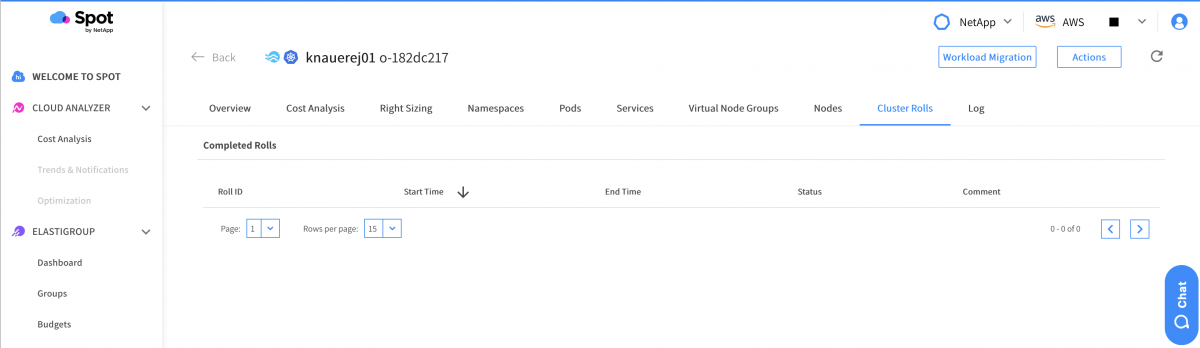

Starting the cluster roll is a quick process. First, switch to the “Cluster Rolls” tab.

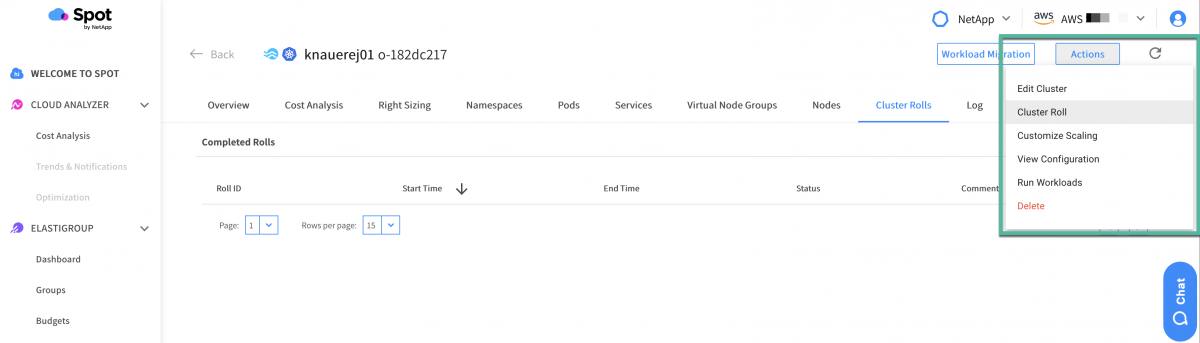

Second, select “Cluster Roll” from the “Actions” drop-down menu.

Since this VNG is only one node, there is no need to split the roll into smaller batches. The third step is to click the “Roll” button. This will submit the request and initiate the cluster roll.

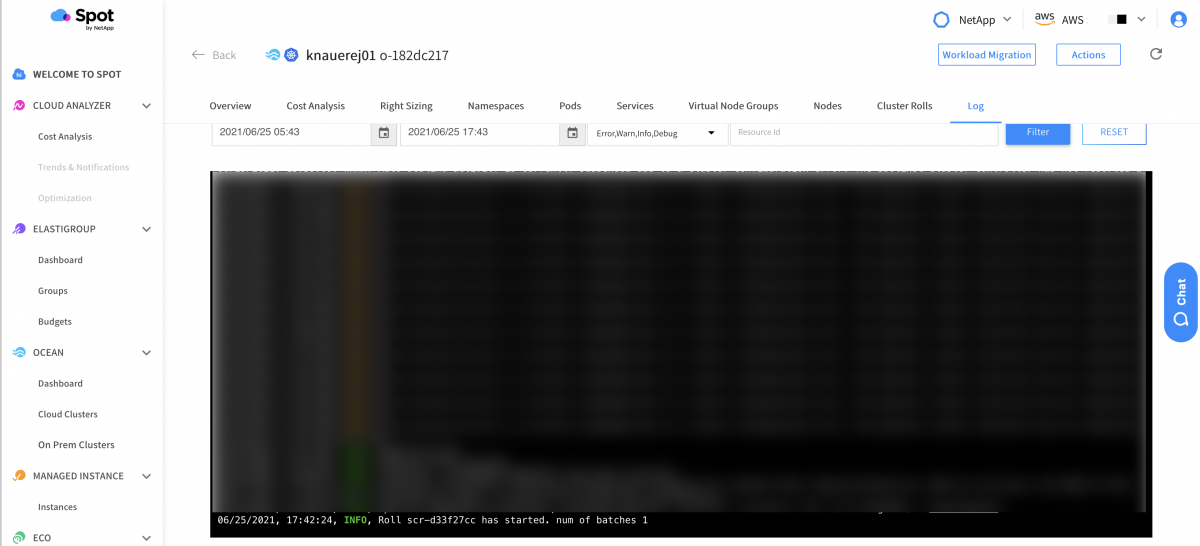

Finally, we can switch to the “Log” tab and verify that the cluster roll started.

What’s Happening in the Cluster?

Now that the cluster roll has the status “InProgress”, let’s take a deeper look at what is happening inside the cluster.

Running kubectl get nodes shows that we still have our two nodes. Notice that scheduling has been disabled for the worker node that the cluster roll is about to replace.

    % kubectl get nodes
    NAME                                       STATUS                     ROLES    AGE    VERSION
    ip-10-0-1-127.us-west-2.compute.internal   Ready                      <none>   121m   v1.19.6-eks-49a6c0
    ip-10-0-3-146.us-west-2.compute.internal   Ready,SchedulingDisabled   <none>   72m    v1.19.6-eks-49a6c0
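In a larger cluster it can be hard to spot the cordoned nodes by eye. As a small sketch (the function name `cordoned_nodes` is hypothetical), the output of `kubectl get nodes --no-headers` can be filtered on the STATUS column:

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the names of nodes whose STATUS includes SchedulingDisabled.
cordoned_nodes() {
  awk '$2 ~ /SchedulingDisabled/ { print $1 }'
}

# Example usage (requires cluster access, so it is left commented out):
# kubectl get nodes --no-headers | cordoned_nodes
```

For the listing above, this prints only `ip-10-0-3-146.us-west-2.compute.internal`, the node that the cluster roll is about to replace.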

Wait a minute or so and then re-run the same command. Spot Ocean provisioned a new node in the VNG with the updated AMI. The STATUS “NotReady” tells us it isn’t ready for pods yet.

    % kubectl get nodes
    NAME                                       STATUS                     ROLES    AGE    VERSION
    ip-10-0-1-127.us-west-2.compute.internal   Ready                      <none>   122m   v1.19.6-eks-49a6c0
    ip-10-0-1-33.us-west-2.compute.internal    NotReady                   <none>   16s    v1.20.4-eks-6b7464
    ip-10-0-3-146.us-west-2.compute.internal   Ready,SchedulingDisabled   <none>   74m    v1.19.6-eks-49a6c0

After another minute or two, the new node is ready to go.

    % kubectl get nodes
    NAME                                       STATUS                     ROLES    AGE    VERSION
    ip-10-0-1-127.us-west-2.compute.internal   Ready                      <none>   123m   v1.19.6-eks-49a6c0
    ip-10-0-1-33.us-west-2.compute.internal    Ready                      <none>   98s    v1.20.4-eks-6b7464
    ip-10-0-3-146.us-west-2.compute.internal   Ready,SchedulingDisabled   <none>   75m    v1.19.6-eks-49a6c0

Once its pods have been drained, our original Ocean node running 1.19 is removed from the EKS cluster. The new node is running v1.20.x, the same version as the updated control plane. The node that is part of the ASG, not the Ocean VNG, is still running the previous version.

    % kubectl get nodes
    NAME                                       STATUS   ROLES    AGE    VERSION
    ip-10-0-1-127.us-west-2.compute.internal   Ready    <none>   136m   v1.19.6-eks-49a6c0
    ip-10-0-1-33.us-west-2.compute.internal    Ready    <none>   13m    v1.20.4-eks-6b7464
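To confirm which nodes still need attention after a roll, the same listing can be filtered by version. This is an illustrative sketch: the function name `nodes_behind` is hypothetical, and it assumes the VERSION value is the last column of `kubectl get nodes --no-headers` output, as in the listings in this post.

```shell
# Hypothetical helper: read `kubectl get nodes --no-headers` output on stdin
# and print the name and version of every node whose MAJOR.MINOR version
# differs from the target given as the first argument (for example 1.20).
nodes_behind() {
  awk -v target="$1" '
    {
      version = $NF                  # VERSION is the last column
      sub(/^v/, "", version)         # drop the leading "v"
      split(version, parts, ".")
      # Append "" to force a string comparison, so 1.20 is not read as 1.2.
      if ((parts[1] "." parts[2]) != (target "")) print $1, $NF
    }'
}

# Example usage (requires cluster access, so it is left commented out):
# kubectl get nodes --no-headers | nodes_behind 1.20
```

Against the final listing above, this prints only the ASG node, `ip-10-0-1-127.us-west-2.compute.internal v1.19.6-eks-49a6c0`.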

Summary

We’ve successfully walked through the process of upgrading an EKS cluster’s worker nodes to a new Kubernetes version using Spot Ocean’s cluster roll feature. Kubernetes version upgrades are not the only use case for a cluster roll. You might need to move to a new AMI in response to a CVE, or roll out other changes to worker nodes even though the control plane isn’t being upgraded. Please stay tuned for additional posts highlighting time-saving features of Spot Ocean. Thanks for following along!