With frequent software changes across hundreds of services and multiple environments, even a small change made by a developer can have an unexpected blast radius in production. The standard Kubernetes deployment strategy, a rolling update, ignores this layer of modern complexity. DevOps organizations that maintain complex solutions have already realized that this approach does not scale and is costly to manage.

Today, we’re excited to announce the public beta release of Ocean CD, our continuous delivery product for Kubernetes applications. (Here you can find the quick enablement documentation for Spot users.)

Spot users can now easily extend Ocean’s coverage of Kubernetes to application delivery with Ocean for Continuous Delivery, evolving Spot Ocean into a full suite of Kubernetes solutions. In this post, we explore the market trends and the most common application delivery challenges that led us to develop Ocean CD and provide our users with the next generation of software deployment controllers.

What differentiates ‘elite’ DevOps organizations from the rest?

The key to understanding why Continuous Delivery (CD) is so challenging is to first understand what organizations are trying to achieve: make full use of their developers, release more often, and deliver more value to customers, all while minimizing production issues.

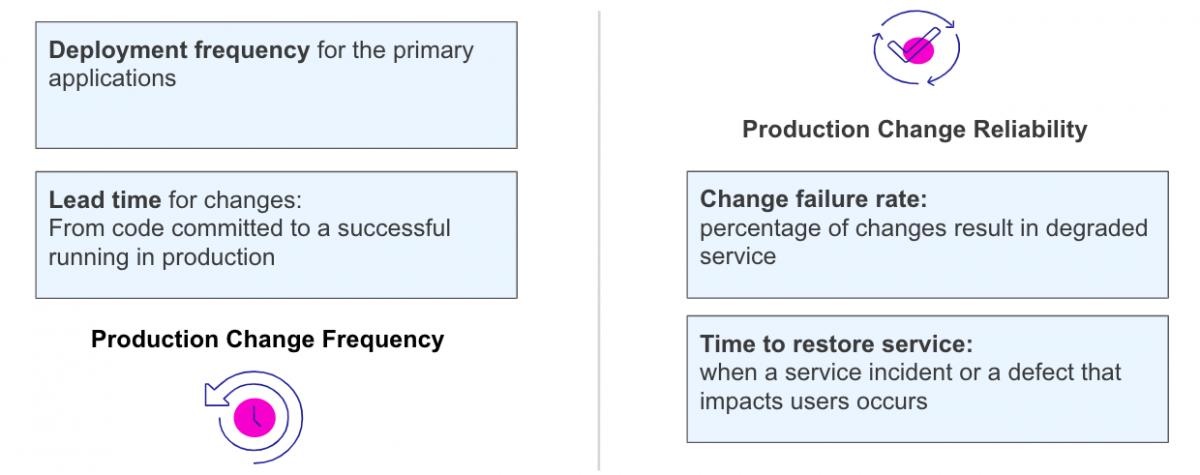

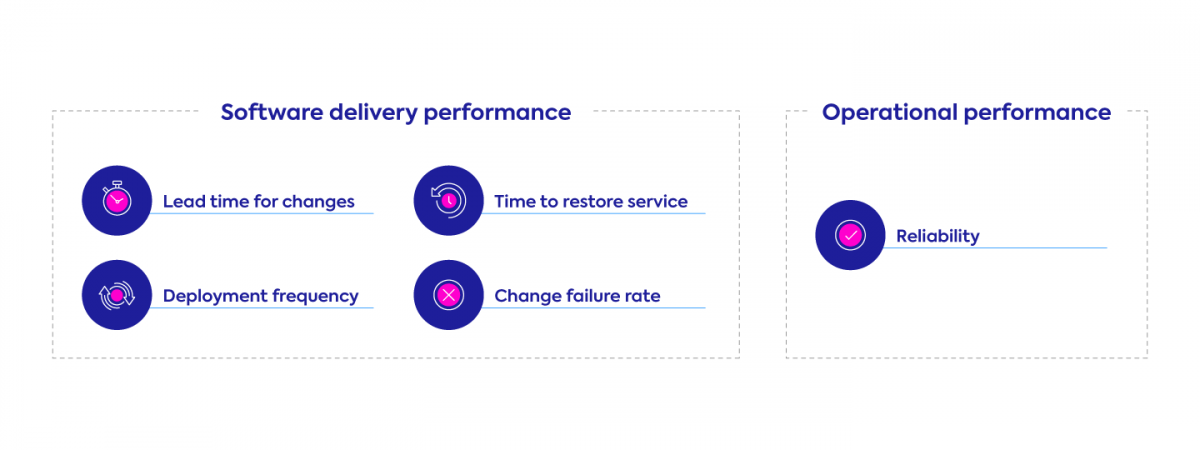

When measuring success in achieving those goals, there are four key metrics to look at, commonly known as the DORA metrics:

- The frequency with which updates and new features are deployed to production.

- The length of time it takes to release changes.

- The failure rate of releases.

- Recovery time when releases fail.
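To make these four metrics concrete, here is a minimal Python sketch that computes them from a list of release records. The `Deployment` record, its field names, and the `delivery_metrics` function are hypothetical and not part of any Spot or Ocean CD API; in practice this data would come from CI/CD and incident-tracking systems.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """One production release (hypothetical record shape)."""
    committed_at: datetime                   # when the change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did this release cause a failure?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def delivery_metrics(deploys: list[Deployment], period_days: int) -> dict:
    """Compute the four key delivery metrics over a reporting period."""
    if not deploys:
        raise ValueError("need at least one deployment")
    failures = [d for d in deploys if d.failed]
    restores = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        # 1. Deployment frequency: releases per day
        "deploys_per_day": len(deploys) / period_days,
        # 2. Lead time for changes: median commit-to-production time
        "median_lead_time": median(d.deployed_at - d.committed_at for d in deploys),
        # 3. Change failure rate: share of releases that failed
        "change_failure_rate": len(failures) / len(deploys),
        # 4. Time to restore service: mean recovery time of failed releases
        "mean_time_to_restore": (
            sum(restores, timedelta()) / len(restores) if restores else None
        ),
    }
```

Tracking these numbers per service over time shows whether release frequency is rising without a matching rise in failures and recovery time.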

The 2021 State of DevOps survey shows that 26% of organizations are considered elite: they successfully release multiple times a day, with minimal failures and quick recovery times.

But what about the remaining 74% that are struggling to improve their software delivery performance?

To understand why so many organizations are still striving to reach that elite level, we need to look at modern cloud trends. As technology enables more, organizations have more to take into account:

- We talked with dozens of customers who are using or migrating to Kubernetes while breaking their monoliths into microservices. These are welcome changes that make it possible to release more, but they also mean that multiple changes can easily occur in parallel and affect each other, which needs to be carefully monitored and managed.

- Deploying more often means adding more automation, which in turn requires building more logic into the process to reduce the need for manual intervention.

- Modern cloud technologies help to shift responsibility left to service owners and developers. This shift is crucial for organizations with limited DevOps resources, but it raises an important question: Do non-DevOps stakeholders have enough understanding and control over the delivery process to take this type of responsibility?

The secret metric no one talks about: software delivery process reliability

As release frequency grows, and since service owners don’t always have the tools, the attention, or even the capability to track the impact of every release, a new risk has emerged: the reliability of the deployment process and the quality of the released changes. Kubernetes and microservices make applications and environments more complex. For service owners, troubleshooting any type of issue becomes a nightmare, and failures either stay hidden in production or are discovered too late, after the damage has already been done.

So here is a more convincing answer to why 74% of organizations are still behind the elite DevOps organizations: they are there by choice. The reality is that organizations trying to release more are also more likely to fail more. These are the 74% who consciously choose to release less often and accept longer release cycles in order to stay in control and keep confidence in the process and in release reliability.

And so a fifth software delivery metric is revealed: the reliability of the deployed software. In many cases, it’s the lack of reliability that leads organizations that could, in theory, release more to be less agile, leaving unrealized the full R&D and business potential that modern cloud technology promises.

Canary deployments to the rescue

The good news is that, alongside advanced cloud technologies, progressive deployment strategies such as canary deployments, which allow gradual and controlled releases, have grown in popularity. Unfortunately, Kubernetes does not provide these strategies out of the box, but with the right mix of tools and processes you can implement them. The idea is not only to deploy gradually but also to catch issues in the early phases of the release and roll the application back to its stable state. So we found the solution, right?
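Stripped to its core, the canary idea is a loop: shift a little more traffic to the new version, check its health, and either continue or roll back. The Python sketch below assumes a fixed list of traffic percentages and two caller-supplied hooks; the step values and function names are illustrative assumptions, not taken from Kubernetes, Argo Rollouts, or Ocean CD.

```python
from typing import Callable, Sequence


def run_canary(
    set_canary_weight: Callable[[int], None],
    is_healthy: Callable[[int], bool],
    steps: Sequence[int] = (10, 25, 50, 100),
) -> str:
    """Shift traffic to the new version step by step; roll back on the first failed check.

    `set_canary_weight` and `is_healthy` are hypothetical stand-ins for a traffic
    router and a metrics check; they are not Kubernetes or Ocean CD APIs.
    """
    for weight in steps:
        set_canary_weight(weight)          # e.g. 10% of requests go to the canary
        if not is_healthy(weight):         # catch issues while exposure is still small
            set_canary_weight(0)           # send all traffic back to the stable version
            return f"rolled back at {weight}%"
    return "promoted"                      # every step passed its health check


# Example: a release that starts misbehaving once it receives half of the traffic.
applied: list[int] = []
result = run_canary(applied.append, lambda weight: weight < 50)
# result == "rolled back at 50%", applied == [10, 25, 50, 0]
```

A production controller also has to manage replica scaling, pauses between steps, and traffic routing, none of which this sketch attempts.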

Well, not yet.

Although the customers we interviewed consider canary deployment a best practice for software releases, many of them still struggle to implement it. Defining a canary strategy is not intuitive. Each strategy may include long, complex queries and conditions that vary from service to service. This may be bearable for a small number of services, but imagine what happens with many services whose deployment phases are similar but slightly different, or when the deployment logic needs to be updated across all of them.

Last but not least, as organizations’ cloud footprints grow, so does the complexity of their data and environments. A recent Forrester report asked, “How many tools does your organization currently use for monitoring?” The average answer was 8!

Now add traffic management tools and Kubernetes events on top of that, and you get perhaps the most challenging part of running a progressive delivery process: how do you orchestrate multiple analysis outcomes, traffic splitting, and automations?

All of this means that, to maintain a robust canary process, teams need an engine behind the scenes, not just a collection of imperative steps.

Control all your deployments with Ocean CD gradual deployment

That’s exactly the spot where Ocean CD comes in. Ocean CD is a continuous delivery product built for Kubernetes applications, and its focus is to make canary deployments a reality for organizations that seek to give R&D teams more control and reduce the DevOps burden.

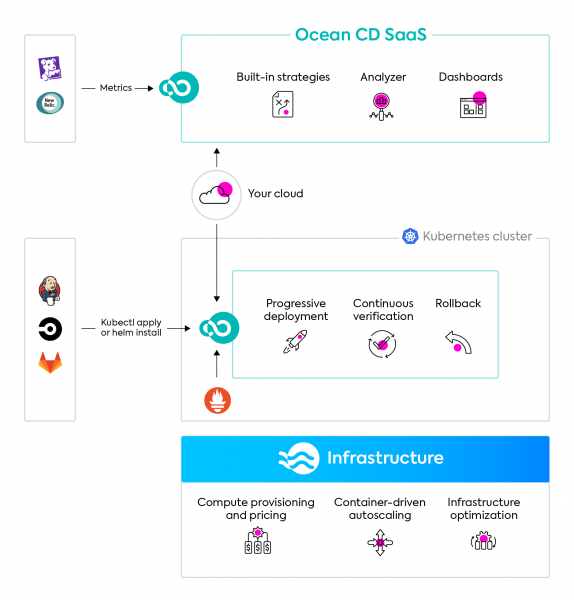

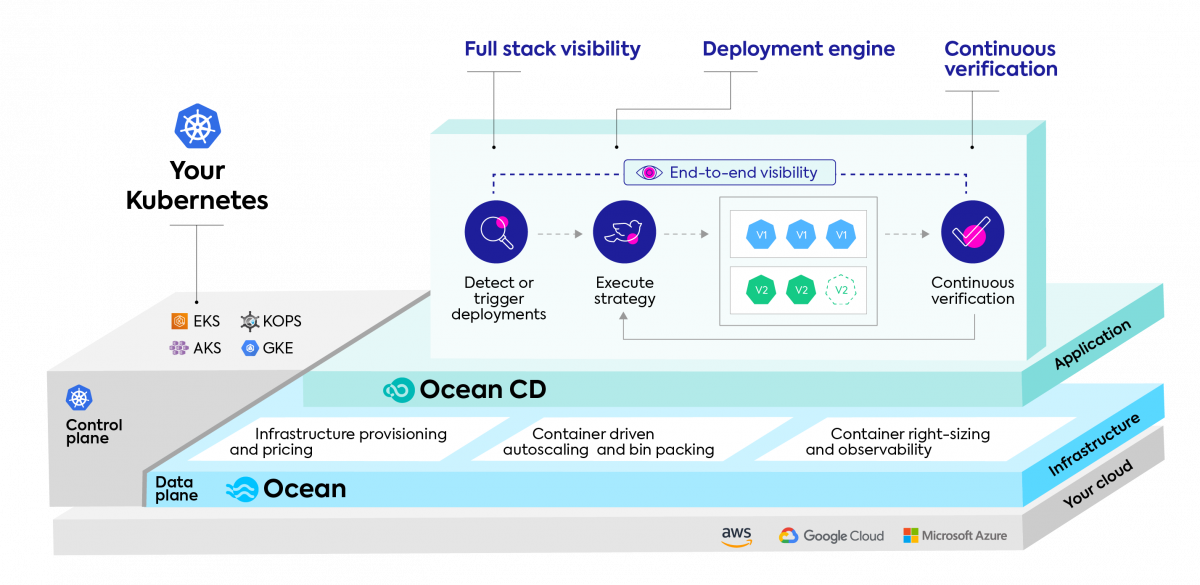

Ocean CD is a multi-cluster SaaS solution that uses popular CNCF open-source projects such as Argo Rollouts as its engine and layers many management capabilities on top. The Ocean CD SaaS provides a clear separation between what is being deployed and how the deployment is managed: which phases to run and how to determine success or failure.

Smart Deployments

Ocean CD’s architecture is built for quick enablement of smart deployment strategies such as canary, blue/green, or a simple rolling update, driven by verifications and failure policies.

The easy setup allows users to get from zero to full enablement of progressive deployment strategies within minutes.

It includes a guided setup, validations and checkpoints to make sure the strategy has been correctly defined.

A known problem with software releases in complex environments is that a linear improvement often requires exponential effort, which is absurd. You set out to upgrade your deployment pipelines and get lost among multiple services and imperative phases.

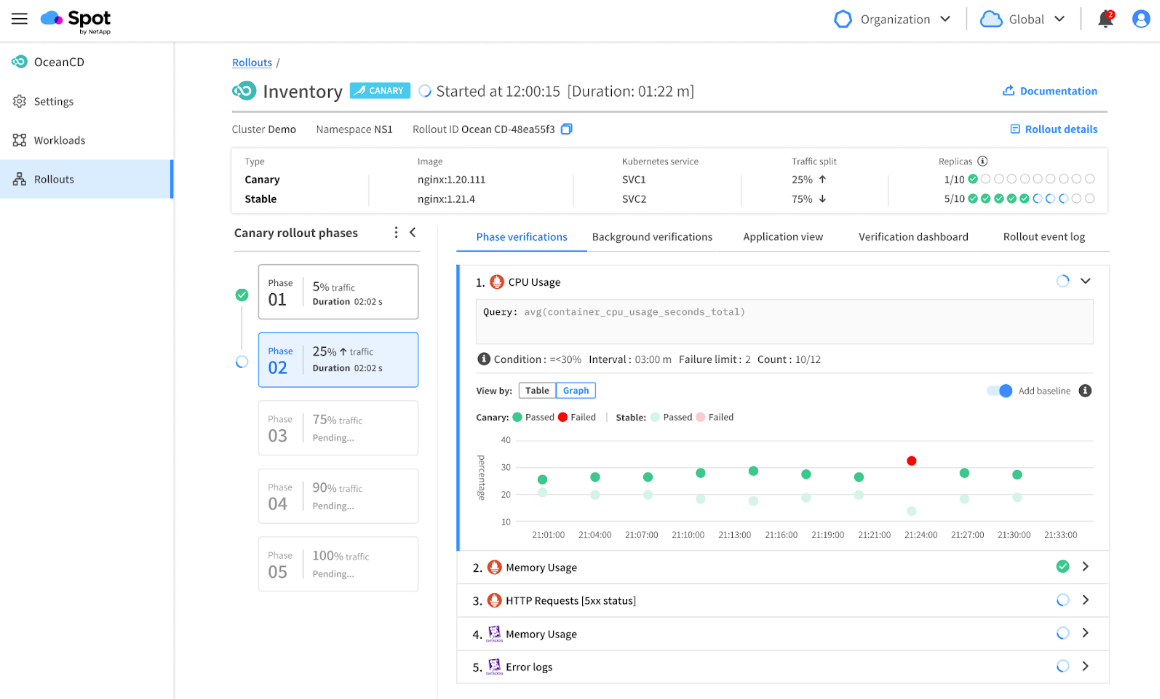

With Ocean CD, the canary mechanism can be easily customized, reused across services, and adjusted by the user. This is one of the most important capabilities for organizations that run pipelines for tens to hundreds of services, because changes can be made once and rolled out across environments and services, saving a great deal of time.
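One way to picture reuse across services is a single shared strategy template plus small per-service overrides. The sketch below is a hypothetical Python model of that idea; `CanaryStrategy`, its fields, and the service names are illustrative assumptions, not Ocean CD’s actual configuration schema.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class CanaryStrategy:
    """Hypothetical strategy shape; not Ocean CD's real schema."""
    steps: tuple[int, ...] = (10, 25, 50, 100)  # traffic % sent to the canary per phase
    pause_minutes: int = 5                       # soak time between phases
    max_error_rate: float = 0.01                 # verification threshold


# One shared template, defined once for every service.
BASE = CanaryStrategy()

# Each service declares only how it differs from the template.
OVERRIDES: dict[str, dict] = {
    "checkout": {"pause_minutes": 15, "max_error_rate": 0.001},
    "search": {"steps": (20, 100)},
    "profile": {},
}


def strategy_for(service: str) -> CanaryStrategy:
    """Resolve a service's strategy: the template plus that service's overrides."""
    return replace(BASE, **OVERRIDES.get(service, {}))
```

Because each service records only its differences, changing a default in `BASE` (for example, adding a phase) reaches every service that does not override that field, instead of requiring an edit to each pipeline.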

Continuous verification

The process is also verification-oriented: verifications actually steer the rollout, and automatic actions, such as rollbacks or manual intervention points, are taken based on the canary strategy definitions. This is a significant capability that we keep developing and improving for our customers. The continuous verification logic is managed in the Ocean CD SaaS and gives real control over, and understanding of, the application’s state in production. The most popular monitoring tools can be easily integrated to steer the deployment process automatically, while keeping it under control.
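As a rough illustration of verifications that steer a rollout, the sketch below turns metric samples from several monitoring sources into one of three actions: continue, pause for manual judgment, or roll back. The function name, the error-rate threshold, the `failure_limit` parameter, and treating missing data as inconclusive are all assumptions made for illustration; this is not Ocean CD’s verification engine or the Argo Rollouts analysis API.

```python
from enum import Enum
from typing import Optional


class Verdict(Enum):
    """Possible next actions for the rollout."""
    CONTINUE = "continue"
    PAUSE = "pause for manual judgment"
    ROLLBACK = "rollback"


def verify(
    measurements: dict[str, list[Optional[float]]],
    threshold: float,
    failure_limit: int = 0,
) -> Verdict:
    """Decide the next rollout action from error-rate samples.

    `measurements` maps a (hypothetical) monitoring source name to its samples;
    None means the source returned no data for that interval.
    """
    failures = inconclusive = 0
    for samples in measurements.values():
        for value in samples:
            if value is None:
                inconclusive += 1      # no data: cannot judge automatically
            elif value > threshold:
                failures += 1          # sample breaches the error-rate threshold
    if failures > failure_limit:
        return Verdict.ROLLBACK        # too many failed checks: revert to stable
    if inconclusive:
        return Verdict.PAUSE           # ask a human before going further
    return Verdict.CONTINUE


# Example: two sources, one sample above the 1% threshold, no failures tolerated.
decision = verify({"source_a": [0.002, 0.004], "source_b": [0.03]}, threshold=0.01)
# decision is Verdict.ROLLBACK
```

Counting failures across all sources, rather than trusting any single tool, is one simple way to combine several analysis outcomes into a single rollout decision.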

Developer-friendly visibility

Ocean CD provides user-friendly visibility to service owners and software developers, delivering, for the first time, a tool that opens the lid of the CD ‘black box’ and lets them make decisions, draw conclusions, or even automate.

The UI was designed so that non-DevOps users don’t need deep Ops skills, or need to waste time switching between multiple tools, to get a clear understanding of service releases.

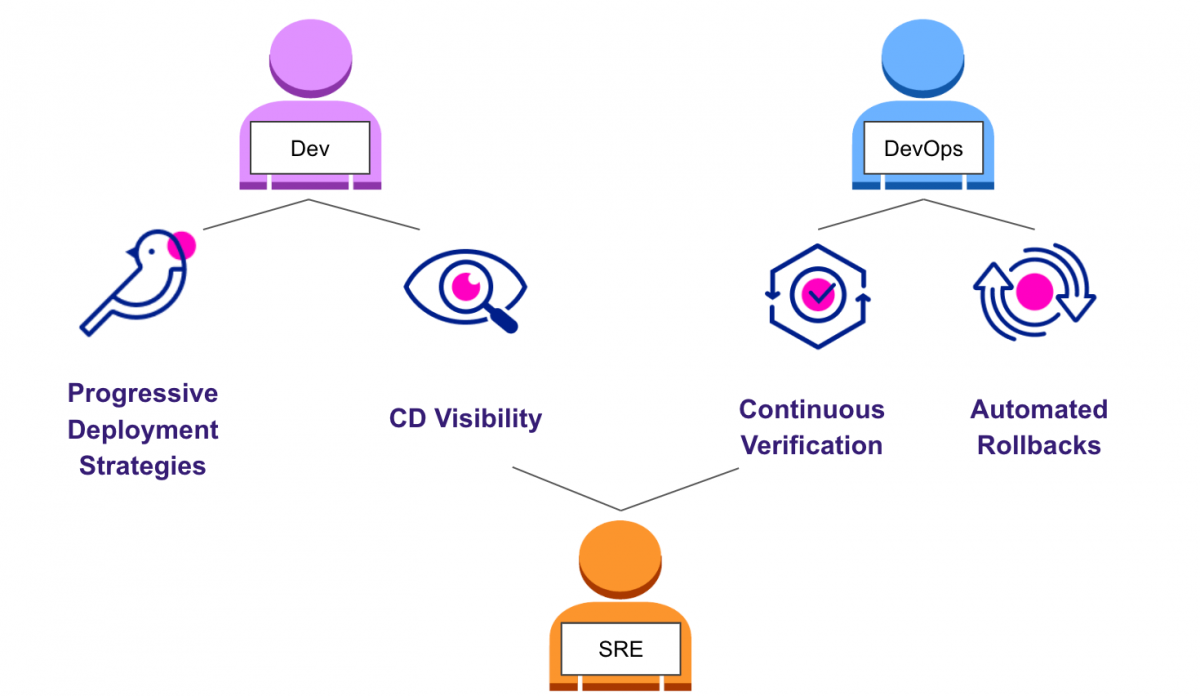

Ocean CD was built to provide a solution for each of the stakeholders that are part of the continuous delivery process:

- Developers who want a better understanding of what’s going on with a release.

- DevOps engineers who want to provide safe automation based on clear metrics.

- SREs who want to monitor changes to production environments in real time and investigate critical events.

Operating Kubernetes doesn’t have to be so hard

Ocean CD is a standalone solution, but it is also available as part of the powerful Spot console, which includes continuous optimization solutions for infrastructure and now also a solution for structured software delivery processes. You get a multi-cloud solution for all of your Kubernetes needs in one place, covering both the application and infrastructure layers.

Log in to your console today and use the quick enablement documentation to start using Ocean CD within minutes.