One of the many benefits of containers, and of Kubernetes by extension, is more efficient use of the underlying compute infrastructure. However, incorrect assumptions about CPU and memory requirements can incur far greater cloud infrastructure waste (and cost) for microservices than any miscalculations with monolithic applications could.

To avoid this inefficiency, monitoring the resources your K8s clusters are actually consuming, coupled with a means to automatically rightsize pod requirements, is essential for improving efficiency and ensuring that applications scale at a fraction of the cost.

In this post, we describe how we’ve used a K8s dynamic admission controller to automatically implement Ocean by Spot’s sizing recommendations, all without users needing to change how they generate their K8s YAMLs.

From Kubernetes observability to actionability

The famous management consultant, Peter Drucker, once noted, “If you can’t measure it, you can’t improve it.”

This is true with Kubernetes as well.

There are several ways to observe the amount of resources your Kubernetes application requires, such as cAdvisor, Metrics-Server, Prometheus and more. Ocean offers built-in, continuous monitoring of pod resource utilization, with analysis and recommendations for rightsizing resources.

However, even with those metrics available, we still need a painless, automatic way to ensure that the right resources are defined.

Fortunately for us, automating the process of incorporating those metrics into the pod/container resources definition can be easily done in 2 different ways:

- Changing our application K8s YAMLs in our CI/CD pipeline so that the resource requests match the values observed by the systems we described above (see this step-by-step example with Jenkins).

- Updating the pod resource requests “on the fly” just before the object is persisted into the K8s datastore (i.e. etcd).

In the following sections, we will focus on this second option.

Admission controllers

The Kubernetes site defines an admission controller as the “piece of code that intercepts requests to the Kubernetes API server prior to persistence of the object, but after the request is authenticated and authorized.” This means that whenever an API request is made to the K8s APISERVER, each enabled admission controller is responsible for doing “something” with the request.

What is this “something”, you ask? Well, it depends on the configuration of the “kube-apiserver” service. Let’s look at a few examples:

- Have you ever tried to delete a namespace that contains different K8s objects (Deployments/Pods/ConfigMaps/Secrets/etc.)? Did you notice that those objects were deleted as part of the Namespace deletion process? The NamespaceLifecycle admission controller protects the integrity of this process by ensuring that no new objects can be created in a namespace that is being terminated. If you go to the documentation, you’ll find that this admission controller is also responsible for rejecting the creation of objects in a namespace that doesn’t exist.

- Are you familiar with the ResourceQuota object that can restrict the allocation of a Namespace in terms of CPU, Memory, Storage and more? This “mechanism” works thanks to the ResourceQuota admission controller.

- Have you noticed that creating a PVC (PersistentVolumeClaim) results in a PV (PersistentVolume) even if you didn’t specify any StorageClass? This is due to the DefaultStorageClass admission controller, which watches for any new PVC created with no StorageClass defined in its spec and automatically adds the default StorageClass to it.

All the available admission controllers are documented here. Two additional admission controllers that are especially important for this blog post are ValidatingAdmissionWebhook and MutatingAdmissionWebhook.

Now that we know exactly what admission controllers are, let’s describe what “Dynamic Admission Controllers” are.

Dynamic Admission Controllers

The Kubernetes site says that “admission plugins can be developed as extensions and run as webhooks configured at runtime”. In other words, admission plugins are “pieces of code” that can be developed by K8s users/admins, and that intercept API requests before they are persisted to the DB. The main advantage of this is that unlike the “regular” admission controllers (whose functionality is set by the K8s maintainers), these dynamic controllers can contain any logic that the user needs (as long as it respects the webhook’s API schema).

A little piece of history: This mechanism of allowing users to add their own functionality (by writing their own code) to a system is not new. If you’ve worked in the mainframe industry (as I did), you might find admission controllers very similar to z/OS exit routines. These exits have the ability to manipulate processes (convert encoding, duplicate data streams, etc.) while keeping the OS-specific processes in place. Enough history (although the mainframe is still up and running :-).

There are 2 types of admission webhooks: Validating and Mutating.

Mutating admission webhooks

MutatingAdmissionWebhook is called first. The purpose of this webhook is to change a K8s object before it is persisted by the APISERVER. For example, if you want to ensure that every deployment comes with an antiAffinity definition in order to spread the different pods of the deployment across different worker nodes (see my blog about K8s scheduling), you can use the mutating webhook to inject the antiAffinity definition if the deployment comes without it. One thing to consider is that although the object can/will be mutated, this doesn’t ensure that it will be persisted by the APISERVER, as later controllers (such as the one we’ll discuss next) might reject the request.
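To make the antiAffinity example a bit more concrete, here is a minimal sketch in Python of what such a webhook could send back. It assumes the `admission.k8s.io/v1` AdmissionReview format, where the mutation is returned as a base64-encoded JSON Patch. The function names, the soft anti-affinity rule and its weight are my own illustrative choices, not code from any real project.

```python
import base64
import json

POD_SPEC_PATH = "/spec/template/spec"


def anti_affinity_rule(match_labels: dict) -> dict:
    """Hypothetical soft rule: prefer not to co-locate pods with the same labels."""
    return {
        "preferredDuringSchedulingIgnoredDuringExecution": [{
            "weight": 100,
            "podAffinityTerm": {
                "labelSelector": {"matchLabels": match_labels},
                "topologyKey": "kubernetes.io/hostname",
            },
        }]
    }


def mutate_deployment(review: dict) -> dict:
    """Build an AdmissionReview response that injects podAntiAffinity if it is missing."""
    request = review["request"]
    deployment = request["object"]
    pod_spec = deployment["spec"]["template"]["spec"]
    rule = anti_affinity_rule(deployment["spec"]["selector"]["matchLabels"])

    affinity = pod_spec.get("affinity")
    if affinity is None:
        # JSON Patch "add" needs an existing parent, so create the whole affinity block.
        patch = [{"op": "add", "path": f"{POD_SPEC_PATH}/affinity",
                  "value": {"podAntiAffinity": rule}}]
    elif "podAntiAffinity" not in affinity:
        patch = [{"op": "add", "path": f"{POD_SPEC_PATH}/affinity/podAntiAffinity",
                  "value": rule}]
    else:
        patch = []  # The user already defined antiAffinity, so leave it untouched.

    response = {"uid": request["uid"], "allowed": True}
    if patch:
        response["patchType"] = "JSONPatch"
        response["patch"] = base64.b64encode(json.dumps(patch).encode()).decode()
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}
```

Note that the sketch only adds the definition when it is absent, so a deployment that already carries its own antiAffinity passes through unchanged.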

Validating admission webhooks

ValidatingAdmissionWebhook is called last in the processing chain, and it cannot change the object, only validate it. It is a great mechanism for denying any request that doesn’t comply with the policies of your organization. For example, if you want to verify that no one is using a hostPath volume in their deployment, you can use a validating webhook to reject these API requests.
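The hostPath example could look roughly like the following Python sketch. Again, it assumes the `admission.k8s.io/v1` AdmissionReview format and a Deployment as the incoming object. The function name, the 403 code and the wording of the message are hypothetical.

```python
def validate_no_host_path(review: dict) -> dict:
    """Build an AdmissionReview response that denies Deployments using hostPath volumes."""
    request = review["request"]
    pod_spec = request["object"]["spec"]["template"]["spec"]
    volumes = pod_spec.get("volumes") or []

    # Collect the names of all volumes backed by hostPath.
    offending = [v.get("name", "<unnamed>") for v in volumes if "hostPath" in v]

    response = {"uid": request["uid"], "allowed": not offending}
    if offending:
        # The status message is what the user sees when the request is rejected.
        response["status"] = {
            "code": 403,
            "message": "hostPath volumes are not allowed: " + ", ".join(offending),
        }
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}
```

Unlike the mutating case, the response never carries a patch. The only decision is whether `allowed` is true or false.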

Using Dynamic Admission Webhooks with Spot’s rightsizing recommendations

At Spot, we provide our users with right-sizing recommendations for their pod resource requests as part of our K8s infrastructure management solution. With these recommendations, users can better define the resources a pod needs to operate, and eliminate the unneeded capacity in their original definitions.

To implement these recommendations without any additional change to the pipeline users rely on to deploy applications to the K8s cluster, we developed a webhook server, the ocean-right-sizing-mutator. It listens for mutating webhook requests, interacts with the Ocean Right-Sizing API, and mutates the resource definition if it is, percentage-wise, lower or higher than the recommendation given by the Ocean API. The project’s README documents how to install the webhook server in your cluster, and how to annotate your deployment so that the webhook will mutate it.
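To illustrate the “percentage-wise” comparison, here is a small Python sketch of one way such a decision could be made. This is not the actual logic of the ocean-right-sizing-mutator. The 15% threshold, the units (millicores and MiB), the key names and the choice to fill in missing requests are all assumptions made for the example.

```python
def deviates(current: float, recommended: float, threshold_pct: float) -> bool:
    """Return True if current differs from recommended by more than threshold_pct percent."""
    if recommended <= 0:
        return False  # No usable recommendation, so nothing to compare against.
    return abs(current - recommended) / recommended * 100 > threshold_pct


def rightsize(requests: dict, recommendation: dict, threshold_pct: float = 15.0) -> dict:
    """Return a copy of requests where values far from the recommendation are replaced."""
    result = dict(requests)
    for resource, recommended in recommendation.items():
        current = requests.get(resource)
        # Assumption: a missing request is simply filled in with the recommendation.
        if current is None or deviates(current, recommended, threshold_pct):
            result[resource] = recommended
    return result


# Hypothetical values: CPU is far above the recommendation, memory is close enough.
print(rightsize({"cpu_millicores": 1000, "memory_mib": 512},
                {"cpu_millicores": 400, "memory_mib": 500}))
```

In this run the CPU request is 150% above the recommendation and gets replaced, while the memory request is only 2.4% off and is left as the user defined it.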

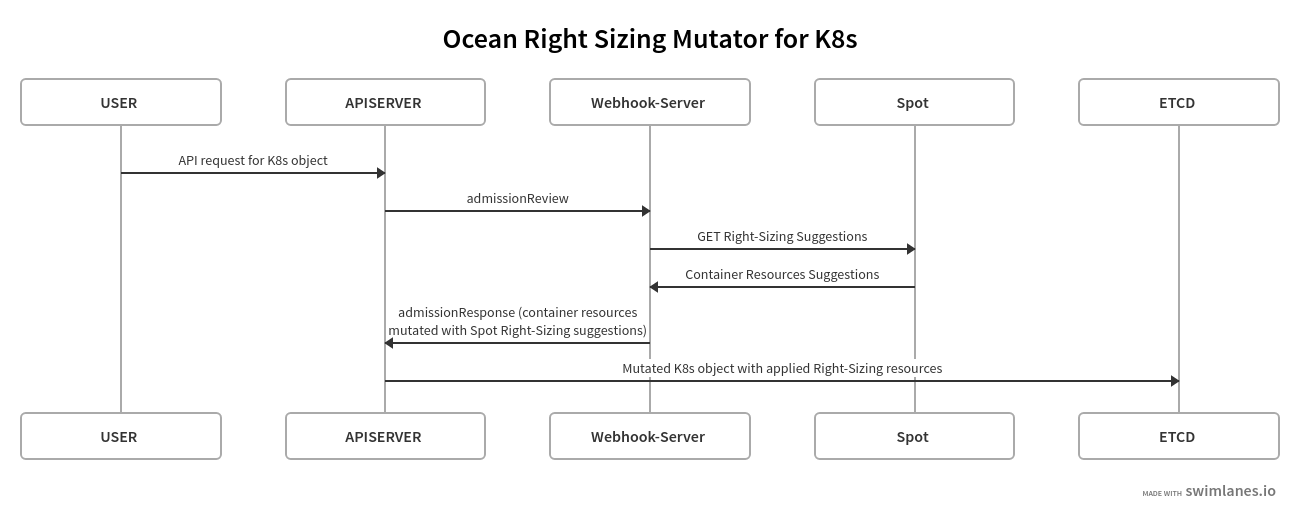

This is a diagram describing the process when using Ocean-Resource-Mutator in your K8s cluster:

What’s needed to develop your own Webhook-Server

I followed these examples:

- https://medium.com/ovni/writing-a-very-basic-kubernetes-mutating-admission-webhook-398dbbcb63ec

- https://medium.com/ibm-cloud/diving-into-kubernetes-mutatingadmissionwebhook-6ef3c5695f74

- Kubernetes E2E tests in their GitHub repository

In the following paragraphs, I’ll cover some of the important configurations that need to be taken into consideration.

TLS

Since the webhook server is able to mutate/deny any request targeting the APISERVER, it needs to be secured, and so it must serve a TLS certificate in order to operate. The certificate must include the following DNS subject alternative name (SAN): “webhook-service-name.webhook-service-namespace.svc”.

This name is used to verify the identity of the webhook server. In addition, in the MutatingWebhookConfiguration/ValidatingWebhookConfiguration object, the field “caBundle” needs to be set to the “base64-encoded PEM bundle containing the CA that signed the webhook’s serving certificate”. The reason for this is that the APISERVER acts as the TLS client when calling the webhook server, and it uses the CA in this PEM bundle to validate the server’s serving certificate.

You can find the way it is configured in the script that generates the certificates in our repository.
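As a rough illustration of how these two pieces fit together (and not a copy of the script in the repository), the Python sketch below derives the DNS name from the service name and namespace, and builds a MutatingWebhookConfiguration as a plain dictionary with the base64-encoded CA in “caBundle”. The service name, namespace, path, rules and the placeholder PEM content are all hypothetical.

```python
import base64
import json


def service_dns_name(service: str, namespace: str) -> str:
    """DNS name the serving certificate must carry as a subject alternative name."""
    return f"{service}.{namespace}.svc"


def build_webhook_config(service: str, namespace: str, ca_pem: bytes,
                         path: str = "/mutate") -> dict:
    """Build a MutatingWebhookConfiguration (admissionregistration.k8s.io/v1) as a dict."""
    return {
        "apiVersion": "admissionregistration.k8s.io/v1",
        "kind": "MutatingWebhookConfiguration",
        "metadata": {"name": f"{service}-cfg"},  # hypothetical object name
        "webhooks": [{
            "name": service_dns_name(service, namespace),  # any fully qualified name works
            "clientConfig": {
                "service": {"name": service, "namespace": namespace, "path": path},
                # The CA that signed the serving certificate, base64-encoded.
                "caBundle": base64.b64encode(ca_pem).decode("ascii"),
            },
            # Hypothetical scope: only Deployments being created or updated.
            "rules": [{"operations": ["CREATE", "UPDATE"], "apiGroups": ["apps"],
                       "apiVersions": ["v1"], "resources": ["deployments"]}],
            "admissionReviewVersions": ["v1"],
            "sideEffects": "None",
        }],
    }


# Placeholder PEM content; a real bundle comes from the CA that signed the certificate.
fake_ca = b"-----BEGIN CERTIFICATE-----\n...\n-----END CERTIFICATE-----\n"
print(json.dumps(build_webhook_config("my-webhook", "my-namespace", fake_ca), indent=2))
```

The output is valid JSON, which kubectl accepts just like YAML, so a dictionary like this could be written to a file and applied once a real CA bundle is plugged in.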

IDEMPOTENCE

Since the webhook might be triggered several times for a single API request (this is determined by the K8s APISERVER itself), it’s very important that your server is idempotent. That is, any additional request for an already mutated object must result in the same mutation/output as the initial request. The documentation gives us examples of non-idempotent operations, such as:

- Injecting a sidecar container into a pod with an auto-generated suffix. This can result in multiple sidecar containers in the same pod. For example: “sidecar-a2c23d”, “sidecar-f9ej3” and “sidecar-p3mm34” for 3 different invocations of the webhook.

- Processing K8s objects (pods for example) based on their labels, while changing these labels as part of the mutation. This can lead to a situation where the second invocation will process the object differently than the first invocation.
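For the sidecar case, one simple way to stay idempotent is to use a fixed container name and check for it before injecting. The following Python sketch shows the idea. The container name and image are hypothetical, and a real webhook would express the change as a JSON Patch rather than return a modified spec.

```python
import copy

SIDECAR_NAME = "sidecar"  # fixed, hypothetical name: no auto-generated suffix
SIDECAR_IMAGE = "example.com/sidecar:1.0"  # hypothetical image


def inject_sidecar(pod_spec: dict) -> dict:
    """Return a copy of pod_spec that contains exactly one sidecar container."""
    mutated = copy.deepcopy(pod_spec)
    containers = mutated.setdefault("containers", [])
    if any(c.get("name") == SIDECAR_NAME for c in containers):
        return mutated  # Already injected by an earlier invocation, so do nothing.
    containers.append({"name": SIDECAR_NAME, "image": SIDECAR_IMAGE})
    return mutated


spec = {"containers": [{"name": "app", "image": "example.com/app:2.3"}]}
once = inject_sidecar(spec)
twice = inject_sidecar(once)
print(once == twice)
```

Because the second invocation finds the sidecar already in place, it returns the same result as the first one.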

FAILING REQUESTS

When processing mutating requests, we should take into consideration how we handle errors (of any kind). If we fail the webhook when an error occurs (for example, if we get a null value as part of our processing), the object will be rejected by the cluster. There might be some cases where we want this to happen (rejecting the creation of an object in the cluster), but we need to be aware of it. Therefore, when designing the webhook server logic, we should decide whether or not we want to always allow the request, even if there is a failure in the business logic of the webhook server. In our Ocean Right-Sizing Mutator webhook server, we always allow the creation of the deployment, even if the response from our API is not valid or returns an error.
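The “always allow” choice can be sketched in Python as a thin wrapper around the business logic. This is an illustration of the pattern only, not the code of our webhook server. The `mutate` callback, which is assumed to return a list of JSON Patch operations, and the function names are hypothetical.

```python
import base64
import json
import logging


def handle_fail_open(review: dict, mutate) -> dict:
    """Run mutate(obj) and always allow the request, with or without a patch."""
    request = review["request"]
    response = {"uid": request["uid"], "allowed": True}
    try:
        patch_ops = mutate(request["object"])  # hypothetical business logic
        if patch_ops:
            response["patchType"] = "JSONPatch"
            response["patch"] = base64.b64encode(json.dumps(patch_ops).encode()).decode()
    except Exception as exc:
        # Fail open: log the problem and let the original object through unchanged.
        logging.warning("mutation skipped for request %s: %s", request["uid"], exc)
    return {"apiVersion": "admission.k8s.io/v1", "kind": "AdmissionReview",
            "response": response}


def broken_mutate(obj: dict) -> list:
    """Simulates a recommendation API that returned an invalid response."""
    raise ValueError("recommendation API returned an invalid response")


result = handle_fail_open({"request": {"uid": "42", "object": {}}}, broken_mutate)
print(result["response"])
```

If you prefer the opposite behavior, the `except` branch is where you would set `allowed` to false and attach a status message instead.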

Feel free to try our mutating webhook and of course I’d love to hear your feedback on it all.

Twitter: @tsahiduek