Kubernetes is one of the most popular container orchestration tools used in the vast microservices landscape. Originating from Google’s Borg, it supports automatic deployment, scaling, and management of containerized applications. Maintaining the networking inside clusters manually would be an ordeal. Fortunately, there are existing networking implementations that take care of the internal connectivity.

This article provides a brief overview of how communication between components inside the cluster is designed. Before we get into the details, let’s review the basic components of a Kubernetes cluster.

Basic K8s Concepts

Container: A standalone unit of software that runs a single instance of an application. It holds the application’s code and dependencies and runs them in an isolated environment. Containers are created from images that include everything required to run the application: the code, runtime, tools, libraries, and settings.

Pod: The smallest deployable Kubernetes unit, consisting of one or more containers that are scheduled together on a single node. Containers inside the pod share the network interface (the same network namespace and port space), volumes (which can be mounted inside any of the containers), and compute resources (CPU and memory requests and limits are set per container, and the scheduler places the pod on a node with enough capacity for all of them).

Node: Nodes are the worker machines that run containerized applications. Depending on how the cluster is hosted, nodes can be either virtual machines or physical computers. Each node provides the environment for running and managing pods inside the cluster.

Cluster: A set of virtual or physical machines made up of the control plane (processes such as the Kubernetes API server, scheduler, and core resource controllers) and the nodes (the worker machines covered above).

Service: A Kubernetes abstraction that routes traffic to a designated set of pods. Pods are ephemeral: they can be rescheduled to other nodes or deleted when no longer needed, and a replacement pod typically gets a new IP address. This is where Services come in, providing a stable IP address and DNS name for the pods selected by their labels.

AWS EKS Networking

With its somewhat steep learning curve and extensive setup, hosting Kubernetes can be challenging. While trying out new technologies is certainly enticing, a stable and reliable platform should always be the goal.

Following are the three basic models for Kubernetes deployment:

Self-hosted: All Kubernetes components are managed and maintained by the engineering team. While this offers the advantage of being able to fully customize the cluster, setting up and maintaining it requires more overhead.

Cloud: The Kubernetes control plane is managed by the cloud provider, and only the worker nodes must be handled by the engineering team.

Hybrid: This approach combines both models, with some parts of the infrastructure running in the cloud and some on-premises.

It is strongly advised to use managed Kubernetes if possible. Delegating the heavy lifting to external parties allows you to focus on your core business.

Amazon Web Services’ Elastic Kubernetes Service (EKS) is one of the most popular cloud-based managed Kubernetes options on the market.

Because the EKS control plane runs across multiple AWS Availability Zones, it avoids a single point of failure in the control plane. The list of benefits goes on, including easy integration with AWS Identity and Access Management (IAM) for authentication, Elastic Load Balancing for distributing traffic, and Amazon Virtual Private Cloud (VPC), along with many other AWS services for managing and configuring various pieces of infrastructure.

The AWS team has also developed the Amazon VPC CNI plugin for Kubernetes, which gives each pod an IP address from the VPC, so a pod has the same IP address inside the cluster as it does on the VPC network. But before diving deeper into this, let’s discuss the Kubernetes networking model.

Kubernetes Networking Model

The Kubernetes networking model supports multi-host networking: pods can communicate with each other without needing to run on the same host. Interestingly, the Kubernetes project itself does not ship a default implementation of this model. Instead, there are multiple implementations available, all of which must meet these requirements:

- Pods are able to communicate with all other pods, on any node, without using NAT.

- Nodes are able to communicate with all pods (and vice versa) without using NAT.

- The IP address a pod sees itself as is the same IP address that others see it as.

There are plenty of existing implementations of the networking model, the most popular among them being Flannel (which provides a layer-3 network fabric across hosts) and Calico (which provides pure IP-based, layer-3 networking). Instead of focusing on the implementation details of each of these solutions, let’s explore the nature of communication between Kubernetes resources.

Container-to-Container Networking

Container-to-container networking is fairly straightforward. As seen above, containers live together within a pod. Taking a closer look at the pod, you can see it is designed as a separate network namespace in which its containers cooperate. From inside the pod, all of the containers use the same network interface (eth0). This design has a number of benefits:

- Containers share the same IP address.

- Containers have access to the same shared volumes.

- Containers can communicate with each other via localhost.
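To make the shared network namespace concrete, here is a minimal Python sketch. It assumes that two threads in one process are a fair stand-in for two containers in one pod, since both share a single network namespace; the "app" and "sidecar" roles are hypothetical. The sidecar reaches the app simply by connecting to 127.0.0.1.

```python
import socket
import threading


def serve_once(server: socket.socket) -> None:
    """Play the 'app' container: accept one connection and reply to it."""
    conn, _ = server.accept()
    with conn:
        request = conn.recv(1024)
        conn.sendall(b"app received: " + request)


def sidecar_request(port: int, payload: bytes) -> bytes:
    """Play the 'sidecar' container: reach the app over localhost."""
    with socket.create_connection(("127.0.0.1", port)) as client:
        client.sendall(payload)
        return client.recv(1024)


# Both "containers" share one network namespace, so 127.0.0.1 reaches either.
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
server.listen(1)
port = server.getsockname()[1]

worker = threading.Thread(target=serve_once, args=(server,))
worker.start()
reply = sidecar_request(port, b"ping")
worker.join()
server.close()
print(reply)
```

In a real pod the two sides would be separate processes in separate containers, but because they share the pod's network namespace, the localhost connection works the same way.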

Pod-to-Pod Networking

Next, we’ll explore communication between the pods. In the first scenario, let’s assume that pods exist on the same node (e.g., Linux virtual machine).

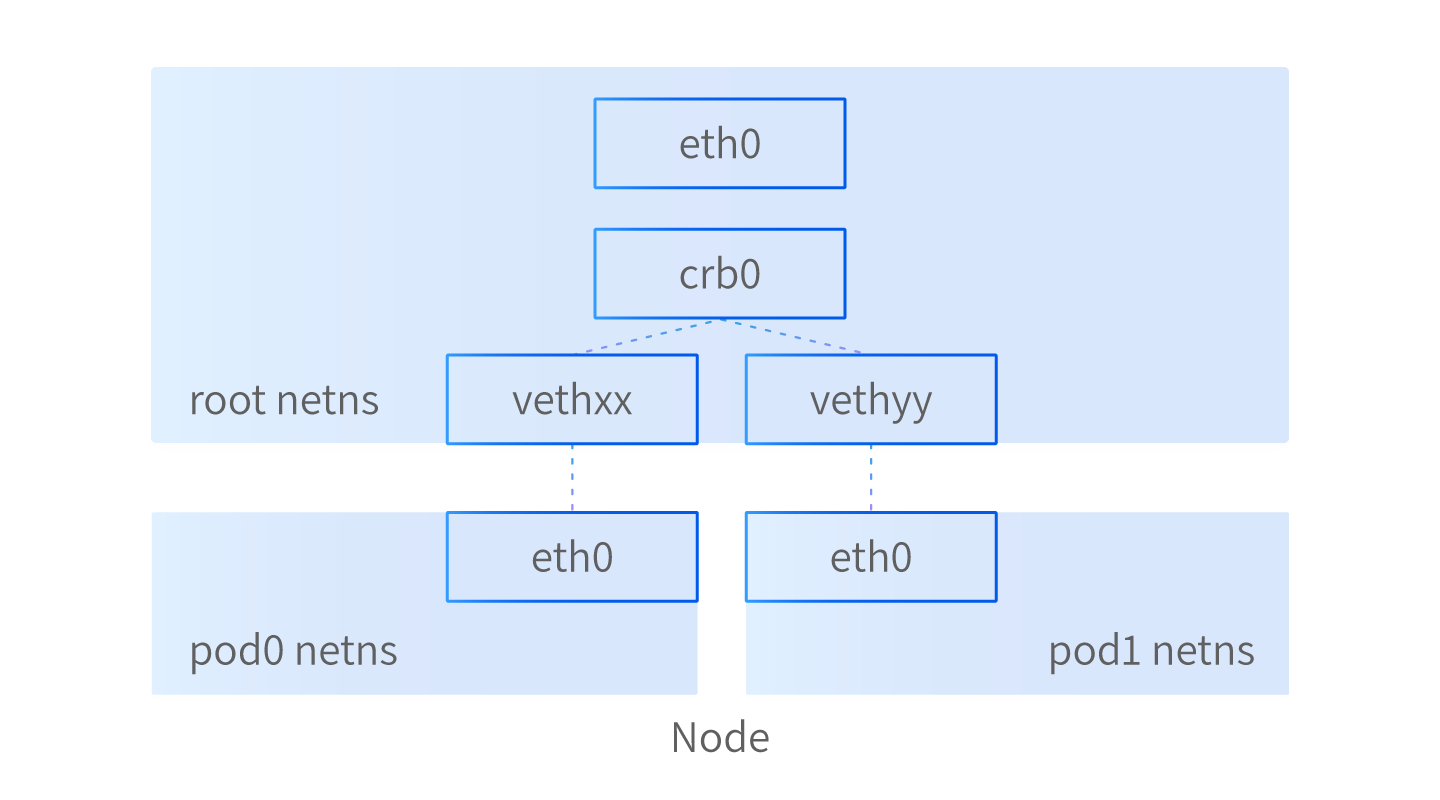

Our virtual machine has its own network namespace, called the root network namespace, and each pod has a separate network namespace of its own. First, we need to connect the node’s root network namespace to the pod’s network namespace. This is done using a virtual Ethernet (veth) device, which is a pair of virtual network interfaces. It acts as a pipe between the two namespaces, with one interface in each, enabling communication between them. Once each pod’s namespace is connected to the root network namespace, it’s easy to connect the pods to each other.

This is done using a network bridge. A network bridge is a layer-2 virtual network device that enables transparent communication between two or more network segments. In short, the bridge maintains a forwarding table that maps MAC addresses to the interfaces they were seen on, and it uses this table to decide whether a frame should be forwarded to another network segment or not. To find out which MAC address belongs to a destination IP, pods use the ARP protocol: the ARP request is broadcast, and the bridge floods it to all connected devices so that the owner of the IP can reply.
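The forwarding-table behavior can be sketched as a toy learning bridge in Python. This is a simplified model, not how the Linux bridge is implemented: the port names veth0 and veth1 follow the text, while veth2 (a hypothetical third pod) and the MAC addresses are assumptions. Frames to broadcast or unknown destinations (such as ARP requests) are flooded, and each frame's source MAC is recorded so that later frames can be forwarded to a single port.

```python
from dataclasses import dataclass, field
from typing import Dict, List

BROADCAST = "ff:ff:ff:ff:ff:ff"


@dataclass
class Bridge:
    """Toy layer-2 learning bridge; ports are interface names such as 'veth0'."""
    ports: List[str]
    # Forwarding table: MAC address -> port it was last seen on.
    fdb: Dict[str, str] = field(default_factory=dict)

    def receive(self, in_port: str, src_mac: str, dst_mac: str) -> List[str]:
        """Return the list of ports a frame is sent out of."""
        # Learn: the sender is reachable through the port the frame arrived on.
        self.fdb[src_mac] = in_port
        out_port = self.fdb.get(dst_mac)
        if dst_mac != BROADCAST and out_port is not None:
            # Known unicast destination: forward to that one port, or filter
            # the frame if the destination is on the segment it came from.
            return [] if out_port == in_port else [out_port]
        # Broadcast (e.g. an ARP request) or unknown destination: flood.
        return [p for p in self.ports if p != in_port]


# Hypothetical MACs for pod0 and pod1.
POD0_MAC, POD1_MAC = "0a:00:00:00:00:00", "0a:00:00:00:00:01"
cbr0 = Bridge(ports=["veth0", "veth1", "veth2"])

# pod0 broadcasts an ARP request for pod1's IP: flooded to every other port.
flooded = cbr0.receive("veth0", POD0_MAC, BROADCAST)
# pod1's ARP reply is unicast; the bridge has already learned pod0's port.
arp_reply = cbr0.receive("veth1", POD1_MAC, POD0_MAC)
# From now on, pod0 -> pod1 frames go straight to veth1.
data = cbr0.receive("veth0", POD0_MAC, POD1_MAC)
print(flooded, arp_reply, data)  # ['veth1', 'veth2'] ['veth0'] ['veth1']
```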

Here’s how pod0 would communicate with pod1:

- Pod0 creates a packet and sends it to its own network interface, eth0.

- The packet passes from pod0’s eth0 into the root network namespace via its paired virtual interface, veth0, which is attached to bridge cbr0.

- Bridge cbr0 uses the ARP protocol to find the correct network segment, veth1.

- The packet arrives at veth1 and travels through the pipe to pod1’s eth0.

This scenario is fairly straightforward, but what happens when we have pods on different nodes?

In such cases, after the packet leaves the pod network namespace, the bridge is unable to match the destination pod’s MAC address, since no device attached to it owns that IP. Instead, it sends the packet out through the node’s network interface (eth0) in the root network namespace.

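Each node is assigned its own CIDR block for pod IP addresses. Because the destination IP falls within another node’s block, the packet is routed to that node. This routing is typically handled by the network plugin or the underlying network (for example, through route tables, an overlay, or BGP); kube-proxy, which programs iptables rules, is responsible for Service traffic rather than pod-to-pod routing. After arriving at the destination node, the packet is forwarded to bridge cbr0 and follows a similar journey as in the same-node scenario.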
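The routing decision can be illustrated with Python's standard `ipaddress` module. The node names and pod CIDR blocks below are hypothetical, and in a real cluster this lookup happens in the node's networking stack rather than in application code. The sketch only shows the logic: find which node's block contains the destination IP, then deliver locally or forward.

```python
import ipaddress

# Hypothetical cluster: each node owns one non-overlapping pod CIDR block.
NODE_POD_CIDRS = {
    "node-a": ipaddress.ip_network("10.244.0.0/24"),
    "node-b": ipaddress.ip_network("10.244.1.0/24"),
    "node-c": ipaddress.ip_network("10.244.2.0/24"),
}


def route(src_node: str, dst_ip: str) -> str:
    """Decide where a packet from a pod on src_node should go next."""
    addr = ipaddress.ip_address(dst_ip)
    for node, cidr in NODE_POD_CIDRS.items():
        if addr in cidr:
            # Same node: deliver via the local bridge; otherwise send to the owner node.
            return "local bridge cbr0" if node == src_node else f"forward to {node}"
    # Not a pod IP at all: leave through the node's default route.
    return "default route"


print(route("node-a", "10.244.0.7"))   # local bridge cbr0
print(route("node-a", "10.244.1.12"))  # forward to node-b
print(route("node-a", "8.8.8.8"))      # default route
```

Because the blocks do not overlap, a simple membership test is enough here; a real routing table would use longest-prefix matching.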

Back to EKS Networking

Now that you have a better understanding of how networking works inside Kubernetes clusters, let’s get back to the AWS Elastic Kubernetes Service.

The Amazon VPC Container Network Interface (CNI) plugin for Kubernetes allows pods to have the same IP address inside the cluster as in the VPC. Each node (an EC2 instance) can attach multiple Elastic Network Interfaces (ENIs). When a new pod is created, it is assigned a secondary IP address from one of the node’s ENIs, and that address can be used natively within the AWS VPC network. For example, an m4.4xlarge node can have up to 8 ENIs, and each ENI can have up to 30 IP addresses.
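These limits bound how many pods a node can host. A commonly cited estimate for the VPC CNI in its default mode (without prefix delegation) is sketched below; treat it as a back-of-the-envelope calculation rather than an authoritative limit. It assumes each ENI reserves its primary IP for itself and that two host-network pods (such as aws-node and kube-proxy) need no secondary VPC IP of their own.

```python
def max_pods(enis: int, ips_per_eni: int, host_network_pods: int = 2) -> int:
    """Back-of-the-envelope pod capacity for a node using the VPC CNI."""
    # The primary IP of each ENI belongs to the ENI itself, so only the
    # secondary addresses are handed out to pods.
    pod_ips = enis * (ips_per_eni - 1)
    # Host-network pods (assumed here: aws-node and kube-proxy) share the
    # node's own IP, so they do not consume a secondary address.
    return pod_ips + host_network_pods


# m4.4xlarge figures from the text: 8 ENIs x 30 IPs each.
print(max_pods(enis=8, ips_per_eni=30))  # 234
```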

Pod-to-Service Networking

Pods are not eternal. They can be created, deleted, scaled up or down, or moved between nodes. To use Kubernetes to its full potential, we need one more mechanism for reliably connecting to pods. This is why we use Services. A Service is a Kubernetes resource that provides a virtual IP for the set of pods that match its label selector.
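Service selectors are equality-based: a pod is selected when its labels contain every key/value pair in the selector. The Python sketch below models only that matching step; the pod names, IPs, and labels are hypothetical, and in Kubernetes the resulting list of pod IPs is maintained by the control plane as the Service's endpoints.

```python
from typing import Dict, List

Labels = Dict[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    """Equality-based match: every selector key must exist with the same value."""
    return all(labels.get(key) == value for key, value in selector.items())


def select_pod_ips(selector: Labels, pods: List[dict]) -> List[str]:
    """Return the IPs of the pods a Service with this selector would target."""
    return [pod["ip"] for pod in pods if matches(selector, pod["labels"])]


# Hypothetical pods: two web replicas on different nodes and one database.
pods = [
    {"name": "web-1", "ip": "10.244.0.7", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "web-2", "ip": "10.244.1.12", "labels": {"app": "web", "tier": "frontend"}},
    {"name": "db-1", "ip": "10.244.2.3", "labels": {"app": "db"}},
]

web_endpoints = select_pod_ips({"app": "web"}, pods)
print(web_endpoints)  # ['10.244.0.7', '10.244.1.12']
```

Extra labels on a pod (such as `tier` above) do not prevent a match; only the selector's keys are checked.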

A Service gives those pods a single stable IP address and DNS entry inside the cluster. The translation from the virtual IP to a matching pod IP is performed by kube-proxy, which programs iptables (or IPVS) rules on each node.

When more than one replica matches the selector, the Service load-balances traffic across them.
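The virtual-IP translation and load balancing can be pictured as a per-node lookup table that rewrites a Service destination to one pod destination, much like DNAT. The toy Python model below is a sketch under stated assumptions, not kube-proxy's implementation: the VIP, ports, and pod IPs are hypothetical, and it uses round-robin for deterministic output (kube-proxy's iptables mode picks backends at random, while its IPVS mode defaults to round-robin).

```python
from typing import Dict, List, Tuple

Addr = Tuple[str, int]


class ServiceProxy:
    """Toy per-node Service table: rewrites VIP:port to one pod IP:port."""

    def __init__(self) -> None:
        self._endpoints: Dict[Addr, List[Addr]] = {}
        self._next: Dict[Addr, int] = {}

    def set_endpoints(self, vip: str, port: int, pod_ips: List[str], target_port: int) -> None:
        self._endpoints[(vip, port)] = [(ip, target_port) for ip in pod_ips]
        self._next[(vip, port)] = 0

    def translate(self, dst_ip: str, dst_port: int) -> Addr:
        """Return the destination a packet is rewritten to (DNAT-style)."""
        key = (dst_ip, dst_port)
        if key not in self._endpoints:
            return key  # not a Service VIP: destination left untouched
        backends = self._endpoints[key]
        if not backends:
            raise ConnectionRefusedError(f"{dst_ip}:{dst_port} has no endpoints")
        # Round-robin: hand out backends in order, wrapping around.
        i = self._next[key]
        self._next[key] = (i + 1) % len(backends)
        return backends[i]


# Hypothetical Service VIP 10.96.0.50:80 backed by two web pods on port 8080.
proxy = ServiceProxy()
proxy.set_endpoints("10.96.0.50", 80, ["10.244.0.7", "10.244.1.12"], 8080)
picks = [proxy.translate("10.96.0.50", 80) for _ in range(3)]
print(picks)  # [('10.244.0.7', 8080), ('10.244.1.12', 8080), ('10.244.0.7', 8080)]
```

Clients only ever address the stable VIP; when pods come and go, only the endpoint list behind it changes.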

Worry-Free Networking

Cluster networking may at first glance appear to be complex, but its implementation is in fact quite logical and very useful. Instead of reinventing the wheel, it’s best to let the cloud providers do the heavy lifting. Taking advantage of mature products such as AWS Elastic Kubernetes Service is thus a rational choice for cloud-native application development.

That’s all for now!