Reading Time: 6 minutes

Should you run big data workloads on Kubernetes? Not so long ago, most people would have said certainly not. Among the reasons you might have heard:

- Big data workloads needed direct access to storage and network resources

- Kubernetes schedulers didn’t understand the specific needs of big data workloads

- Support for monitoring in Kubernetes was too limited

- Integration with big data software wasn’t advanced enough to make it easy to operate software like Spark, Kafka, and similar in Kubernetes-managed containers

Although early adoption of Kubernetes was dominated by stateless services rather than data-centric applications, more and more teams responsible for big data platforms are now looking to adopt Kubernetes, especially those looking to deploy and operate big data workloads in the cloud. With its inherent scalability, Kubernetes is in fact on its way to becoming a primary way big data teams run their applications.

What’s changed? Kubernetes’s architecture and capabilities have always made it appealing for deploying and operating scalable distributed applications on large-scale infrastructure, but unlocking that value proposition had been complex and impractical until developments in Kubernetes and its ecosystem emerged to make those benefits accessible and achievable.

Let’s take a closer look.

The Case for Kubernetes for Big Data Software

The reasons to consider using Kubernetes and containers to support big data software are based on the core benefit of Kubernetes: it makes it easier for infrastructure and operations teams to deploy, scale and manage software and resources in a flexible, reliable way. Teams responsible for big data platforms and infrastructure already have more than enough to do to ensure that data scientists and data engineers have access to the data and systems that they need, so solutions that simplify and streamline infrastructure management matter. As Kubernetes is increasingly regarded as an operating system for the cloud, big data platform teams are adopting it for these workloads as well.

Ensuring Portability

Big data software is typically deployed in a wide variety of environments—not only are there multiple production clusters processing data, but there are also clusters for testing, staging, development, data exploration and more that are deployed on diverse infrastructure.

Packaging big data software and its dependencies into containers managed by Kubernetes makes it easy to deploy big data software anywhere, without needing to reconfigure the components to fit the underlying hardware and software infrastructure and regardless of where that infrastructure is running. For example, the same stack can be easily reproduced in different cloud regions or even private clouds, using different hardware generations or different instance types, without requiring reconfiguration and repackaging.

Simplification

A related benefit is that using containers and Kubernetes makes it significantly easier to build and deploy big data applications in a reliable and repeatable way. That is particularly important for big data applications, which consist of many components, each of which has a very specific list of dependencies and configuration requirements.

With containers and Kubernetes, you can avoid the complexity of mismatched library versions and component compatibility for Hadoop, Cassandra, Spark or similarly complex systems. Not only that, you can experiment with multiple versions using version control and tagging of containers to make it easy to deploy and update each without creating conflicts and incompatibilities. That makes it far easier to quickly create deployments for data engineers and data scientists to use for experiments, data exploration, tests and new applications, without consuming time and energy of DevOps and infrastructure teams to set up, size and scale the infrastructure and software components.

Resource Management

Big data workloads, particularly those supporting development, data exploration and testing, can have large infrastructure needs when running, but also have highly variable usage. Dedicated clusters for each deployment create significant costs through inefficient use of resources.

Rather than forcing you to create multiple siloed infrastructure clusters, Kubernetes makes it possible to efficiently share resources so that the same cluster can be safely used for multiple and even concurrent applications, increasing utilization while avoiding dependency conflicts or unmanaged resource competition. Kubernetes capabilities such as namespaces and resource quotas ensure that different workloads can share resources fairly, while node selectors and roles can be used to isolate both resources and access as needed.
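To make the sharing model more concrete, here is a minimal sketch that builds the kind of manifests involved: a namespace per team, a resource quota capping what that namespace can request, and a pod pinned to labeled nodes with a node selector. The team name, quota sizes, node label and image name are illustrative assumptions, not recommendations, and the helper functions are hypothetical names chosen for this example.

```python
import json


def namespace(name: str) -> dict:
    """Namespace manifest: the unit that quotas and access rules attach to."""
    return {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": name}}


def resource_quota(ns: str, cpu: str, memory: str) -> dict:
    """ResourceQuota manifest capping the total CPU and memory requests in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": f"{ns}-quota", "namespace": ns},
        "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory}},
    }


def pinned_pod(name: str, ns: str, image: str, node_labels: dict) -> dict:
    """Pod manifest that is only scheduled onto nodes carrying the given labels."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": ns},
        "spec": {
            "nodeSelector": node_labels,
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Explicit requests are needed once a quota on requests is in place.
                    "resources": {"requests": {"cpu": "1", "memory": "2Gi"}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical values: one team, one quota, one pod on "batch" nodes.
    items = [
        namespace("team-a"),
        resource_quota("team-a", cpu="40", memory="160Gi"),
        pinned_pod("explore", "team-a", "example.invalid/spark:latest", {"workload": "batch"}),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

The printed JSON is intended to be saved to a file or passed to `kubectl apply`; in practice most teams keep equivalent YAML under version control instead of generating it.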

Unlocking the Benefits of Kubernetes for Big Data Software

While those benefits have been a fundamental part of Kubernetes and containers from the start, advancements in Kubernetes and big data software have made them far more easily accessible, helping open the door to the growing use of Kubernetes for big data infrastructure.

Ease of Deployment

The availability of operators for common big data platforms has made it significantly easier to deploy big data software on Kubernetes. Specialized Kubernetes operators now exist for Apache Kafka, Apache Spark, Apache Cassandra and many other systems. Those operators unlock the portability benefits of Kubernetes, making it easy to deploy big data software in a variety of environments in a consistent, reliable way.

Growing Ecosystem

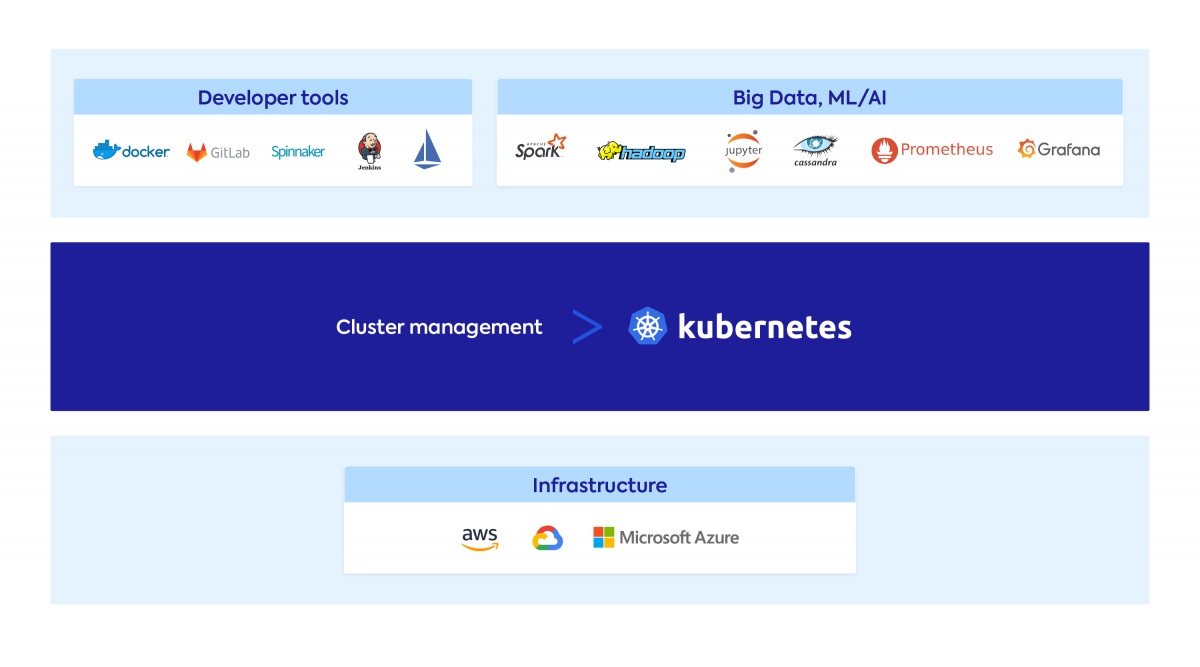

Although the limited ecosystem of tools that understand and work with Kubernetes may have been an obstacle for big data solutions in the early days of Kubernetes, over time Kubernetes has developed a broad and rich ecosystem of solutions for monitoring, logging, security and more that can be used with big data platforms. Looking for tools to help collect and examine all of the logs that each node in a Hadoop cluster generates? A quick search will find many relevant tutorials on topics such as setting up Fluentd with Kubernetes, using Elasticsearch to examine Kubernetes logs, and more. Similarly, you can easily find examples of ways to monitor the performance of Apache Spark clusters on Kubernetes, using Prometheus to collect metrics that can then be viewed in pre-built Grafana dashboards for Spark.

Advances in Resource Management

Another factor that has made it easier to use Kubernetes to support big data workloads is the evolution of resource management in both Kubernetes and big data software. For example, consider scheduling: cluster managers such as YARN or Mesos are used with or by some big data software platforms to help allocate tasks to resources. Although those cluster managers were advances over basic standalone managers, they weren’t designed for Kubernetes: the complexities of setting up load balancing and queues make it difficult to use these schedulers with Kubernetes. As a result, using the same cluster for concurrent applications, for example two different Spark clusters, forces you to compromise on both dependency isolation and performance isolation.

Recent updates in both Kubernetes and in big data solutions have made it possible and even advantageous to utilize Kubernetes’s resource management with those solutions. Take the example of Spark. Spark recently introduced (currently experimental) support for the use of Kubernetes as a scheduler, formalizing what had until then required third-party add-ons. This support allows you to use Kubernetes to help schedule Spark tasks to resources, taking advantage of the resource management, isolation and load balancing built into Kubernetes. That offers significant improvements over using other schedulers with Spark, making it easier to simultaneously run multiple versions of Spark and other big data tools, share nodes for different tasks without the need to statically partition nodes, and co-schedule real-time and batch workloads.
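As a rough illustration of what using Kubernetes as the Spark scheduler looks like, the sketch below assembles a `spark-submit` command that targets a Kubernetes API server through a `k8s://` master URL. The API server address, namespace, image name, main class and jar path are placeholders assumed for this example, and `spark_submit_args` is a hypothetical helper, not part of Spark.

```python
import shlex


def spark_submit_args(
    api_server: str,
    image: str,
    main_class: str,
    app_jar: str,
    namespace: str = "spark-jobs",
    executors: int = 3,
    name: str = "example-job",
) -> list:
    """Build a spark-submit argument list that uses Kubernetes as the cluster manager."""
    return [
        "spark-submit",
        # The k8s:// prefix tells Spark to schedule through the Kubernetes API server.
        "--master", f"k8s://{api_server}",
        # In cluster mode the driver itself runs in a pod.
        "--deploy-mode", "cluster",
        "--name", name,
        "--class", main_class,
        "--conf", f"spark.kubernetes.namespace={namespace}",
        "--conf", f"spark.kubernetes.container.image={image}",
        "--conf", f"spark.executor.instances={executors}",
        app_jar,
    ]


if __name__ == "__main__":
    # All values below are placeholders for illustration only.
    args = spark_submit_args(
        api_server="https://example.invalid:6443",
        image="example.invalid/spark:latest",
        main_class="com.example.Job",
        app_jar="local:///opt/spark/app/app.jar",
    )
    print(shlex.join(args))
```

Because the namespace and container image are ordinary parameters, two jobs built on different Spark versions can be submitted to the same cluster by changing only the image and namespace arguments, which is the kind of sharing described above.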

Performance

Advances in the maturity and sophistication of schedulers for Kubernetes have addressed another common concern—whether Kubernetes can deliver the performance needed for big data processing. As described in a recent AWS blog post that discusses benchmark tests of Spark on Kubernetes, Spark performance using the Kubernetes scheduler can actually exceed performance with other schedulers more commonly used with Spark. Using the TPC-DS benchmark, the AWS team observed performance that was 5% faster when using Kubernetes as a scheduler compared to using YARN.

Taking the Next Step

Once you’ve decided that you want to use Kubernetes to support your big data deployment, it’s important to make sure that you take advantage of the learnings, tools and technologies that are available to make that as painless as possible. For one, you can start by reading how other people have done it, for example how Target deployed Apache Cassandra on Kubernetes or how Adobe got started with Apache Kafka on Kubernetes.

In upcoming posts, we’ll be taking a deeper look at running big data solutions on Kubernetes, including a look at some of the ways that our customers are doing that and how they’re using technology from Spot by NetApp to help in those efforts. If you want to learn more about that right away, one place to get started is to check out Ocean, our solution for automating and optimizing cloud infrastructure for Kubernetes and containers. For a deeper dive and to see how to try it out using our free trial, take a look at the Ocean documentation and at our tutorials on setting up Apache Spark with Kubernetes.